- WHAT IS CYBERSECURITY?

Cybersecurity refers to a set of techniques used to protect the integrity of networks, programs and data from attack, damage or unauthorized access.

https://www.paloaltonetworks.com/cyberpedia/what-is-cyber-security

- Data privacy vs Cybersecurity vs Information Security

Data privacy relates to business policies that define appropriate data management such as collection, retention, and deletion. Cybersecurity comprises methods for protecting networks, devices, and data from unauthorized access, and ensuring the confidentiality, integrity, and availability of all that information. Information Security includes cybersecurity and physical security.

https://training.fortinet.com/pluginfile.php/1057447/mod_resource/content/9/NSE1_Lesson_Scripts-EN.pdf

- Threat

A threat is any type of danger that can damage or steal data, create a disruption, or cause harm in general. Common examples of threats include malware, phishing, data breaches and even rogue employees.

Vulnerability

A vulnerability is a weakness in hardware, software, personnel or procedures, which may be exploited by threat actors in order to achieve their goals.

Risk

Risk is a combination of the threat probability and the impact of a vulnerability. In other words, risk weighs the probability of a threat agent successfully exploiting a vulnerability against the impact that exploitation would have.

Risk = Threat Probability * Vulnerability Impact.
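The formula can be applied to a small risk register to rank what to fix first. This Python sketch is illustrative only: the items, probabilities, and dollar impacts are hypothetical, and it assumes impact is expressed as a monetary loss so that the products are comparable.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    """One entry in a hypothetical risk register."""
    name: str
    threat_probability: float  # chance the threat exploits the vulnerability (0.0 to 1.0)
    impact: float              # assumed loss if it does, e.g. in dollars

    def risk(self) -> float:
        # Risk = Threat Probability * Vulnerability Impact
        if not 0.0 <= self.threat_probability <= 1.0:
            raise ValueError("threat_probability must be between 0 and 1")
        return self.threat_probability * self.impact


items = [
    RiskItem("unpatched web server", 0.30, 200_000),
    RiskItem("phishing of finance staff", 0.60, 50_000),
    RiskItem("lost unencrypted laptop", 0.10, 80_000),
]

# Rank the register so the largest expected losses come first.
ranked = sorted(items, key=lambda item: item.risk(), reverse=True)
for item in ranked:
    print(f"{item.name}: {item.risk():,.0f}")
```

Here the less likely web-server threat ranks first because its impact is four times larger than the phishing scenario's.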

https://lifars.com/2020/07/threat-vulnerability-risk-what-is-the-difference/

- Safety: the state of being safe; exemption from hurt or injury; freedom from danger. Example: a safety chain is a chain providing additional security.

Security: the condition of being protected from or not exposed to danger.

https://www.quora.com/What-is-the-difference-between-safety-and-security

- What is a Zero-Day Vulnerability?

http://www.pctools.com/security-news/zero-day-vulnerability/

- A zero-day vulnerability, at its core, is a flaw. It is an unknown exploit in the wild that exposes a vulnerability in software or hardware and can create complicated problems well before anyone realizes something is wrong. In fact, a zero-day exploit leaves NO opportunity for detection.

Vulnerability Timeline

A zero-day attack happens once that flaw, or software/hardware vulnerability, is exploited before the developer has a patch for it:

1. A company’s developers create software, but unbeknownst to them, it contains a vulnerability.

2. The threat actor spots that vulnerability either before the developer does or acts on it before the developer has a chance to fix it.

3. The attacker writes and implements exploit code while the vulnerability is still open and available.

4. After releasing the exploit, either the public recognizes it in the form of identity or information theft or the developer catches it and creates a patch to staunch the

Once a patch

https://www.fireeye.com/current-threats/what-is-a-zero-day-exploit.html
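The timeline can be expressed as a check on dates. This Python sketch assumes two simplified definitions drawn from the notes in this section: an exploit counts as zero-day if it is used before any patch exists, and the "days" are the number of days the vendor had known about the hole when exploitation began. The function names and dates are hypothetical.

```python
from datetime import date
from typing import Optional


def is_zero_day_exploit(exploited_on: date, patch_released_on: Optional[date]) -> bool:
    """True if the exploit was used while no patch was available yet."""
    return patch_released_on is None or exploited_on < patch_released_on


def days_vendor_aware(vendor_aware_on: Optional[date], exploited_on: date) -> int:
    """Days the vendor had known about the hole when it was first exploited.
    Zero means the vendor learned of it only when (or after) it was exploited."""
    if vendor_aware_on is None or vendor_aware_on >= exploited_on:
        return 0
    return (exploited_on - vendor_aware_on).days


# Hypothetical timeline: exploited before the vendor knew, patched later.
exploited = date(2018, 3, 1)
vendor_aware = date(2018, 3, 10)
patched = date(2018, 3, 20)
print(is_zero_day_exploit(exploited, patched))         # True
print(days_vendor_aware(vendor_aware, exploited))      # 0

# The same flaw exploited after the patch shipped is no longer a zero-day.
print(is_zero_day_exploit(date(2018, 4, 1), patched))  # False
```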

- Cyber-insurance is an insurance product used to protect businesses and individual users from Internet-based risks, and more generally from risks relating to information technology infrastructure and activities. Such risks are typically excluded from, or not specifically defined in, traditional insurance products. Cyber-insurance policies may include first-party coverage against losses such as data destruction, extortion, theft, hacking, and denial of service attacks; liability coverage indemnifying companies for losses to others caused, for example, by errors and omissions, failure to safeguard data, or defamation; and other benefits including regular security audits, post-incident public relations and investigative expenses, and criminal reward funds.

- Zero-day

Zero-day vulnerability refers to a security hole in software (such as browser software or operating system software) that is yet unknown to the software maker or to antivirus vendors.

Zero-day exploit refers to code that attackers use to take advantage of a zero-day vulnerability.

The term “zero-day” refers to the number of days that the software vendor has known about the hole.

https://www.wired.com/2014/11/what-is-a-zero-day/

- Information Security

Information Security (also known as

Cybersecurity

A physical security component is part of both Information Security and Cybersecurity. Either a data

In Information Security, the main concern is safeguarding the data of the company from illegal access of any kind, whereas, in Cybersecurity, the main concern is safeguarding the data of the company from illegal digital access.

https://www.hack2secure.com/blogs/cyber-security-vs-information-security

- Information security (or “

InfoSec This is often referred

Cybersecurity is all about protecting data that

Overlap Between Information Security & Cybersecurity

There is a physical security component to both cybersecurity and information security

They both take the value of the data into consideration.

If you’re in information security, your main concern is protecting your company's data from unauthorized access of any sort—and if you’re in cybersecurity, your main concern is protecting your company’s data from

https://www.bitsighttech.com/blog/cybersecurity-vs-information-security

- Cyber Hygiene: A Baseline Set of Practices

https://resources.sei.cmu.edu/asset_files/Presentation/2017_017_001_508771.pdf

- Defining Cyber Hygiene

To achieve security within the domain, we need to adopt good cyber hygiene.

It includes organizing security in hardware, software and IT infrastructure, continuous network monitoring, and employee awareness and training.

The Center for Internet Security (CIS) and the Council on Cyber Security (CCS) define

The campaign’s top priorities address the vast majority of known

Count: Know what’s connected to and running on your network

Configure: Implement key security settings to help protect your systems

Control: Limit and manage those who have administrative privileges for security settings

Patch: Regularly update all apps, software, and operating systems

Repeat: Regularly revisit the Top Priorities to form a solid foundation of
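The "Count" priority comes down to comparing what is actually on the network with what is supposed to be there. A minimal Python sketch, assuming the discovered device list comes from some network scan or DHCP log (not shown), that MAC addresses are already normalized to lowercase, and using made-up addresses:

```python
from __future__ import annotations


def inventory_gaps(authorized: set[str], discovered: set[str]) -> dict[str, set[str]]:
    """Compare an authorized asset inventory with devices seen on the network."""
    return {
        # Seen on the network but never approved: investigate or block.
        "unauthorized": discovered - authorized,
        # Approved but not seen: possibly lost, retired, or offline.
        "missing": authorized - discovered,
    }


authorized = {"00:1a:2b:3c:4d:01", "00:1a:2b:3c:4d:02", "00:1a:2b:3c:4d:03"}
discovered = {"00:1a:2b:3c:4d:01", "00:1a:2b:3c:4d:03", "66:77:88:99:aa:bb"}

gaps = inventory_gaps(authorized, discovered)
print(sorted(gaps["unauthorized"]))  # ['66:77:88:99:aa:bb']
print(sorted(gaps["missing"]))       # ['00:1a:2b:3c:4d:02']
```

Running the comparison on a schedule is one way to satisfy the "Repeat" priority for this step.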

AAA

Access Controls

Monitoring

Plan

Security Patches and Remediation process

Managed Cyber Risks

Non-technical issues mainly center on the absence of employee training, security awareness, organization policies and social engineering awareness.

https://resources.infosecinstitute.com/the-importance-of-cyber-hygiene-in-cyberspace/#gref

- Authentication, authorization and accounting (AAA) is a system for tracking user activities on an IP-based network and controlling their access to network resources. AAA is often

is implemented

https://www.techopedia.com/definition/24130/authentication-authorization-and-accounting-aaa

- Authentication, authorization, and accounting (AAA) is a term for a framework for intelligently controlling access to computer resources, enforcing policies, auditing usage, and providing the information necessary to bill for services

http://searchsecurity.techtarget.com/definition/authentication-authorization-and-accounting
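The three A's can be seen as three separate checks in one request path. This Python sketch is a local, in-memory illustration under stated assumptions (a hypothetical user store, permission table, and log format); real AAA deployments typically delegate these steps to a server speaking a protocol such as RADIUS or TACACS+, which is not modeled here.

```python
from __future__ import annotations

import hashlib
import hmac
import os
from datetime import datetime, timezone

ITERATIONS = 100_000  # PBKDF2 work factor chosen for illustration


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


# Hypothetical stores: user -> (salt, password hash), user -> allowed actions.
_salt = os.urandom(16)
CREDENTIALS = {"alice": (_salt, hash_password("correct horse", _salt))}
PERMISSIONS = {"alice": {"read:reports"}}
ACCOUNTING_LOG: list[dict] = []


def authenticate(user: str, password: str) -> bool:
    """Authentication: is the user who they claim to be?"""
    record = CREDENTIALS.get(user)
    if record is None:
        return False
    salt, stored = record
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(stored, hash_password(password, salt))


def authorize(user: str, action: str) -> bool:
    """Authorization: is this user allowed to perform this action?"""
    return action in PERMISSIONS.get(user, set())


def account(user: str, action: str, allowed: bool) -> None:
    """Accounting: record who tried to do what, when, and the outcome."""
    ACCOUNTING_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "allowed": allowed,
    })


def handle_request(user: str, password: str, action: str) -> bool:
    allowed = authenticate(user, password) and authorize(user, action)
    account(user, action, allowed)
    return allowed


print(handle_request("alice", "correct horse", "read:reports"))    # True
print(handle_request("alice", "correct horse", "delete:reports"))  # False
print(handle_request("alice", "wrong password", "read:reports"))   # False
print(len(ACCOUNTING_LOG))                                          # 3
```

Every attempt is logged, including failed ones, because accounting data is what later audits and billing rely on.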

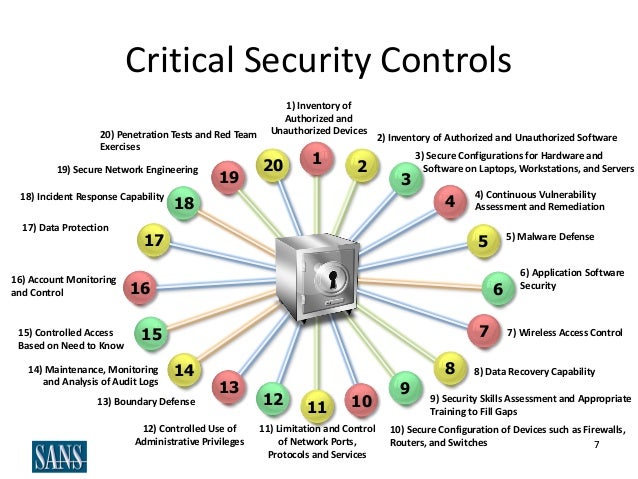

- The Critical Security Controls: Basic Cybersecurity Hygiene for your Organization

Fortunately, there is a

https://blog.qualys.com/news/2017/10/12/the-critical-security-controls-basic-cyber-security-hygiene-for-your-organization

- Implementing the CIS 20 Critical Security Controls: Building Upon Foundational Cyber Hygiene

You can significantly lower the risk of being victimized by this

This set of 20 structured

https://www.marketscreener.com/QUALYS-INC-11612572/news/Implementing-the-CIS-20-Critical-Security-Controls-Building-Upon-Foundational-Cyber-Hygiene-25582102/

- CIS Controls V7 Measures & Metrics

The CIS Controls

https://www.cisecurity.org/white-papers/cis-controls-v7-measures-metrics/

- CIS Controls Measures and Metrics for Version 7

https://www.cisecurity.org/wp-content/uploads/2018/03/CIS-Controls-Measures-and-Metrics-for-Version-7-cc-FINAL.pdf

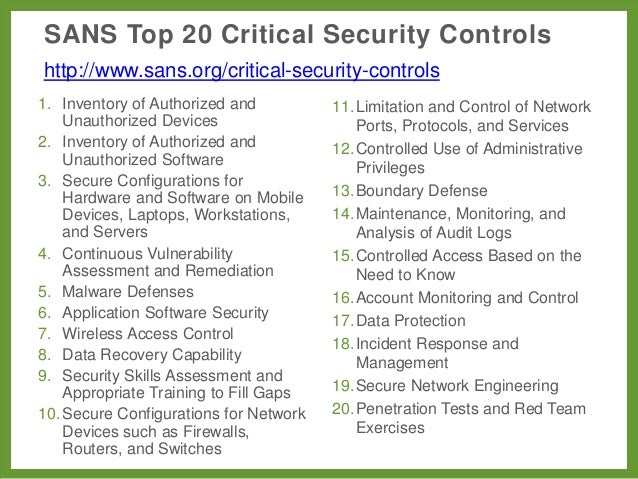

- Top 20 Critical Security Controls for Effective Cyber Defense

The complete list of CIS Critical Security Controls, version 6.1

The CIS CSC is a set of 20 controls (sometimes called the SANS Top 20) designed to help organizations safeguard their systems and data from known attack vectors. It can also be an effective guide for companies that do not yet have a coherent security program. Although the CIS Controls are not a replacement for any existing compliance scheme, the controls map to several major compliance frameworks (e.g., the NIST Cybersecurity framework) and regulations (e.g., PCI DSS and HIPAA).

Implementing the Controls: A Pragmatic Approach

Getting value from the CIS Critical Security Controls does not

A more pragmatic approach to implementing the controls includes the following steps:

1. Discover your information assets and estimate their value. Perform

2. Compare your current security controls to the CIS Controls. Make

3. Develop a plan for adopting the most valuable new security controls and improving the operational effectiveness of your existing controls.

4. Implement the controls.

https://blog.netwrix.com/2018/02/01/top-20-critical-security-controls-for-effective-cyber-defense/

- The 20 CIS Controls & Resources

Basic CIS Controls

1. Inventory and Control of Hardware Assets

2. Inventory and Control of Software Assets

3. Continuous Vulnerability Management

4. Controlled Use of Administrative Privileges

5. Secure Configuration for Hardware and Software on Mobile Devices, Laptops, Workstations and Servers

6. Maintenance, Monitoring and Analysis of Audit Logs

Foundational CIS Controls

7. Email and Web Browser Protections

8. Malware Defenses

9. Limitation and Control of Network Ports, Protocols and Services

10. Data Recovery Capabilities

11. Secure Configuration for Network Devices, such as Firewalls, Routers and Switches

12. Boundary Defense

13. Data Protection

14. Controlled Access Based on the Need to Know

15. Wireless Access Control

16. Account Monitoring and Control

Organizational CIS Controls

17. Implement a Security Awareness and Training Program

18. Application Software Security

19. Incident Response and Management

20. Penetration Tests and Red Team Exercises

https://www.cisecurity.org/controls/cis-controls-list/

- Tabletop Exercises

Six Scenarios to Help Prepare Your Cybersecurity Team

https://www.cisecurity.org/wp-content/uploads/2018/10/Six-tabletop-exercises-FINAL.pdf

- A Measurement Companion to the CIS Critical Security Controls

The CIS Critical Security Controls (

information to help them track progress and to encourage the use of automation

https://www.cisecurity.org/wp-content/uploads/2017/03/A-Measurement-Companion-to-the-CIS-Critical-Security-Controls-VER-6.0-10.15.2015.pdf

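- In forensic science, Locard's exchange principle holds that the perpetrator of a crime will bring something into the crime scene and leave with something from it, and that both can be used as forensic evidence (sometimes stated simply as "every contact leaves a trace").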

https://en.wikipedia.org/wiki/Locard%27s_exchange_principle

The NICE Framework is composed of

Categories (7)

Specialty Areas (33)

Work Roles (52)

Knowledge, Skills, and Abilities (KSAs)

Tasks

https://www.nist.gov/itl/applied-cybersecurity/national-initiative-cybersecurity-education-nice/nice-cybersecurity

- The National Initiative for Cybersecurity Education (NICE) Cybersecurity Workforce Framework provides a blueprint to categorize, organize, and describe cybersecurity work into Categories, Specialty Areas, Work Roles, tasks, and knowledge, skills, and abilities (KSAs). The NICE Framework provides a common language to speak about cyber roles and jobs and helps define personnel requirements in cybersecurity.

https://niccs.us-cert.gov/workforce-development/cyber-security-workforce-framework

- This voluntary Framework

consists of Framework’s

- The Cyber Analytics Repository (CAR) is a knowledge base of analytics developed by MITRE based on the Adversary Tactics, Techniques, and Common Knowledge (ATT&CK™) adversary model.

a hypothesis which explains the idea behind the analytic

references to ATT&CK Techniques and Tactics that the analytic detects

the

a

a unit test

https://car.mitre.org/wiki/Main_Page
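The components listed above (a hypothesis, references to ATT&CK techniques, and a unit test) can be bundled into one object. This Python sketch is hypothetical: the analytic, the event field names, and the process names are made up for illustration and do not reproduce an actual CAR entry or the CAR data model. T1059 is the ATT&CK ID for Command and Scripting Interpreter.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Analytic:
    title: str
    hypothesis: str               # the idea behind the analytic
    attack_techniques: list[str]  # ATT&CK technique IDs the analytic detects
    detect: Callable[[dict], bool]


OFFICE_APPS = {"winword.exe", "excel.exe", "powerpnt.exe"}
SHELLS = {"cmd.exe", "powershell.exe"}


def _basename(path: str) -> str:
    # Lowercase and strip the directory part of a Windows path.
    return path.lower().rsplit("\\", 1)[-1]


def office_spawns_shell(event: dict) -> bool:
    return (
        event.get("action") == "process_create"
        and _basename(event.get("parent_image", "")) in OFFICE_APPS
        and _basename(event.get("image", "")) in SHELLS
    )


analytic = Analytic(
    title="Office application spawning a command shell (hypothetical)",
    hypothesis="Malicious documents often launch a shell; Office apps rarely need to.",
    attack_techniques=["T1059"],
    detect=office_spawns_shell,
)


def test_analytic() -> None:
    """Unit test: one event that should fire and one that should not."""
    suspicious = {
        "action": "process_create",
        "parent_image": r"C:\Program Files\Microsoft Office\WINWORD.EXE",
        "image": r"C:\Windows\System32\cmd.exe",
    }
    benign = {
        "action": "process_create",
        "parent_image": r"C:\Windows\explorer.exe",
        "image": r"C:\Windows\System32\cmd.exe",
    }
    assert analytic.detect(suspicious)
    assert not analytic.detect(benign)


test_analytic()
print("analytic unit test passed")
```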

ATT&CK is useful for understanding security risk against known adversary behavior, for planning security improvements, and verifying

https://attack.mitre.org/wiki/Main_Page

With MITRE’s Navigator tool you can select an actor or malware family. After making the selection, the boxes in the matrix show which techniques the actor or malware has used.

From these techniques, we can learn how well our environments protect against them and where we have gaps. The goal is not to create coverage or signatures for each technique; the matrix helps organizations understand how attackers behave. Having more visibility into their methods leads us to the right

https://securingtomorrow.mcafee.com/mcafee-labs/apply-mitres-attck-model-to-check-your-defenses/
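The Navigator workflow described above is essentially a set comparison: the techniques an actor is known to use versus the techniques your environment can detect or prevent. A Python sketch, where the actor profile and the covered set are illustrative placeholders rather than data exported from Navigator (the IDs themselves are real ATT&CK technique IDs):

```python
from __future__ import annotations

# Illustrative actor profile, not taken from a real ATT&CK group page.
ACTOR_TECHNIQUES = {
    "T1566": "Phishing",
    "T1059": "Command and Scripting Interpreter",
    "T1003": "OS Credential Dumping",
    "T1021": "Remote Services",
}
# Techniques our controls are assumed to detect or prevent.
COVERED = {"T1566", "T1059", "T1190"}


def coverage_report(actor: dict[str, str], covered: set[str]) -> tuple[float, list[str]]:
    used = set(actor)
    gaps = sorted(used - covered)
    percent = 100 * len(used & covered) / len(used)
    return percent, gaps


percent, gaps = coverage_report(ACTOR_TECHNIQUES, COVERED)
print(f"{percent:.0f}% of this actor's techniques covered")
for tid in gaps:
    print(tid, ACTOR_TECHNIQUES[tid])
```

T1190 is covered but not used by this actor, which is why the percentage is computed over the actor's techniques only; the gap list (here T1003 and T1021) is where improvements would be prioritized.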

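- MITRE ATT&CK™ is a globally-accessible knowledge base of adversary tactics and techniques based on real-world observations. The ATT&CK knowledge base is used as a foundation for the development of specific threat models and methodologies.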

https://attack.mitre.org/

- CK is a community-driven, decentralized, small and cross-platform framework helping users to save their knowledge and automate repetitive and often painful tasks in the form of portable, customizable and reusable Python components with a unified API, CLI and JSON

meta MacOS assembling, running and autotuning hackathons

http://cknowledge.org/

- What is the four-eyes principle?

https://www.unido.org/change/faq/what-is-the-four-eyes-principle.html

- The 4-eyes principle (4EP) is a well-known access control and authorization

principle, it is considered is forced
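One common way to enforce the four-eyes principle in software is a maker-checker rule: the person who initiates a sensitive action cannot also approve it, so at least two people have seen it before it runs. A Python sketch; the class and method names are hypothetical.

```python
from __future__ import annotations


class FourEyesAction:
    """A sensitive action that needs a second, independent person to approve it."""

    def __init__(self, description: str, requested_by: str) -> None:
        self.description = description
        self.requested_by = requested_by
        self.approved_by: set[str] = set()
        self.executed = False

    def approve(self, user: str) -> None:
        if user == self.requested_by:
            raise PermissionError("the requester cannot approve their own action")
        self.approved_by.add(user)

    def execute(self) -> None:
        # Requester + at least one distinct approver = two pairs of eyes.
        if not self.approved_by:
            raise PermissionError("a second person must approve first")
        self.executed = True


transfer = FourEyesAction("wire 50,000 to a new supplier", requested_by="alice")
try:
    transfer.approve("alice")  # self-approval is rejected
except PermissionError as err:
    print(err)
transfer.approve("bob")
transfer.execute()
print(transfer.executed)  # True
```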

- secure islands

http://www.secureislands.com/

- Information Security Management: Using BS 10012:2009 to Comply with The Data Protection Act (1998)

Without these characteristics, data cannot

Authentic data is what it purports to be

Useable data can

Data with integrity is complete and unaltered

The 8 Data Protection Principles which Apply to all Personal Data

http://www.dcc.ac.uk/resources/briefing-papers/standards-watch-papers/information-security-management-using-bs-100122009-

- What is BS 10012:2009?

http://shop.bsigroup.com/ProductDetail/?pid=000000000030175849

- At a high-level, both the Clark-Wilson Model and the Biba Model want to do the following three things:

First, they don’t want unauthorized users making changes within a system.

This is one of the core goals of the Biba and Clark-Wilson access control models: to prevent unauthorized modifications to data and so consistently maintain its integrity.

Second, the Biba and

As in, yes the user

Meaning, they don’t do something accidentally or even intentionally, (even though they

Third, they both make

Internal consistency is making sure that numbers

What we just talked about are the high-level concepts of what these two models (Clark-Wilson & Biba) want to do as far as maintaining integrity, but not their specific implementation. That’s where they are different. They both want to uphold integrity, but they go about it in different ways.

Quick Recap of The Biba Model

The Biba Model has these three rules:

No Write Up Rule, which says a subject can’t write data to an object at a higher integrity level.

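No Read Down Rule, which states a subject cannot read data at a lower integrity level.

The Invocation Rule, which says a subject can’t request a service from a subject at a higher integrity level. (Like the Top Secret/Secret/Confidential classification levels used for confidentiality, integrity levels form a hierarchy.)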
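The three Biba rules reduce to comparisons between integrity levels. In this Python sketch the three-level LOW/MEDIUM/HIGH scale is an assumption for illustration; Biba works with any ordered set of integrity levels.

```python
from enum import IntEnum


class Integrity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def can_read(subject: Integrity, obj: Integrity) -> bool:
    # No read down: a subject may not read data of lower integrity.
    return obj >= subject


def can_write(subject: Integrity, obj: Integrity) -> bool:
    # No write up: a subject may not write to an object of higher integrity.
    return obj <= subject


def can_invoke(subject: Integrity, target: Integrity) -> bool:
    # Invocation rule: no requesting service from a higher-integrity subject.
    return target <= subject


print(can_read(Integrity.MEDIUM, Integrity.LOW))    # False (read down)
print(can_write(Integrity.MEDIUM, Integrity.HIGH))  # False (write up)
print(can_read(Integrity.MEDIUM, Integrity.HIGH))   # True
print(can_write(Integrity.MEDIUM, Integrity.LOW))   # True
print(can_invoke(Integrity.LOW, Integrity.HIGH))    # False
```

This is the mirror image of Bell-LaPadula, which forbids reading up and writing down in order to protect confidentiality rather than integrity.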

The Clark-Wilson Model does the same thing, but it does so in a

With Clark-Wilson, instead of using integrity levels like in the Biba model, it uses a stringent set of change control principles and an intermediary.

The beauty of the Clark-Wilson model is that if a subject is trying to access an object, it does so without having a direct connection to it - without having direct access to the object.

It takes external entities

Clark-Wilson Model

Users.

It all starts with users, otherwise known as the subjects, which access the objects. Users are the ones that need the information. I think the books call users the “active agents”.

Transformation Procedures (TPs)

Then we have Transformation Procedures. Think of them as operations the subject is trying to perform.

Is the subject trying to read a file?

Write to a file?

Or

Transformation Procedures are

Constrained Data Items (CDIs)

There’s Constrained Data Items and Unconstrained Data Items.

Objects which belong in the subset of Constrained Data Items are at a higher level of protection.

In a Clark-Wilson Model, there are two types of protections given to data items, constrained and unconstrained.

Objects that are Constrained Data Items are so valuable that, in a Clark-Wilson model, a subject has to go through an intermediary even just to access them.

Unconstrained Data Items (UDIs)

These can

Subjects access UDIs the way they would normally access an object in a networked environment, as if they weren't using a Clark-Wilson model.

Integrity Verification Procedures (IVPs)

IVPs check the internal and external consistency of the CDIs.

IVPs are a way to audit the transformation procedure.

- The Clark-Wilson Model enforces the concept of separation of duties.

When the IVP audits the well-formed transactions of the Transformation Procedures, the IVP is independently performing a duty separate from that of the Transformation Procedure

Separation of duties

https://www.studynotesandtheory.com/single-post/The-Clark-Wilson-Model
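Putting the pieces together: users reach CDIs only through certified TPs, and an independent IVP audits the result. This Python sketch uses a hypothetical two-account example with made-up names and numbers, and loosely treats "no negative balances" as internal consistency and "total matches an external figure" as external consistency. Python cannot actually stop code from touching `cdis` directly, so "no direct access" is only a convention here; a real system would enforce it in the OS or database.

```python
from __future__ import annotations

# Constrained Data Items (CDIs): balances that must stay consistent.
cdis = {"checking": 500, "savings": 1500}
EXPECTED_TOTAL = 2000  # external record the IVP checks against (assumed)

# Access triples: certified (user, transformation procedure, CDI) combinations.
TRIPLES = {
    ("alice", "transfer", "checking"),
    ("alice", "transfer", "savings"),
}
AUDITORS = {"bob"}  # separation of duties: auditors run the IVP, not the TPs


def transfer(src: str, dst: str, amount: int) -> None:
    """TP: a well-formed transaction that moves money without creating or destroying it."""
    if amount <= 0 or cdis[src] < amount:
        raise ValueError("ill-formed transfer")
    cdis[src] -= amount
    cdis[dst] += amount


TPS = {"transfer": transfer}


def run_tp(user: str, tp_name: str, cdi_names: tuple[str, ...], *args) -> None:
    """Users reach CDIs only through a certified TP (the intermediary)."""
    for cdi in cdi_names:
        if (user, tp_name, cdi) not in TRIPLES:
            raise PermissionError(f"{user} is not certified to run {tp_name} on {cdi}")
    TPS[tp_name](*cdi_names, *args)


def run_ivp(user: str) -> bool:
    """IVP: verifies the CDIs are still in a valid state."""
    if user not in AUDITORS:
        raise PermissionError("only an independent auditor may run the IVP")
    return all(v >= 0 for v in cdis.values()) and sum(cdis.values()) == EXPECTED_TOTAL


run_tp("alice", "transfer", ("checking", "savings"), 100)
print(cdis)            # {'checking': 400, 'savings': 1600}
print(run_ivp("bob"))  # True
```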

- Trusted Computer System Evaluation Criteria (TCSEC) is a United States Government Department of Defense (DoD) standard that sets basic requirements for assessing the effectiveness of computer security controls built into a computer system. The TCSEC

was used to evaluate

https://en.wikipedia.org/wiki/Trusted_Computer_System_Evaluation_Criteria

The documents and guidelines were developed to evaluate

A trusted system has undergone testing and validation to a specific standard.

Assurance is freedom from doubt and a level of confidence that a system will perform as required every time.

When a developer prepares to sell a system, he must have a way to measure the system’s features and abilities.

The buyer, when preparing to make a purchase, must have a way to measure the system’s effectiveness and benchmark its abilities.

The Rainbow Series

The rainbow series

The Orange Book: Trusted Computer System Evaluation Criteria

The Orange Book’s official name is the Trusted Computer System Evaluation Criteria. As noted,

A—Verified protection.

B—Mandatory security. A B-rated system has mandatory protection of the TCB.

C—Discretionary protection. A C-rated system provides discretionary protection of the TCB.

D—Minimal protection. A D-rated system

http://www.pearsonitcertification.com/articles/article.aspx?p=1998558&seqNum=5

- What Is Access Control?

Access control - Granting or denying approval to use specific resources; it is controlling access

Physical access control - Fencing, hardware door locks, and mantraps that limit contact with devices

Technical access control - Technology restrictions that limit users on computers from accessing data

Access control has

Four standard access control models

Specific practices used to enforce access control

Access Control Terminology

Identification - Presenting credentials (Example: delivery driver presenting employee badge)

Authentication - Checking credentials (Example: examining the delivery driver’s badge)

Authorization - Granting permission to take action (Example: allowing delivery driver to pick up package)

Basic Steps In Access Control

Identification - User enters username

Authentication - User provides password

Authorization - User

Access - User allowed to access specific data

Impose Technical Access Control

Object - Specific resource (Example: file or hardware device)

Subject - User or process functioning on behalf of a user (Example: computer user)

Operation - Action taken by the subject over an object (Example: deleting

Access Control Models

Access control model - Hardware and software

Access control models used by custodians for access control are neither created nor installed by custodians or users;

Four major access control models

Discretionary Access Control (DAC)

Discretionary Access Control (DAC) - Least restrictive model

Every object has an owner

Owners can create and access their objects freely

Owner can give permissions to other subjects over these objects

DAC used on operating systems like UNIX and Microsoft Windows

DAC has two significant weaknesses:

DAC relies on decisions by end-user to set

Subject’s permissions will

Mandatory Access Control (MAC)

Mandatory Access Control (MAC) - Opposite of DAC and the most restrictive access control model

MAC assigns users’ access controls strictly according to

Mandatory Access Control (MAC): Elements

Two key elements to MAC:

Labels - Every entity is an object (laptops, files, projects, and so on) and is assigned a classification label (confidential, secret, and top secret), while subjects are assigned a privilege label (a clearance)

Levels - Hierarchy based on labels

MAC grants permissions by matching object labels with subject labels based on their respective levels
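Matching labels by level can be sketched as a comparison of a subject's clearance with an object's classification. This Python example assumes the three levels named above and implements the two Bell-LaPadula-style checks (no read up, no write down); the users and files are hypothetical.

```python
from enum import IntEnum


class Level(IntEnum):
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


# Labels are assigned by the system, not chosen by owners (hypothetical examples).
object_labels = {"budget.xlsx": Level.CONFIDENTIAL, "ops-plan.docx": Level.TOP_SECRET}
subject_clearances = {"alice": Level.SECRET}


def mac_can_read(subject: str, obj: str) -> bool:
    # No read up: clearance must be at least the object's classification.
    return subject_clearances[subject] >= object_labels[obj]


def mac_can_write(subject: str, obj: str) -> bool:
    # No write down: prevents copying secrets into lower-classified objects.
    return subject_clearances[subject] <= object_labels[obj]


print(mac_can_read("alice", "budget.xlsx"))    # True
print(mac_can_read("alice", "ops-plan.docx"))  # False
print(mac_can_write("alice", "budget.xlsx"))   # False
```

Unlike DAC, nothing in this sketch lets the owner of `budget.xlsx` change who may read it; only the labels decide.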

Mandatory Access Control (MAC): Major Implementations

Lattice model - Subjects and objects

Bell-LaPadula (BLP) model

Biba Integrity model - Goes beyond the BLP model, which protects confidentiality, by adding protection of data integrity

Mandatory Integrity Control (MIC) - Based on Biba model, MIC ensures data integrity by controlling access to securable objects

Role Based Access Control (RBAC)

Role Based Access Control (RBAC) - Considered more “real-world” access control than other models because of access based on

Instead of setting permissions for each user or group, RBAC assigns permissions to particular roles in the organization and then assigns users to those roles
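The two-step mapping (permissions to roles, users to roles) can be sketched with two dictionaries. The roles, users, and permission names in this Python example are hypothetical.

```python
from __future__ import annotations

ROLE_PERMISSIONS = {
    "teller": {"view_account", "deposit"},
    "loan_officer": {"view_account", "approve_loan"},
    "auditor": {"view_account", "view_audit_log"},
}
USER_ROLES = {"alice": {"teller"}, "bob": {"loan_officer", "auditor"}}


def permissions_for(user: str) -> set[str]:
    # A user's permissions are the union of the permissions of their roles.
    perms: set[str] = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS[role]
    return perms


def rbac_allows(user: str, permission: str) -> bool:
    return permission in permissions_for(user)


print(rbac_allows("alice", "approve_loan"))  # False
print(rbac_allows("bob", "view_audit_log"))  # True
```

If Alice moves to the loan department, only her entry in `USER_ROLES` changes; no permission on any resource has to be edited, which is what makes RBAC practical in real organizations.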

Rule-Based Access Control (RBAC)

Rule-Based Access Control (RBAC) - Dynamically assign roles to subjects based on a set of rules defined by the custodian

Each resource object contains a set of access properties based on rules

When a user attempts to access that resource, system checks rules
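A rule-based check evaluates conditions attached to the resource at request time. This Python sketch models the resource-side rule check described in the last two points, not dynamic role assignment; the two rules (business hours and an internal 10.0.0.0/8 network) and the request fields are hypothetical.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field
from typing import Callable

Rule = Callable[[dict], bool]


def business_hours(ctx: dict) -> bool:
    return 8 <= ctx["hour"] < 18


def from_internal_network(ctx: dict) -> bool:
    return ipaddress.ip_address(ctx["source_ip"]) in ipaddress.ip_network("10.0.0.0/8")


@dataclass
class Resource:
    name: str
    rules: list[Rule] = field(default_factory=list)  # access properties set by the custodian


def rule_based_allows(resource: Resource, ctx: dict) -> bool:
    # Every rule attached to the resource must pass for this request.
    return all(rule(ctx) for rule in resource.rules)


payroll = Resource("payroll-db", rules=[business_hours, from_internal_network])
print(rule_based_allows(payroll, {"hour": 10, "source_ip": "10.1.2.3"}))     # True
print(rule_based_allows(payroll, {"hour": 22, "source_ip": "10.1.2.3"}))     # False
print(rule_based_allows(payroll, {"hour": 10, "source_ip": "203.0.113.7"}))  # False
```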

https://www.emaze.com/@ALQOIRLR

- Confidentiality, integrity and availability, also known as the CIA triad, is a model designed to guide policies for information security within an organization. The model is also sometimes referred to as the AIC triad (availability, integrity and confidentiality) to avoid confusion with the Central Intelligence Agency.

In this context, confidentiality is a set of rules that limits access to information, integrity is the assurance that the information is trustworthy and accurate, and availability is a guarantee of reliable access to the information by authorized people.

http://whatis.techtarget.com/definition/Confidentiality-integrity-and-availability-CIA

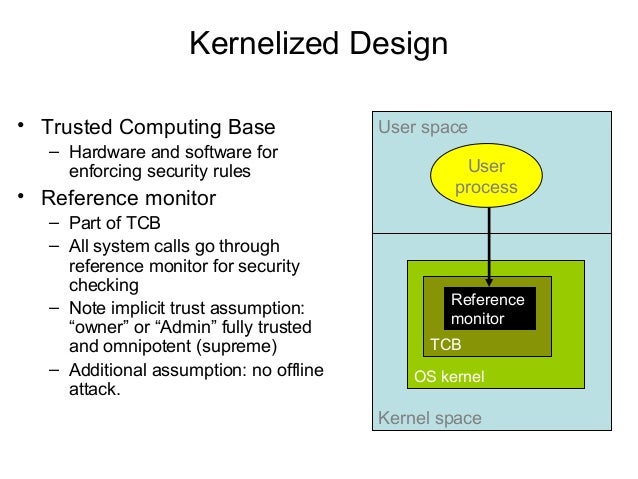

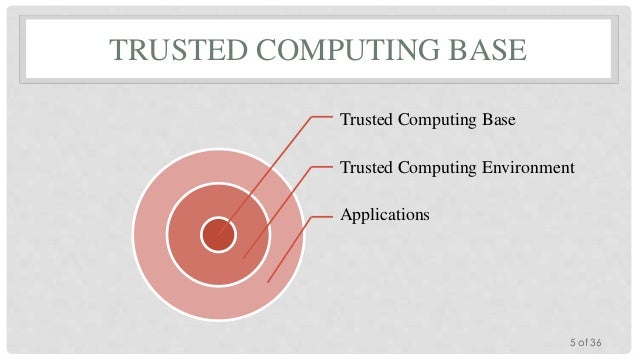

- Security Architecture

Although a robust architecture is a good start, real security requires that you have a security architecture in place to control processes and applications. The concepts related to security architecture include

Protection rings

Trusted computing base (TCB)

Open and closed systems

Security modes

Recovery procedures

Protection Rings

The operating system knows who and what to trust by relying on rings of protection. Rings of protection work much like your network of family, friends, coworkers, and acquaintances. The people who are closest to you, such as your spouse and family, have the highest level of trust.

In reality, the protection rings are conceptual.

The first implementation of such a system was in MIT’s Multics time-shared operating system.

The protection ring model provides the operating system with various levels at which to execute code or to restrict that code’s access. The rings provide much greater granularity than a system that just operates in user and privileged mode. As code moves toward the outer bounds of the model, the layer number increases and the level of trust decreases

Layer 0—The most trusted level. The operating system kernel

Layer 1—Contains nonprivileged portions of the operating system.

Layer 2—Where I/O drivers, low-level operations, and utilities

Layer 3—Where applications and processes operate. This is the level at which individuals usually interact with the operating system.
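The ordering of the rings can be sketched as a simple comparison: code may act directly on its own ring or on less-trusted ones, but must go through a controlled gate (on real systems, mechanisms such as system calls) to reach a more trusted ring. This is a conceptual Python model, not how a CPU enforces rings; the `gate_allows` flag is a hypothetical stand-in for the gate's own checks.

```python
from enum import IntEnum


class Ring(IntEnum):
    KERNEL = 0             # layer 0: most trusted
    OS_NONPRIVILEGED = 1   # layer 1
    DRIVERS_UTILITIES = 2  # layer 2
    APPLICATIONS = 3       # layer 3: least trusted


def can_access_directly(caller: Ring, target: Ring) -> bool:
    # Lower number = more trusted; code may touch its own ring or outer rings.
    return caller <= target


def call(caller: Ring, target: Ring, gate_allows: bool = False) -> str:
    """Crossing inward to a more trusted ring needs a controlled entry point."""
    if can_access_directly(caller, target):
        return "direct"
    if gate_allows:
        return "via gate"
    raise PermissionError(f"{caller.name} may not enter {target.name} directly")


print(call(Ring.KERNEL, Ring.APPLICATIONS))                    # direct
print(call(Ring.APPLICATIONS, Ring.KERNEL, gate_allows=True))  # via gate
try:
    call(Ring.APPLICATIONS, Ring.KERNEL)
except PermissionError as err:
    print(err)
```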

Most systems that

Protection rings are part of the trusted computing base concept.

Trusted Computing Base

The trusted computing base (TCB) is the sum of all the protection mechanisms within a computer and

This includes hardware, firmware, software, controls, and processes.

The TCB is responsible for confidentiality and integrity.

The TCB is the only portion of a system that operates at a high level of trust.

It monitors four basic functions:

Input/output operations—I/O operations are a security concern because operations from the outermost rings might need to interface with rings of greater protection. These cross-domain communications must

Execution domain switching—Applications running in one domain or level of protection often invoke applications or services in other domains. If these requests are to obtain more sensitive data or service,

Memory protection—To truly be secure, the TCB must monitor memory references to verify confidentiality and integrity in storage.

Process activation—Registers, process status information, and file access lists are vulnerable to loss of confidentiality in a multiprogramming environment.

http://www.pearsonitcertification.com/articles/article.aspx?p=1998558&seqNum=3

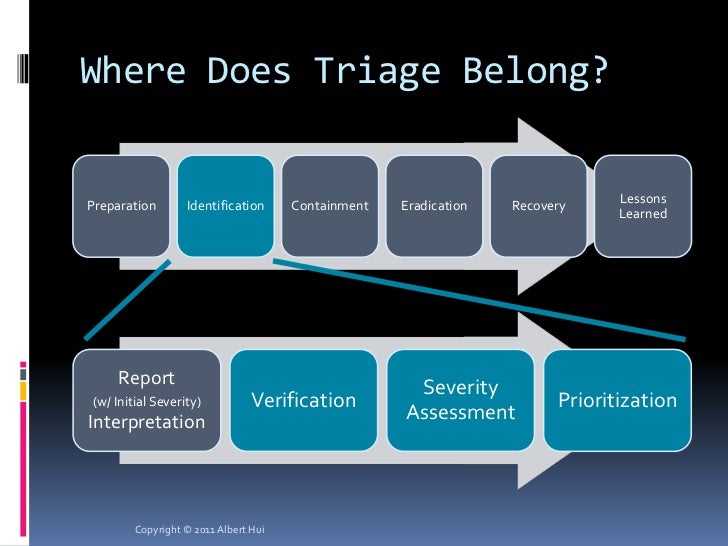

- Incident Response


Triage is the first post-detection incident response process any responder will execute to open an incident or dismiss it as a false positive.

Structuring an efficient and accurate triage process will reduce analyst fatigue and ensure that only valid alerts are promoted

https://www.syncurity.net/2015/10/07/ir-management-process-triage/

- Effective security alert triage

the process of quickly and accurately determining the severity of a threat

is a must-have component for every organization

It’s critical that analysts quickly differentiate between security alerts that are actual threats to the organization and those that aren’t.

which alerts require their immediate attention

which ones the team can safely ignore

https://www.lastline.com/blog/effective-security-alert-triage/
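Triage is essentially a sorting decision: which alerts need immediate attention and which can be set aside. This Python sketch assumes a simple score (tool-reported severity multiplied by the criticality of the affected asset), a fixed threshold, and a list of known-benign rules; all three are hypothetical tuning choices, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Alert:
    id: str
    rule: str
    severity: int           # 1 (low) to 5 (critical), as reported by the detection tool
    asset_criticality: int  # 1 (lab box) to 5 (crown jewel), from the asset inventory


KNOWN_BENIGN_RULES = {"scheduled-backup-large-transfer"}  # tuned-out false positives
ESCALATION_THRESHOLD = 12  # assumed cut-off on the score below


def score(alert: Alert) -> int:
    return alert.severity * alert.asset_criticality


def triage(alerts: list[Alert]) -> tuple[list[Alert], list[Alert]]:
    """Split alerts into (escalate, dismiss), most urgent first."""
    escalate, dismiss = [], []
    for alert in alerts:
        if alert.rule in KNOWN_BENIGN_RULES or score(alert) < ESCALATION_THRESHOLD:
            dismiss.append(alert)
        else:
            escalate.append(alert)
    escalate.sort(key=score, reverse=True)
    return escalate, dismiss


alerts = [
    Alert("A1", "malware-detected", severity=5, asset_criticality=5),
    Alert("A2", "port-scan", severity=2, asset_criticality=2),
    Alert("A3", "scheduled-backup-large-transfer", severity=4, asset_criticality=5),
    Alert("A4", "impossible-travel-login", severity=4, asset_criticality=4),
]
escalate, dismiss = triage(alerts)
print([a.id for a in escalate])  # ['A1', 'A4']
print([a.id for a in dismiss])   # ['A2', 'A3']
```

Dismissed alerts would normally still be logged, so that a tuning mistake in the benign list can be found later.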

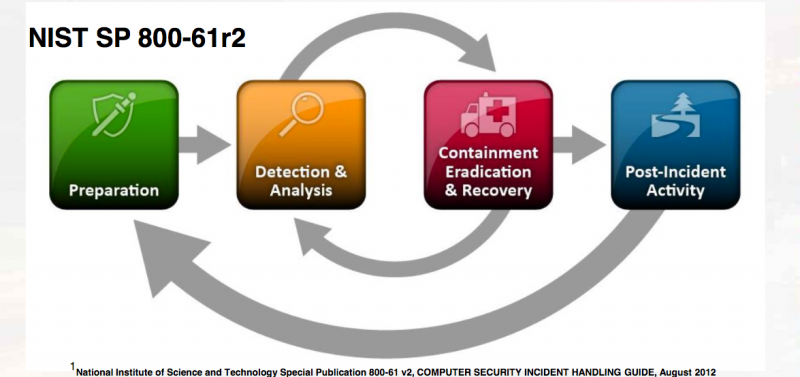

Incident preparation

The defined incident response standards by NIST and SANS both begin with preparation, which includes having the required tools and logs before an incident occurs.

Incident detection and analysis

An incident such as an intrusion or malware outbreak should result in

This identification or analysis includes scoping an incident, defining the proportionate response to the impact of an incident, and determining the appropriate scale of the investigation. Scoping should answer the question “what is the goal of the investigation?” or

Incident containment

The containment and remediation plan must be based on objective

https://community.jisc.ac.uk/blogs/csirt/article/incident-response-triage

The incident response phases are:

Preparation

Identification

Containment

Eradication

Recovery

Lessons Learned

https://www.securitymetrics.com/blog/6-phases-incident-response-plan

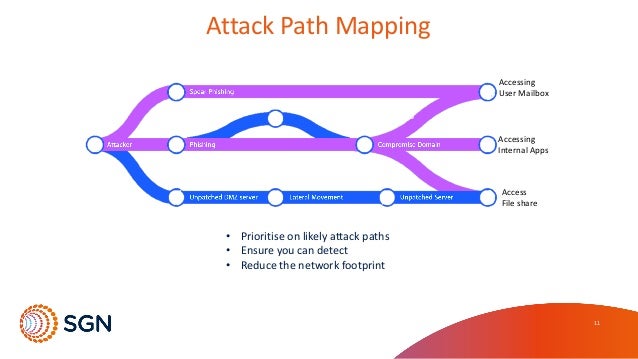

Vulnerability-centric testing has limitations and drawbacks

manage a list of Highs, Mediums and Lows which only ever grows, however much remediation effort gets thrown at it

many business managers don't really understand the language of vulnerabilities

increasing security budgets in terms of lists of vulnerabilities

Attack Path Mapping (APM) is an asset-centric approach that helps prioritise investments in controls, mitigations, and remediations

It works by starting with the assets that matter most, or the risks that would hurt the business most

These are then used to map, and validate, all the routes an attacker could use to reach those things

This drives priorities: targeted, precision improvements in terms of what vulnerabilities to fix and what attack paths to close, how to channel potential attackers, what controls to strengthen, and where detection needs improving

You can make it harder for attackers to damage the business in the ways that would do most harm

It helps you to detect attackers when they try, and to respond effectively

When the business comes looking for justification of your security spend, you can immediately talk about the things that matter most to them and how you’re going to protect those things.

APM will need your people’s time

To be cost-effective it needs to be done as a white-box, collaborative exercise

If you aren’t yet sure what your organisation’s most important assets are, you might want to consider doing another exercise first, such as a Business Impact Assessment or a Security Maturity Review, which helps define and agree what is most important to your organisation.

If you feel confident your primary assets are already identified, the attack paths well secured, and that you're already prepared to detect and respond to attempted attacks, you might want to consider a Targeted Attack Simulation, which will test and further enhance the organisation’s ability to prevent, detect and respond.

https://www.mwrinfosecurity.com/our-thinking/what-is-attack-path-mapping/
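Mapping "all the routes an attacker could use" to a key asset can be sketched as path enumeration over a reachability graph. In this Python example the hosts and edges are hypothetical; in a real APM exercise they would come from the collaborative, white-box review described above rather than being typed in by hand.

```python
from collections import Counter

# Hypothetical network: an edge means "an attacker on A can reach B".
GRAPH = {
    "internet": ["web_server", "workstation"],
    "web_server": ["app_server"],
    "workstation": ["domain_controller", "app_server"],
    "domain_controller": ["crm_database"],
    "app_server": ["crm_database"],
}


def attack_paths(graph, start, goal, path=None):
    """Depth-first enumeration of every simple path from start to goal."""
    path = (path or []) + [start]
    if start == goal:
        return [path]
    paths = []
    for nxt in graph.get(start, []):
        if nxt not in path:  # skip hosts already on this path to avoid loops
            paths.extend(attack_paths(graph, nxt, goal, path))
    return paths


# Start from the asset that matters most and map every route to it.
paths = attack_paths(GRAPH, "internet", "crm_database")
for p in paths:
    print(" -> ".join(p))

# Hosts that appear on many paths are good places to close routes or add detection.
through = Counter(node for p in paths for node in p[1:-1])
print(through.most_common())
```

Enumerating every path grows quickly on large graphs; it is shown here because it mirrors the "map and validate all the routes" step directly.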

NIST’s technique offers a standard model for measuring security in a quantifiable, comparable way.

Called Probabilistic Attack Graphs, it charts the paths through a network used to exploit multiple vulnerabilities, assigning a risk value to each vulnerability that takes into account how easy or likely it is to be exploited at that point in the path.

These metrics can be used to objectively assess the security risks and to evaluate the return on a cybersecurity investment

One limitation of an attack graph is that it assumes a vulnerability can always be exploited

But there is a range of probabilities that vulnerabilities at different steps in the path can be exploited, depending on the skill of the attacker and the difficulty of the exploit

Attack graphs typically show only what is possible rather than what is likely.

Probabilistic attack graphs use the industry standard Common Vulnerability Scoring System as a starting point for quantifying risk, also taking into account the likelihood that a given vulnerability can be exploited given

With an objective metric, trade-offs between security costs and benefits can be analyzed, so organizations can spend their money on security measures that pay off.

An automatic attack-graph generator can identify obscure attack possibilities arising from intricate security interactions within an enterprise network that could be easily overlooked by a human analyst.

https://gcn.com/Articles/2011/10/04/NIST-attack-graphs-measure-cyber-risks.aspx

Composition of vulnerabilities can be modeled usingprobabilistic attack graphs, whichshow all paths of attacks that allow incrementalnetwork penetration.

Attacklikelihoods are propagated through the attack graph, yielding a novelway to measurethe security risk of enterprise systems

This metric for risk mitigation analysis is used to maximizethe securityof enterprise systems

Thismethodology based on probabilistic attackgraphs canbe used to evaluate and strengthenthe overall security of enterprise networks.

Federal agenciesseekinginformation on how to useprobabilistic attack graphs for security risk analysisof their enterprise networks

The objective of our research was to develop a standard model for measuring security of computer networks. A standard model will enable usto answer questions such as

“Are we more secure than yesterday?”

“How does the security of one network configuration compare with another?”

the challenges for security risk analysis of enterprise networks

Security vulnerabilities are rampant:

Security vulnerabilities are rampant: CERT1reports about a hundred new security vulnerabilities each week. It becomes difficult to manage the security of an enterprise network (with hundreds of hosts and different operating systems and applications on each host) in the presence of software vulnerabilities that can be exploited .

Attackers launch complex multi-step cyber attacks: Cyber attackers can launch multi-step and multi-host attacks that incrementally penetrate the network with the goal of eventually compromising critical systems.

Current attack detection methods cannot deal with the complexity of attacks: Traditional approaches to detecting attacks (using an Intrusion Detection System) have problems such as too many false positives, limited scalability, and limits on detecting attacks.

With an objective security metric, decision makers can avoid overinvesting in security measures that do not pay off, or underinvesting and risking devastating consequences.
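The cost-benefit reasoning can be sketched in Python by treating risk as probability times impact (as in the risk definition at the start of these notes) and scoring each candidate measure by Return on Security Investment (ROSI), a commonly used metric swapped in here for illustration; it is not the metric defined in the NIST report. All mitigation names, probabilities, and costs are hypothetical.

```python
def expected_loss(p_compromise, impact):
    """Risk as probability times impact."""
    return p_compromise * impact


def rosi(baseline_p, residual_p, impact, cost):
    """Return on security investment: (risk reduction - cost) / cost."""
    reduction = expected_loss(baseline_p, impact) - expected_loss(residual_p, impact)
    return (reduction - cost) / cost


def rank_mitigations(baseline_p, impact, mitigations):
    """Rank (name, residual_probability, cost) tuples by ROSI, best first."""
    scored = [
        (name, rosi(baseline_p, residual_p, impact, cost))
        for name, residual_p, cost in mitigations
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical figures: a 50% chance of losing an asset worth 1,000,000.
ranking = rank_mitigations(
    0.5,
    1_000_000,
    [
        ("deploy_waf", 0.3, 100_000),
        ("patch_web_server", 0.1, 50_000),
        ("replace_db_cluster", 0.45, 200_000),
    ],
)
for name, score in ranking:
    print(f"{name}: ROSI {score:.2f}")
```

A negative ROSI, as for the hypothetical database replacement, flags overinvestment: the measure costs more than the risk it removes.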

Attack graphs model how multiple vulnerabilities can be combined to stage an attack.

They represent system states using a collection of security-related conditions, such as the existence of a vulnerability on a particular host or the connectivity between different hosts.

Vulnerability exploitation is modeled as a transition between system states.
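The state-transition view can be sketched as a search problem. In this minimal Python sketch, a system state is a set of security-related conditions and each exploit is a rule with preconditions and postconditions; breadth-first search finds a shortest exploit sequence that reaches a goal condition. The condition and exploit names are hypothetical, the sketch assumes conditions are monotonic (an attacker never loses a foothold), and it does not reproduce the algorithm of any particular attack-graph tool.

```python
from collections import deque


def successors(state, exploits):
    """Yield (exploit_name, next_state) for every exploit enabled in `state`."""
    for name, pre, post in exploits:
        # Enabled if all preconditions hold and it would add something new.
        if pre <= state and not post <= state:
            yield name, state | post


def find_attack_path(initial, goal, exploits):
    """Breadth-first search over system states.

    Returns the shortest list of exploit names that reaches a state containing
    `goal`, or None if the goal is unreachable.
    """
    start = frozenset(initial)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if goal in state:
            return path
        for name, nxt in successors(state, exploits):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [name]))
    return None


# Hypothetical conditions and exploits (pre- and postconditions as frozensets).
EXPLOITS = [
    ("web_rce", frozenset({"internet_access", "web_vuln"}), frozenset({"user_on_web"})),
    ("local_privesc", frozenset({"user_on_web", "kernel_vuln_on_web"}), frozenset({"root_on_web"})),
    ("pivot_to_db", frozenset({"root_on_web", "web_can_reach_db"}), frozenset({"root_on_db"})),
]
START = {"internet_access", "web_vuln", "kernel_vuln_on_web", "web_can_reach_db"}
print(find_attack_path(START, "root_on_db", EXPLOITS))
# ['web_rce', 'local_privesc', 'pivot_to_db']
```

Enumerating whole states grows exponentially with the number of conditions, so this brute-force search only suits toy examples.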

Tools for Generating Attack Graphs

TVA (Topological Analysis of Network Attack Vulnerability)

NETSPA (A Network Security Planning Architecture)

MulVAL (Multihost, Multistage Vulnerability Analysis)

https://nvlpubs.nist.gov/nistpubs/Legacy/IR/nistir7788.pdf

- Incident Response Triage


Incident preparation

The incident response standards defined by NIST and SANS both begin with preparation, which includes having the required tools and logs in place before an incident occurs.

Incident detection and analysis

An incident such as an intrusion or malware outbreak should be identified and analyzed.

This identification or analysis includes scoping the incident, defining a response proportionate to its impact, and determining the appropriate scale of the investigation. Scoping should answer the question “What is the goal of the investigation?” or …

Incident containment

https://community.jisc.ac.uk/blogs/csirt/article/incident-response-triage

- 6 Phases in the Incident Response Plan

The incident response phases are:

Preparation

Identification

Containment

Eradication

Recovery

Lessons Learned
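The six phases can be written down as an ordered type and mapped onto the four phases of NIST SP 800-61 Rev. 2, which shows how both processes start with preparation. This Python sketch treats the process as a cycle, so that lessons learned feed back into preparation; that wrap-around is a modeling choice for illustration.

```python
from enum import Enum


class Phase(Enum):
    """The six incident response phases listed above, in order."""
    PREPARATION = 1
    IDENTIFICATION = 2
    CONTAINMENT = 3
    ERADICATION = 4
    RECOVERY = 5
    LESSONS_LEARNED = 6


# The phase of NIST SP 800-61 Rev. 2 (four phases) that covers each one.
NIST_PHASE = {
    Phase.PREPARATION: "Preparation",
    Phase.IDENTIFICATION: "Detection and Analysis",
    Phase.CONTAINMENT: "Containment, Eradication, and Recovery",
    Phase.ERADICATION: "Containment, Eradication, and Recovery",
    Phase.RECOVERY: "Containment, Eradication, and Recovery",
    Phase.LESSONS_LEARNED: "Post-Incident Activity",
}


def next_phase(phase):
    """Return the following phase; Lessons Learned wraps back to Preparation."""
    members = list(Phase)
    return members[(members.index(phase) + 1) % len(members)]


for phase in Phase:
    print(f"{phase.name:<16} -> NIST: {NIST_PHASE[phase]}")
```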

https://www.securitymetrics.com/blog/6-phases-incident-response-plan

Intelligence Concepts — The SANS Incident Response Process

The Big Picture of the Security Incident Cycle

- Security Incident Response Industry Standards

- NIST

- US-CERT

- SANS

- VERIS Community

- An overview of Attack Path Mapping, an asset-centric approach that helps prioritise security spending

Vulnerability-centric testing has limitations and drawbacks:

Teams end up managing a list of Highs, Mediums and Lows that only ever grows, however much remediation effort gets thrown at it.

Many business managers don't really understand the language of vulnerabilities.

It is hard to justify increasing security budgets in terms of lists of vulnerabilities.

Attack Path Mapping (APM) is an asset-centric approach that helps prioritise investments in controls, mitigations, and remediations.

It works by starting with the assets that matter most, or the risks that would hurt the business most.

These are then used to map, and validate, all the routes an attacker could use to reach those assets.

This drives priorities.
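The asset-first idea can be sketched in Python: start from a critical asset, enumerate every route from the attacker's entry points to it, and count how often each intermediate system appears, since a system on many routes is a candidate choke point for controls. The network, the node names, and the choke-point heuristic are assumptions for illustration, and the sketch does not reproduce the validation step that APM also includes.

```python
from collections import Counter


def all_paths(graph, start, target, path=None):
    """Yield every simple path from start to target (depth-first search)."""
    path = (path or []) + [start]
    if start == target:
        yield path
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # never revisit a node on the current path
            yield from all_paths(graph, nxt, target, path)


def map_attack_paths(graph, entry_points, asset):
    """Return all paths to `asset` and how many pass through each intermediate node."""
    paths = [p for entry in entry_points for p in all_paths(graph, entry, asset)]
    counts = Counter(node for p in paths for node in p[1:-1])
    return paths, counts


# Hypothetical "attacker can move from X to Y" relationships.
GRAPH = {
    "phishing_email": ["workstation"],
    "vpn": ["workstation", "jump_host"],
    "workstation": ["file_server", "jump_host"],
    "file_server": ["payments_db"],
    "jump_host": ["payments_db"],
}
paths, counts = map_attack_paths(GRAPH, ["phishing_email", "vpn"], "payments_db")
print(len(paths), counts.most_common())
# 5 [('workstation', 4), ('jump_host', 3), ('file_server', 2)]
```

Here the workstation sits on four of the five routes, so hardening it would cut the most paths. The number of simple paths can grow exponentially in larger networks, so real mapping needs pruning.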

You can make it harder for attackers to damage the business in …

It helps you detect attackers when they try, and respond effectively.

When the business comes looking for …

APM will need your people’s time.

To be cost-effective, it needs to …

If you aren’t yet sure what your organisation’s most important assets are, …

If you feel confident your primary assets …

https://www.mwrinfosecurity.com/our-thinking/what-is-attack-path-mapping/

- As attacks and networks become more complex, the number of possible attack scenarios grows exponentially, and evaluating real-world risk levels becomes difficult.

NIST’s technique offers a standard model for measuring security in a quantifiable, comparable way.

The technique, called Probabilistic Attack Graphs, charts the paths through a network that an attacker can use to exploit multiple vulnerabilities, assigning each vulnerability a risk value that takes into account how easy or likely it is to exploit.

One limitation of an attack graph is that it assumes a vulnerability can always be exploited.

In reality, there is a range of probabilities that it will be.

Attack graphs typically show only what is possible rather than what is likely.

Probabilistic attack graphs use the industry-standard Common Vulnerability Scoring System (CVSS) as a starting point for quantifying risk, also taking into account the likelihood that …
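Turning CVSS data into the per-vulnerability likelihoods a probabilistic attack graph needs can be sketched in Python with the CVSS v3.1 exploitability sub-score (8.22 × AV × AC × PR × UI). Normalizing that sub-score to the range (0, 1] is an assumption made here for illustration; the NIST work may use a different CVSS version or mapping, and this sketch ignores the impact metrics and rejects Scope: Changed vectors.

```python
# CVSS v3.1 exploitability weights; PR values apply when Scope is Unchanged.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}
UI = {"N": 0.85, "R": 0.62}

# Highest possible exploitability sub-score (about 3.887).
MAX_EXPLOITABILITY = 8.22 * AV["N"] * AC["L"] * PR["N"] * UI["N"]


def exploit_probability(vector):
    """Map a CVSS v3.1 base vector to an assumed exploit probability in (0, 1].

    ASSUMPTION: dividing the exploitability sub-score by its maximum is an
    illustrative normalization, not one prescribed by NIST.
    """
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    if metrics.get("S") != "U":
        raise ValueError("sketch only handles Scope: Unchanged (S:U)")
    score = 8.22 * AV[metrics["AV"]] * AC[metrics["AC"]] * PR[metrics["PR"]] * UI[metrics["UI"]]
    return score / MAX_EXPLOITABILITY


easy = exploit_probability("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
hard = exploit_probability("CVSS:3.1/AV:L/AC:H/PR:L/UI:R/S:U/C:H/I:H/A:H")
print(f"{easy:.2f} {hard:.2f}")  # 1.00 0.20
```

Values like these could serve as the per-exploit probabilities in a probabilistic attack graph, which then combines them along each path.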


https://gcn.com/Articles/2011/10/04/NIST-attack-graphs-measure-cyber-risks.aspx

To more accurately assess the security of enterprise systems, one must understand how vulnerabilities can be combined and exploited to stage an attack.


https://nvlpubs.nist.gov/nistpubs/Legacy/IR/nistir7788.pdf

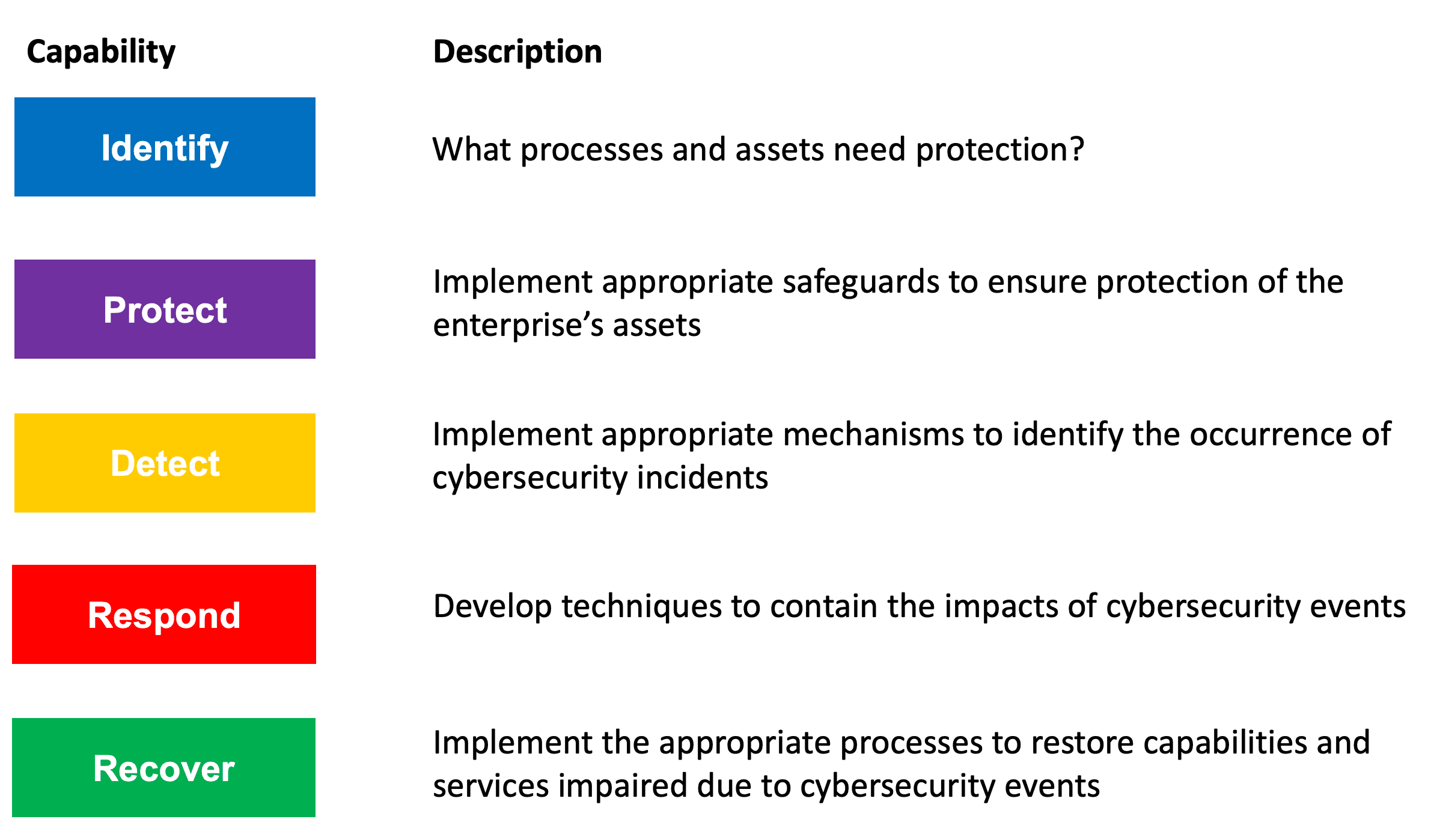

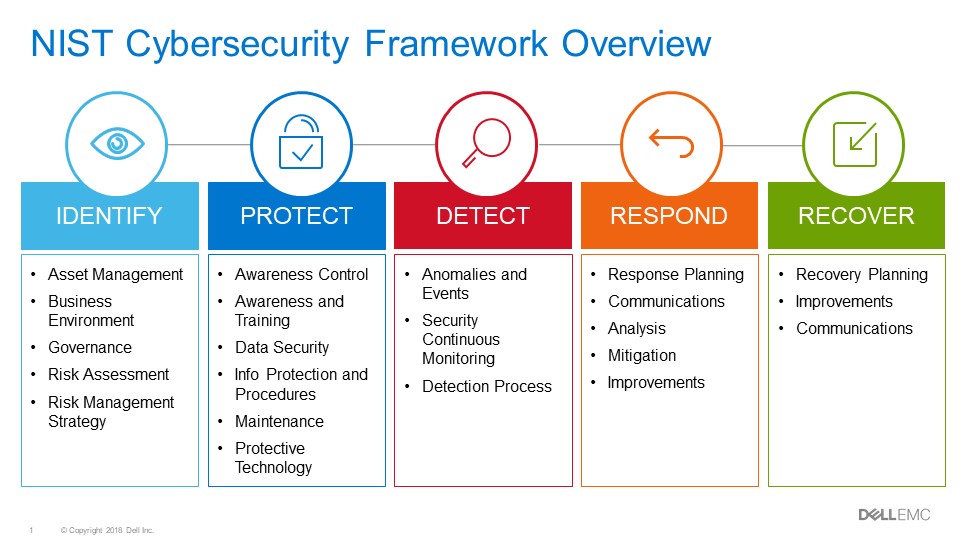

- Cybersecurity Framework Version 1.1

https://www.nist.gov/cyberframework/framework

- WHAT IS THE FRAMEWORK?

The Framework is voluntary guidance, based on existing standards, guidelines, and practices, for organizations to better manage and reduce cybersecurity risk. In addition to helping organizations manage and reduce risks, it was designed to foster risk and cybersecurity management communications amongst both internal and external organizational stakeholders.

AN INTRODUCTION TO THE COMPONENTS OF THE FRAMEWORK

The Cybersecurity Framework consists of three main components: the Core, Implementation Tiers, and Profiles.

The Framework Core provides a set of desired cybersecurity activities and outcomes using common language that is easy to understand. The Core guides organizations in managing and reducing their cybersecurity risks in a way that complements an organization’s existing cybersecurity and risk management processes.

The Framework Implementation Tiers assist organizations by providing context on how an organization views cybersecurity risk management. The Tiers guide organizations to consider the appropriate level of rigor for their cybersecurity program and are often used as a communication tool to discuss risk appetite, mission priority, and budget.

Framework Profiles are an organization’s unique alignment of their organizational requirements and objectives, risk appetite, and resources against the desired outcomes of the Framework Core. Profiles are primarily used to identify and prioritize opportunities for improving cybersecurity at an organization.
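Comparing a Current Profile with a Target Profile to prioritize improvements can be sketched in Python. The subcategory IDs follow the Core's naming in Version 1.1 (for example, PR.AC-1 under Protect), but the numeric scores, the 0-4 scale, and the largest-gap-first ordering are assumptions; a real prioritization would also weigh risk appetite and budget, as the Tiers description notes.

```python
# Hypothetical Current and Target Profiles keyed by Framework Core
# subcategory IDs. The 0-4 achievement scale is an assumption for
# illustration; the Framework does not prescribe numeric scores.
current_profile = {"ID.AM-1": 3, "PR.AC-1": 2, "DE.CM-1": 1, "RS.RP-1": 1}
target_profile = {"ID.AM-1": 3, "PR.AC-1": 4, "DE.CM-1": 3, "RS.RP-1": 2}


def profile_gaps(current, target):
    """Return (subcategory, gap) pairs where current falls short of target.

    Largest gaps come first; equal gaps keep subcategory-ID order.
    """
    shortfalls = [
        (sub, target[sub] - current.get(sub, 0))
        for sub in sorted(target)
        if target[sub] > current.get(sub, 0)
    ]
    return sorted(shortfalls, key=lambda item: item[1], reverse=True)


for sub, gap in profile_gaps(current_profile, target_profile):
    print(f"{sub}: raise by {gap}")
```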

https://www.nist.gov/cyberframework/getting-started