- The term “hyperscale” refers to a computer architecture’s ability to scale in order to respond to increasing demand.

Computers rely on resources within a

The goal of scaling is to continue building a robust system, whether that system revolves around the cloud, big data, or distributed storage, or, as is increasingly likely these days, a combination of all three.

https://www.bmc.com/blogs/hyperscale-data-center/

- A hyperscale cloud data center looks different from an enterprise data center, or even a large hosting provider.

Here are

https://www.computerworld.com/article/3138432/inside-a-hyperscale-data-center-how-different-is-it.html

Hyperscaler Storage

Enterprise

https://www.snia.org/hyperscaler

- Software-defined: Infrastructure where the functionality is completely decoupled from the underlying hardware and is both extensible and programmatic.

Commodity-based: Infrastructure built atop commodity or industry-standard infrastructure, usually an x86 rack-mount or blade server.

Converged: A scale-out architecture where server, storage, network, and virtualization/containerization components

https://www.infoworld.com/article/3040038/what-hyperscale-storage-really-means.html

- Today’s hardware-defined infrastructure

Software-defined infrastructure: the first step toward hyperscale

Individual component replacement

Flexible host setup

Dynamically optimized data centers

https://www.ericsson.com/en/white-papers/hyperscale-cloud--reimagining-data-centers-from-hardware-to-applications

- Veritas HyperScale Architecture

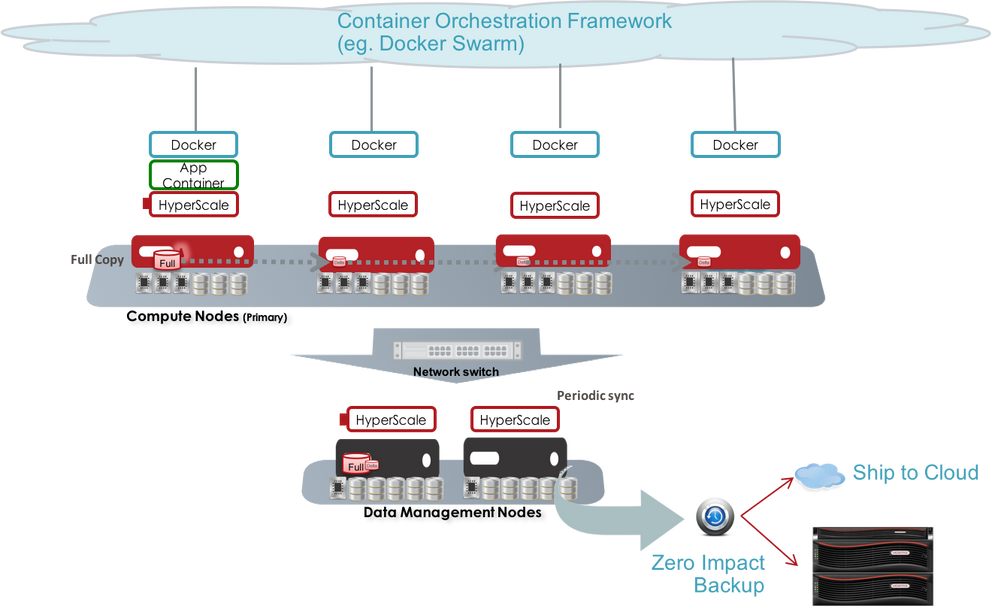

Veritas HyperScale for containers is based on a unique architecture to provide resilient storage with predictable performance. The predictability is provided by internally separating the storage for secondary operations from that required for primary operations. HyperScale employs an architecture with services segregated into two horizontal planes: the top plane (Compute Plane), responsible for active/primary IO from application containers, and the lower plane (Data Plane), responsible for version (snapshot) management of volumes and the usage of these snapshots for secondary operations like backup, analytics

https://vox.veritas.com/t5/Software-Defined-Storage/Predictable-performance-with-containerized-applications/ba-p/829920

- We are very excited to introduce DirectFlash™ Fabric, which extends the DirectFlash™ family outside of the array and into the fabrics via RDMA over converged Ethernet (also known as RoCEv2).

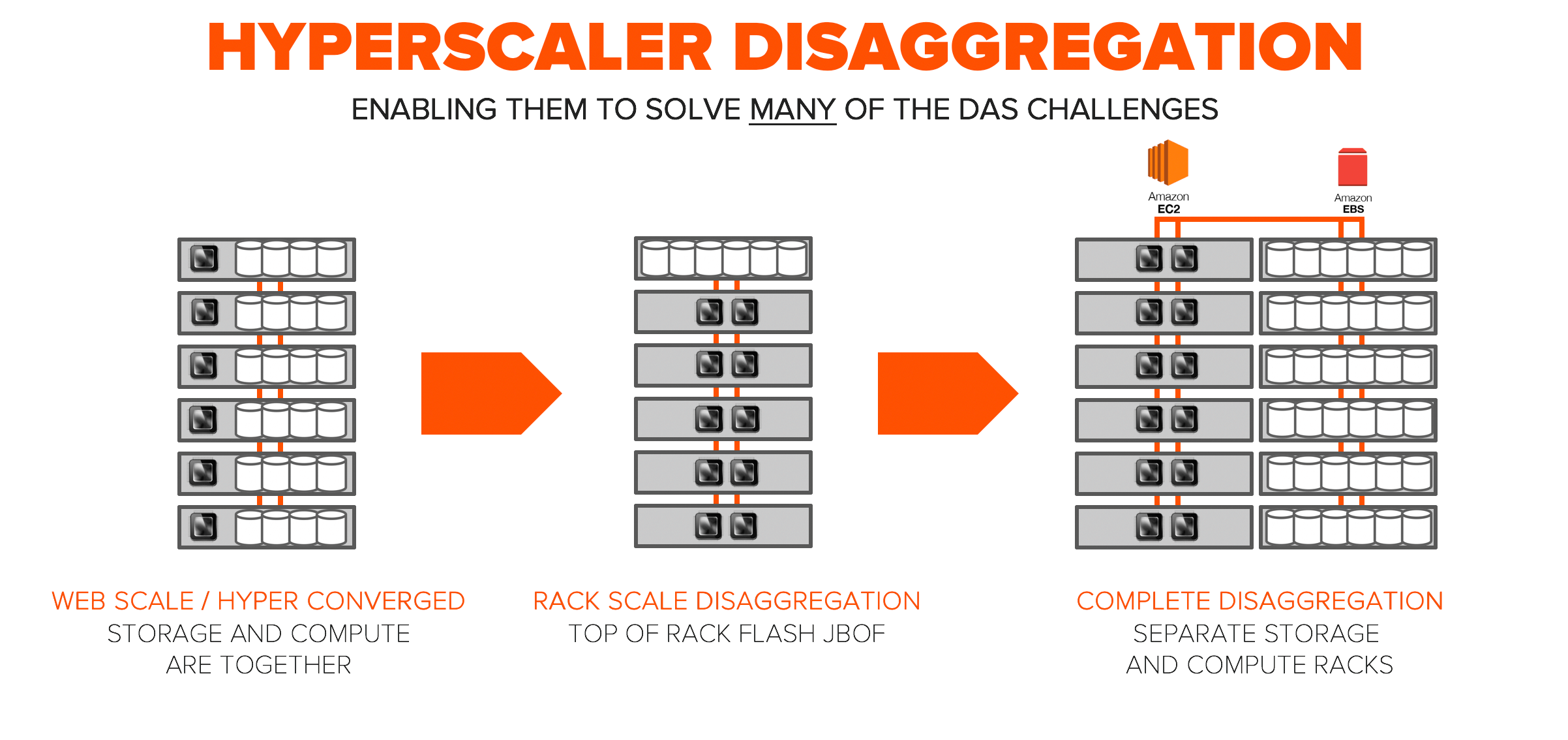

At hyperscale, efficiency is a requirement. In the beginning, there was “web-scale”. This was the simple and easy way to scale. Our hyper-converged friends are still in this early evolution. As you saw with the Stanford Flash Storage Disaggregation study, this type of architecture was immature and equates to high levels of inefficiency at scale. To enable independent scaling of compute and storage, many hyperscale environments went to “rack-scale disaggregation.”

https://blog.purestorage.com/directflash-fabric-continuation-of-pures-nvme-innovation/

Atlantis USX (Unified Software-defined Storage) is a software-defined storage solution (100% software) that delivers the performance of an all-flash storage array at half the cost of traditional SAN or NAS.

https://vinfrastructure.it/2015/05/atlantis-hyperscale-products/

- Choose

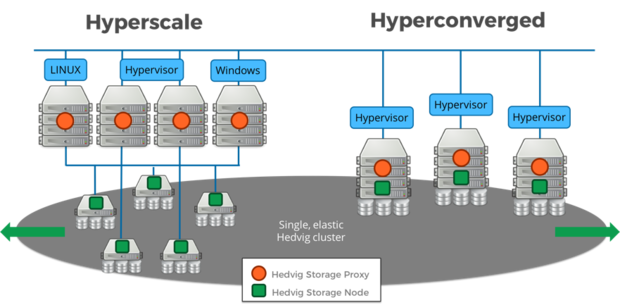

hyperscale when… your organization has 5,000 employees or more,more than 500 terabytes of data,more than 500 applications, ormore than 1,000 VMs.

Choose hyperconverged when… you’re below these watermark numbers, have five or fewer staff managing your virtual infrastructure, or you’re in a remote or branch office.

https://www.enterpriseai.news/2016/11/14/demystifying-hyperconverged-hyperscale-storage/
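
The rule of thumb quoted above can be written down as a small decision helper. This is only a sketch: the `Environment` class, its field names, and the `suggest_architecture` function are hypothetical, and the quoted text does not say what to do when the signals conflict (for example, a very large organization with a tiny infrastructure team), so the sketch reports that case as `"unclear"` rather than guessing.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """Hypothetical summary of an organization's footprint."""
    employees: int
    data_terabytes: float
    applications: int
    vms: int
    infra_staff: int
    remote_or_branch_office: bool = False


def suggest_architecture(env: Environment) -> str:
    """Apply the quoted watermark numbers; returns a suggestion, not a verdict."""
    # Any single watermark being crossed counts as a hyperscale signal.
    above_watermark = (
        env.employees >= 5000
        or env.data_terabytes > 500
        or env.applications > 500
        or env.vms > 1000
    )
    # Small teams and remote/branch offices point toward hyperconverged.
    small_site = env.infra_staff <= 5 or env.remote_or_branch_office

    if not above_watermark:
        return "hyperconverged"
    if small_site:
        # Assumption: the source gives no tie-break, so flag the conflict.
        return "unclear"
    return "hyperscale"


if __name__ == "__main__":
    large = Environment(employees=8000, data_terabytes=100,
                        applications=50, vms=200, infra_staff=20)
    print(suggest_architecture(large))
```

Treating each watermark as sufficient on its own follows the "or" in the quoted sentence; a stricter reading that requires several thresholds at once would need `and` in place of `or`.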