What are the biggest security risks associated with cloud computing, and how can they be mitigated?

Cloud computing provides many benefits, such as cost savings, scalability, and flexibility, but it also introduces security risks that organizations must address. Here are some of the biggest security risks associated with cloud computing and some ways to mitigate them:

Data breaches: Cloud providers store sensitive data on their servers, making them a target for cybercriminals. To mitigate the risk of data breaches, organizations should encrypt data at rest and in transit, implement access controls and strong passwords, and regularly monitor for unusual activity.

Insider threats: Cloud providers have privileged access to data and systems, making them a potential insider threat. Organizations should implement strict access controls, conduct background checks on employees, and monitor employee activity.

Denial-of-service (DoS) attacks: Cloud providers are vulnerable to DoS attacks, which can disrupt services and cause downtime. To mitigate the risk of DoS attacks, organizations should implement firewalls and intrusion detection systems, use load balancers to distribute traffic, and have a disaster recovery plan in place.

Lack of visibility and control: When data is stored in the cloud, organizations may have limited visibility and control over their data. To mitigate this risk, organizations should conduct regular audits of their cloud providers' security controls and implement monitoring tools to detect unusual activity.

Compliance risks: Organizations that handle sensitive data must comply with regulations such as GDPR and HIPAA. To mitigate compliance risks, organizations should choose cloud providers that are compliant with these regulations and implement processes and controls to ensure compliance.

In summary, to mitigate the security risks associated with cloud computing, organizations should implement strong access controls, encrypt data, monitor for unusual activity, conduct regular audits, and choose cloud providers that are compliant with regulations.

How do you ensure that sensitive data is kept secure in a cloud environment, and what tools and technologies do you use to achieve this?

To ensure that sensitive data is kept secure in a cloud environment, there are several best practices, tools, and technologies that organizations can use:

Encryption: Data encryption is an essential tool for protecting sensitive data in the cloud. Encryption algorithms are used to scramble data, making it unreadable to anyone who does not have the encryption key. Cloud providers typically offer encryption capabilities, and organizations should use encryption to protect sensitive data both in transit and at rest.

Access controls: Access controls limit who can access sensitive data in the cloud. Organizations should use role-based access control (RBAC) to ensure that only authorized individuals can access sensitive data. RBAC assigns permissions based on an individual's role in the organization, limiting access to only what is necessary to perform their job.

Multi-factor authentication: Multi-factor authentication (MFA) is an extra layer of security that requires users to provide two or more forms of authentication to access sensitive data. MFA helps prevent unauthorized access, even if an attacker has obtained the user's password.

Data loss prevention (DLP): DLP tools are designed to prevent sensitive data from leaving an organization's network. These tools can detect and block the transmission of sensitive data, such as credit card numbers or Social Security numbers, outside of the organization.

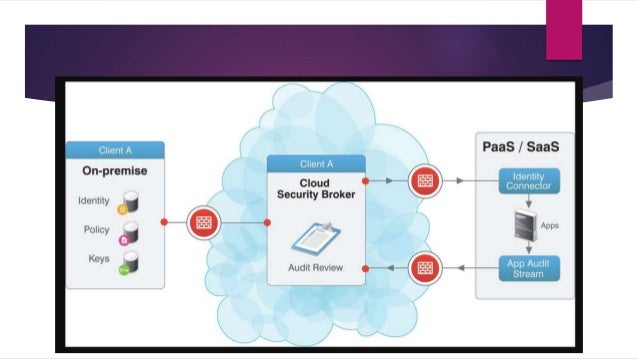

Cloud Access Security Broker (CASB): A CASB is a security tool that provides visibility and control over cloud applications and data. A CASB can monitor cloud traffic, enforce security policies, and detect and respond to threats.

Regular security audits: Organizations should conduct regular security audits of their cloud environment to identify vulnerabilities and ensure compliance with security policies and regulations.

In summary, to ensure that sensitive data is kept secure in a cloud environment, organizations should use encryption, access controls, MFA, DLP, CASB, and regular security audits. By implementing these best practices and using these tools and technologies, organizations can better protect their sensitive data in the cloud.
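The DLP idea described above (detecting credit card numbers before they leave the organization) can be sketched roughly as follows. This is a minimal illustration, not how any particular DLP product works: the regular expression, the function names, and the block-on-any-match policy are all assumptions made for the example. The Luhn checksum itself is the standard check used for payment card numbers.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
# (Assumed pattern for illustration; real DLP rules are more elaborate.)
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return substrings that look like card numbers and pass the Luhn check."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(match.group())
    return hits


def should_block(text: str) -> bool:
    """Hypothetical policy: block any outbound message containing a card number."""
    return bool(find_card_numbers(text))
```

Combining a pattern match with a checksum is one common way to cut down false positives, since most random digit runs fail the Luhn check.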

What steps would you take to ensure that the cloud infrastructure is properly configured to prevent unauthorized access?

To ensure that the cloud infrastructure is properly configured to prevent unauthorized access, here are some steps that organizations should take:

Implement strong authentication: Organizations should implement strong authentication mechanisms, such as multi-factor authentication (MFA) and strong passwords, to prevent unauthorized access to cloud resources.

Use role-based access controls (RBAC): RBAC assigns permissions to users based on their role in the organization. This ensures that users only have access to the resources they need to perform their job.

Implement network security measures: Organizations should use network security measures such as firewalls, intrusion detection and prevention systems (IDS/IPS), and virtual private networks (VPNs) to protect their cloud infrastructure from unauthorized access.

Implement data encryption: Organizations should use data encryption to protect sensitive data stored in the cloud. Encryption should be used both in transit and at rest.

Regularly monitor access and activity: Organizations should regularly monitor access and activity logs to detect any unauthorized access or suspicious activity.

Regularly update and patch software: Organizations should regularly update and patch software to ensure that vulnerabilities are addressed and that the cloud infrastructure remains secure.

Conduct regular security audits: Organizations should conduct regular security audits of their cloud infrastructure to identify vulnerabilities and ensure compliance with security policies and regulations.

Implement a disaster recovery plan: Organizations should implement a disaster recovery plan to ensure that in the event of a security breach, data can be recovered quickly and the impact on the organization is minimized.

In summary, to ensure that the cloud infrastructure is properly configured to prevent unauthorized access, organizations should implement strong authentication, use RBAC, implement network security measures, use data encryption, monitor access and activity, regularly update and patch software, conduct regular security audits, and implement a disaster recovery plan.
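As a rough illustration of the RBAC step above, the sketch below maps roles to permissions and checks a request against them. The role names, permission strings, and the `is_allowed` helper are hypothetical; a real deployment would rely on the cloud provider's IAM service rather than an in-memory dictionary.

```python
# Hypothetical role-to-permission mapping, kept in memory for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"storage:read"},
    "developer": {"storage:read", "storage:write"},
    "admin": {"storage:read", "storage:write", "iam:manage"},
}


def is_allowed(roles: list[str], permission: str) -> bool:
    """Allow the action only if one of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# Usage: a developer can write to storage but cannot manage IAM.
print(is_allowed(["developer"], "storage:write"))  # True
print(is_allowed(["developer"], "iam:manage"))     # False
```

Unknown roles grant nothing, so the default outcome is denial, which matches the least-privilege intent described above.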

Can you explain how multi-factor authentication works in a cloud environment and why it is important?

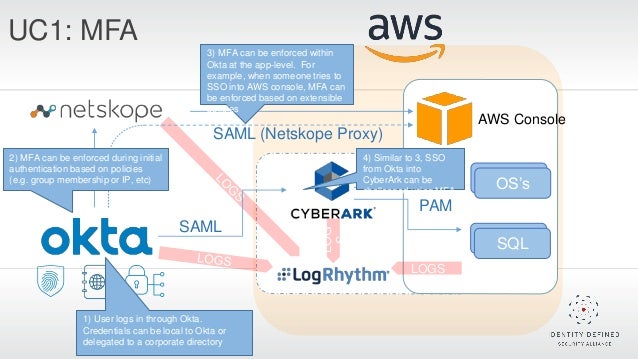

Multi-factor authentication (MFA) is a security mechanism that requires users to provide two or more forms of authentication to access a system or application. In a cloud environment, MFA is typically used to provide an additional layer of security beyond a username and password.

MFA in a cloud environment typically works in the following way:

The user provides their username and password to access a cloud application or resource.

The cloud service prompts the user to provide a second form of authentication, such as a fingerprint scan, a one-time password (OTP), or a smart card.

If the user provides the correct second form of authentication, they are granted access to the cloud application or resource.
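When the second factor in the steps above is a one-time password, it is often a time-based OTP as specified in RFC 6238, which builds on the HMAC-based OTP of RFC 4226. The sketch below shows one way this could be implemented with the Python standard library. The function names and the `verify_second_factor` helper are assumptions for illustration, and production systems would normally use a vetted library and also handle clock drift and rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_second_factor(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Hypothetical helper: compare the submitted code in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)
```

The shared secret is provisioned once (for example by scanning a QR code into an authenticator app), after which the server and the device derive the same short-lived code independently.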

MFA is important in a cloud environment for several reasons:

Increased security: MFA provides an additional layer of security beyond a username and password, making it more difficult for attackers to gain unauthorized access to cloud resources.

Compliance: Many regulatory frameworks, such as PCI-DSS, require the use of MFA to protect sensitive data.

Reduced risk of data breaches: By requiring multiple forms of authentication, MFA reduces the risk of data breaches and other security incidents.

Improved user experience: While MFA may add an extra step to the login process, it can also improve the user experience by providing additional security without requiring users to remember complex passwords.

In summary, MFA in a cloud environment provides an additional layer of security beyond a username and password, reducing the risk of data breaches and other security incidents. MFA is also often required for compliance purposes and can improve the user experience.

How do you ensure compliance with regulatory requirements such as GDPR, HIPAA, or PCI-DSS in a cloud environment?

Ensuring compliance with regulatory requirements such as GDPR, HIPAA, or PCI-DSS in a cloud environment requires a comprehensive approach that involves both technical and organizational measures. Here are some steps that organizations can take to ensure compliance:

Understand the regulatory requirements: Organizations must have a thorough understanding of the regulatory requirements that apply to them. This includes understanding the scope of the regulation, the types of data that are covered, and the specific requirements for data handling, storage, and processing.

Select a compliant cloud service provider: Organizations should select a cloud service provider that can support the regulatory requirements that apply to them. This includes reviewing the provider's attestations and certifications, such as SOC 2 reports and ISO 27001, and, where HIPAA applies, confirming that the provider will sign a Business Associate Agreement (BAA).

Implement appropriate security controls: Organizations should implement appropriate security controls to protect the data they store and process in the cloud. This includes implementing access controls, encryption, and monitoring tools.

Conduct regular security assessments: Organizations should conduct regular security assessments to identify and remediate any vulnerabilities in their cloud environment.

Develop policies and procedures: Organizations should develop policies and procedures that address the specific regulatory requirements that apply to them. This includes policies around data handling, incident response, and data breach notification.

Train employees: Organizations should provide training to employees on the regulatory requirements that apply to them and the policies and procedures in place to ensure compliance.

Conduct regular audits: Organizations should conduct regular audits to ensure that they are in compliance with the regulatory requirements that apply to them. This includes both internal audits and external audits by third-party auditors.

In summary, ensuring compliance with regulatory requirements such as GDPR, HIPAA, or PCI-DSS in a cloud environment requires a comprehensive approach that involves understanding the regulatory requirements, selecting a compliant cloud service provider, implementing appropriate security controls, developing policies and procedures, training employees, and conducting regular audits.

Can you describe a situation where you had to deal with a security incident in a cloud environment, and how did you respond to it?

The following hypothetical scenario illustrates how a security incident in a cloud environment might be handled:

Suppose an organization that stores sensitive customer data in a cloud environment becomes aware of a potential security incident. The organization receives a notification from their cloud service provider that an unauthorized user attempted to access the organization's cloud resources using stolen credentials. The organization immediately takes the following steps to respond to the incident:

Isolate the affected resources: The organization isolates the affected resources to prevent any further unauthorized access or damage to the system.

Conduct a forensic investigation: The organization conducts a forensic investigation to determine the scope and impact of the incident. This includes identifying the source of the breach, the types of data that were accessed, and any potential damage or loss.

Notify affected parties: The organization notifies any affected parties, such as customers or partners, of the potential data breach and advises them on any steps they can take to protect themselves.

Implement security controls: The organization implements additional security controls to prevent similar incidents from occurring in the future. This includes reviewing access controls, implementing multi-factor authentication, and strengthening password policies.

Report the incident: The organization reports the incident to any applicable regulatory authorities and works with them to ensure that any required notifications or remediation measures are taken.

Conduct a post-incident review: The organization conducts a post-incident review to identify any gaps or weaknesses in their security controls and implements any necessary improvements.

In summary, responding to a security incident in a cloud environment requires a swift and coordinated effort to isolate the affected resources, conduct a forensic investigation, notify affected parties, implement additional security controls, report the incident to regulatory authorities, and conduct a post-incident review.

How do you ensure that cloud applications and services are kept up-to-date with the latest security patches and updates?

Keeping cloud applications and services up-to-date with the latest security patches and updates is essential to prevent security vulnerabilities and ensure the overall security of the cloud environment. Here are some steps that organizations can take to ensure that cloud applications and services are kept up-to-date:

Implement an automated patch management system: An automated patch management system can be used to ensure that all applications and services in the cloud environment are patched with the latest security updates as soon as they become available.

Monitor security advisories and alerts: Organizations should monitor security advisories and alerts from their cloud service provider, software vendors, and other sources to stay informed of any new security vulnerabilities or updates that need to be applied.

Prioritize patching based on risk: Organizations should prioritize patching based on the risk associated with each vulnerability. Critical vulnerabilities should be patched immediately, while lower-risk vulnerabilities can be addressed in a more routine manner.

Test patches before deployment: Organizations should test patches in a non-production environment before deploying them in production to ensure that they do not cause any compatibility issues or other problems.

Ensure compatibility with third-party integrations: Organizations should ensure that patches are compatible with any third-party integrations that are used in the cloud environment, such as APIs or plugins.

Implement a change management process: Organizations should implement a change management process that includes documentation, testing, and approval procedures for all updates and patches to ensure that they are applied consistently and correctly.

In summary, ensuring that cloud applications and services are kept up-to-date with the latest security patches and updates requires a systematic approach that includes implementing an automated patch management system, monitoring security advisories and alerts, prioritizing patching based on risk, testing patches before deployment, ensuring compatibility with third-party integrations, and implementing a change management process.
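The risk-based prioritization step above could be sketched as a simple sort. The `Finding` fields, the placeholder identifiers, and the tie-breaking rule (internet-facing systems first when scores are equal) are assumptions made for this example; the 9.0 threshold follows the CVSS v3 qualitative scale, where scores of 9.0 and above are rated Critical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    identifier: str        # placeholder ID, not a real advisory
    cvss: float            # CVSS base score, 0.0 to 10.0
    internet_facing: bool  # whether the affected system is exposed to the internet


def needs_immediate_patch(finding: Finding) -> bool:
    """Treat CVSS 9.0+ (Critical on the CVSS v3 scale) as patch-now."""
    return finding.cvss >= 9.0


def patch_order(findings: list[Finding]) -> list[Finding]:
    """Highest score first; internet-facing systems win ties."""
    return sorted(findings, key=lambda f: (f.cvss, f.internet_facing), reverse=True)


findings = [
    Finding("EXAMPLE-1", 7.5, False),
    Finding("EXAMPLE-2", 9.8, False),
    Finding("EXAMPLE-3", 7.5, True),
    Finding("EXAMPLE-4", 5.0, True),
]
for finding in patch_order(findings):
    label = "immediate" if needs_immediate_patch(finding) else "routine"
    print(finding.identifier, finding.cvss, label)
```

A real process would likely weigh more factors (known exploitation, asset criticality, compensating controls), but the shape stays the same: score each finding, then work down the sorted list.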

How do you maintain the security of cloud-based applications in the face of evolving threats and attack vectors?

Maintaining the security of cloud-based applications requires a proactive and continuous approach that can adapt to evolving threats and attack vectors. Here are some strategies that organizations can use to maintain the security of cloud-based applications:

Conduct regular security assessments: Regular security assessments can help identify vulnerabilities in cloud-based applications and assess the effectiveness of existing security controls. These assessments can be performed by internal security teams or third-party auditors.

Monitor for security incidents: Organizations should monitor cloud-based applications for security incidents, such as unauthorized access or data breaches, using security information and event management (SIEM) tools, intrusion detection systems (IDS), and other security monitoring tools.

Stay informed of emerging threats: Organizations should stay informed of emerging threats and attack vectors by monitoring threat intelligence feeds and participating in information sharing communities. This can help identify potential threats and take proactive measures to mitigate them.

Implement security best practices: Organizations should implement security best practices for cloud-based applications, such as using encryption, implementing strong access controls, and following secure coding practices.

Train employees on security awareness: Employees should be trained on security awareness to ensure that they understand the risks associated with cloud-based applications and are able to identify and report potential security incidents.

Update security controls regularly: Security controls, such as firewalls, intrusion prevention systems, and access controls, should be updated regularly to ensure that they are effective against the latest threats and attack vectors.

Use security automation and orchestration: Security automation and orchestration can help organizations respond quickly and effectively to security incidents by automating routine tasks and orchestrating incident response activities.

In summary, maintaining the security of cloud-based applications requires a proactive and continuous approach that includes regular security assessments, monitoring for security incidents, staying informed of emerging threats, implementing security best practices, training employees on security awareness, updating security controls regularly, and using security automation and orchestration.
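To make the monitoring strategy above more concrete, here is a minimal sketch of one detection rule a SIEM-style tool might apply: flagging sources with many failed logins in a short window. The event format, the threshold of five failures, and the 60-second window are assumptions chosen for illustration, and the IP addresses come from documentation-reserved ranges.

```python
from collections import defaultdict, deque


def find_bruteforce_sources(events, threshold=5, window_seconds=60):
    """Return source IPs with at least `threshold` failed logins inside the window.

    `events` is an iterable of (timestamp_seconds, source_ip, success) tuples,
    assumed to be sorted by timestamp.
    """
    recent_failures = defaultdict(deque)
    flagged = set()
    for timestamp, source_ip, success in events:
        if success:
            continue
        failures = recent_failures[source_ip]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while failures and timestamp - failures[0] > window_seconds:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(source_ip)
    return flagged
```

In practice the flagged set would feed an alert or an automated response, such as temporarily blocking the source or forcing a step-up to MFA.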

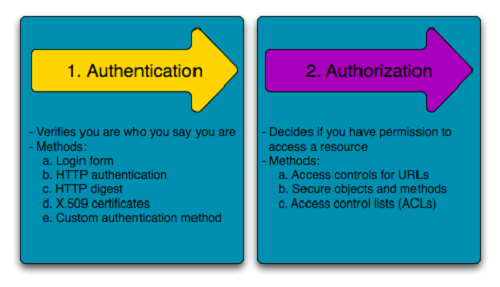

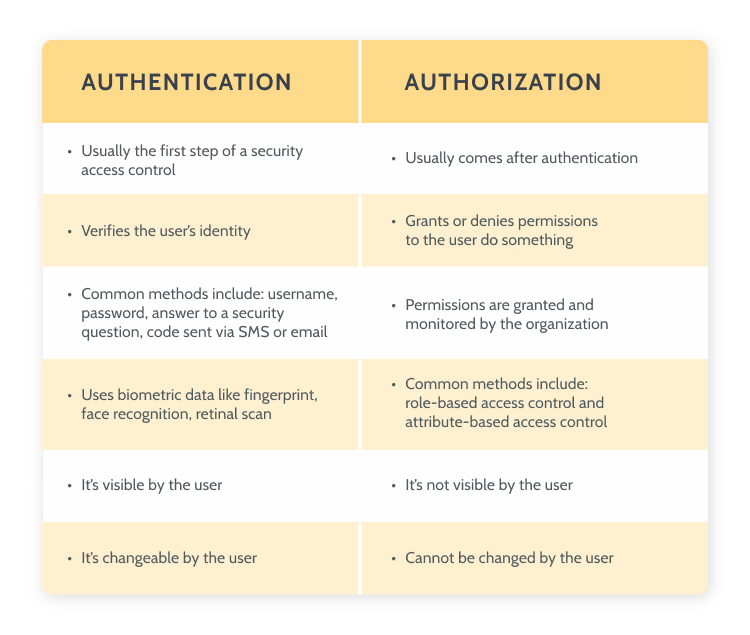

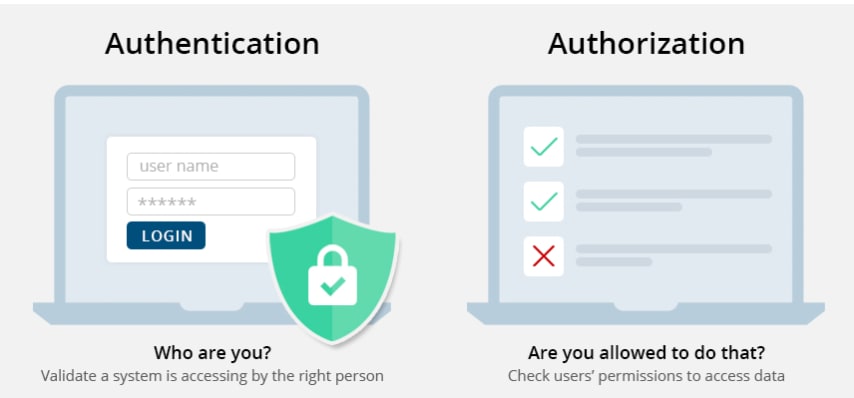

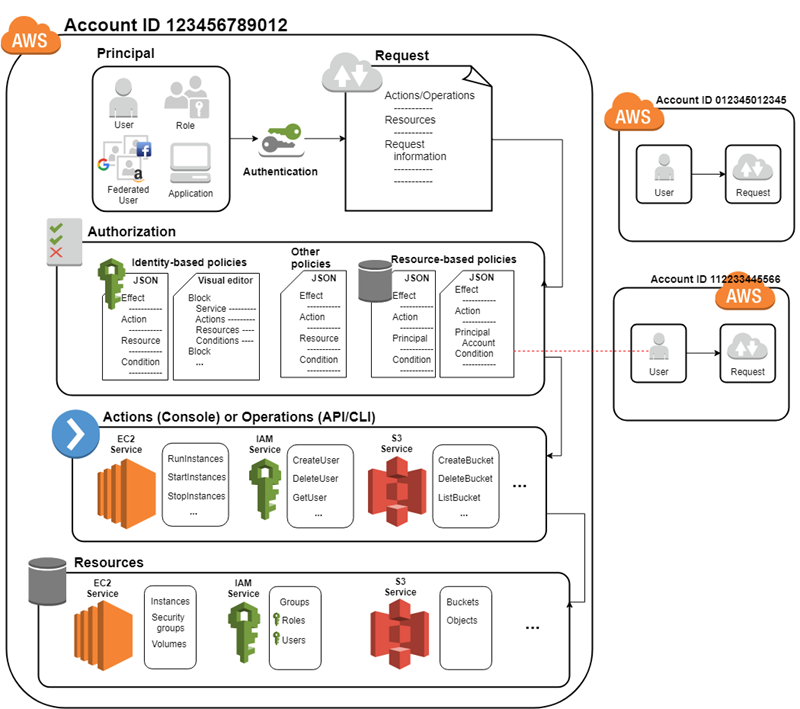

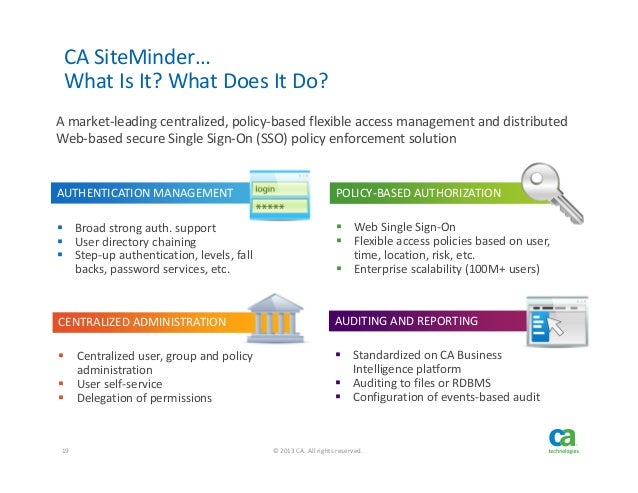

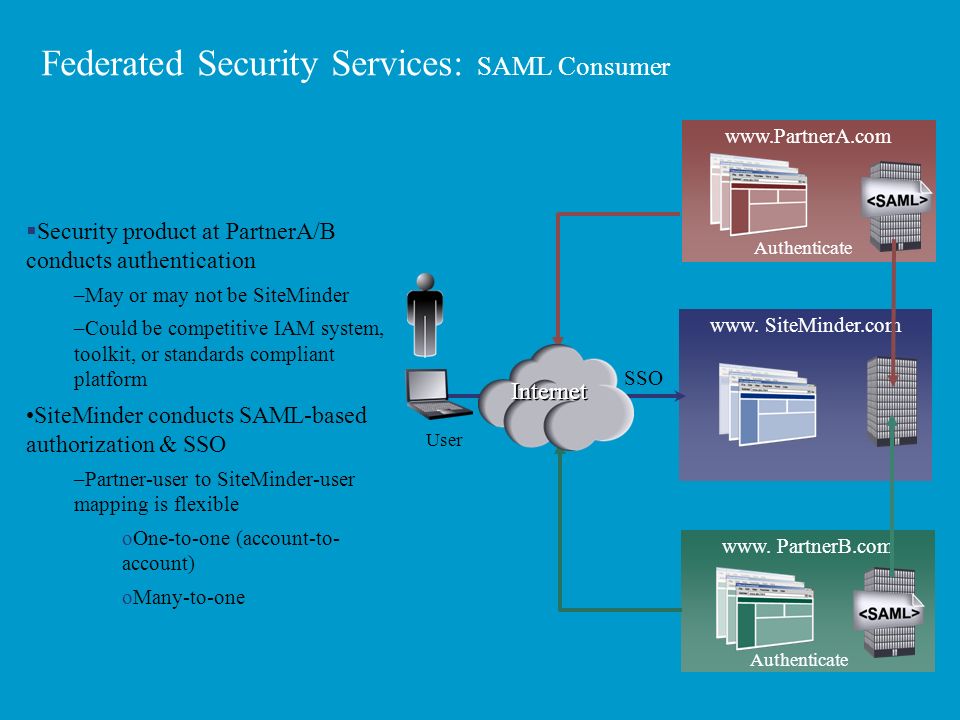

- What Is Authentication?

Authentication is the act of validating that users are whom they claim to be.

By contrast, giving someone permission to download a particular file on a server or providing individual users with administrative access to an application are good examples of authorization, not authentication.

What Is Authorization?

Authorization in system security is the process of giving the user permission to access a specific resource or function. This term is often used interchangeably with access control or client privilege.

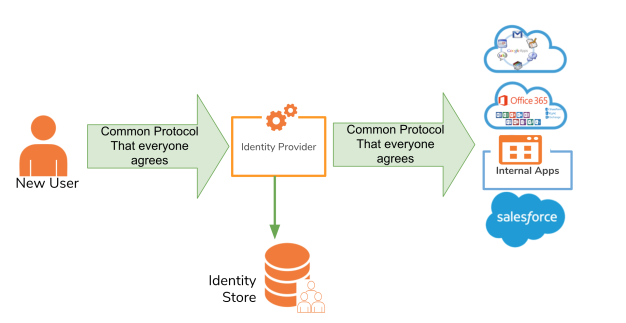

In secure environments, authorization must always follow authentication. Users should first prove that their identities are genuine before an organization’s administrators grant them access to the requested resources.

- https://www.okta.com/identity-101/authentication-vs-authorization/
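The ordering described above (authenticate first, then authorize) can be sketched as two separate checks. Everything here is hypothetical: the in-memory `USERS` table, the sample password, the permission string, and the HTTP-style return values are for illustration only, and the PBKDF2 iteration count is an assumed value rather than a recommendation.

```python
import hashlib
import hmac
import os


def _hash(password: str, salt: bytes) -> bytes:
    """Derive a password hash with PBKDF2-HMAC-SHA256 (iteration count assumed)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


_SALT = os.urandom(16)
# Hypothetical user store; a real system would use an identity provider.
USERS = {
    "alice": {
        "salt": _SALT,
        "password_hash": _hash("correct horse", _SALT),
        "permissions": {"download:report.pdf"},
    }
}


def authenticate(username: str, password: str) -> bool:
    """Authentication: is this user who they claim to be?"""
    record = USERS.get(username)
    if record is None:
        return False
    return hmac.compare_digest(record["password_hash"], _hash(password, record["salt"]))


def authorize(username: str, permission: str) -> bool:
    """Authorization: is this (already authenticated) user allowed to do this?"""
    return permission in USERS[username]["permissions"]


def handle_request(username: str, password: str, permission: str) -> str:
    if not authenticate(username, password):
        return "401 Unauthorized"  # identity not proven
    if not authorize(username, permission):
        return "403 Forbidden"     # identity proven, access not granted
    return "200 OK"
```

The two failure cases map naturally onto HTTP 401 and 403, which is a handy way to remember the distinction.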

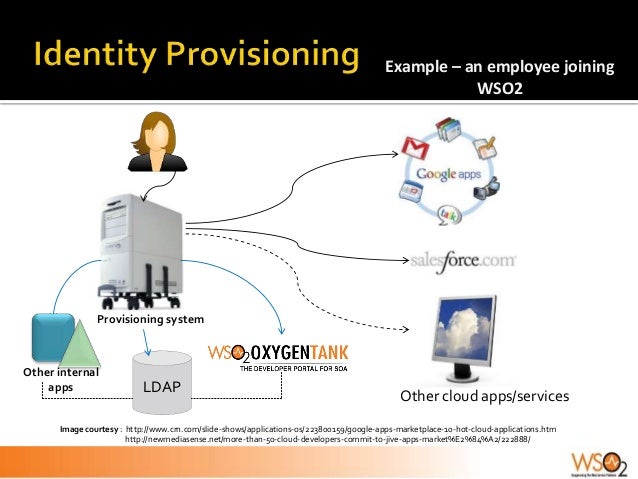

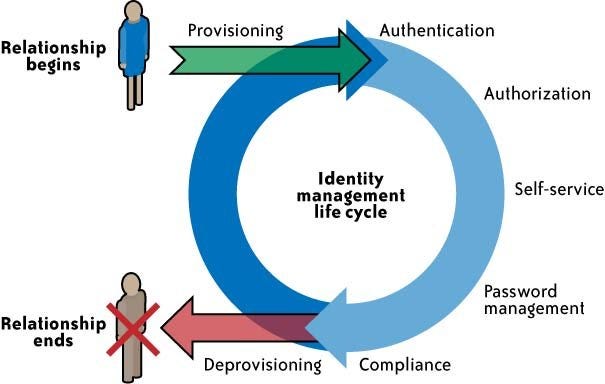

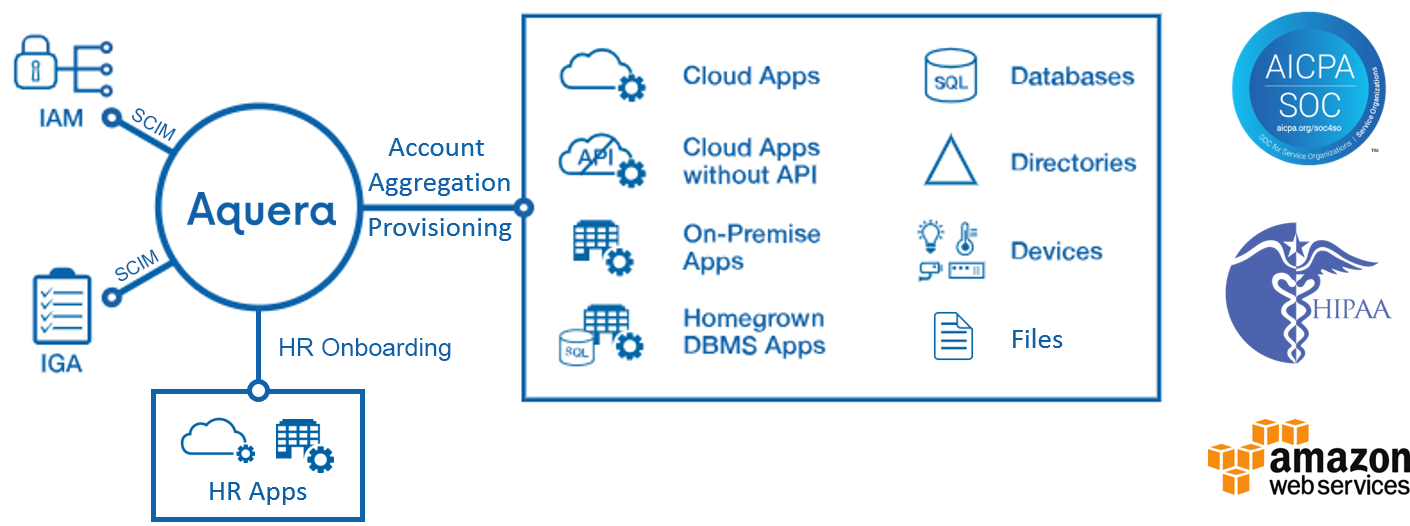

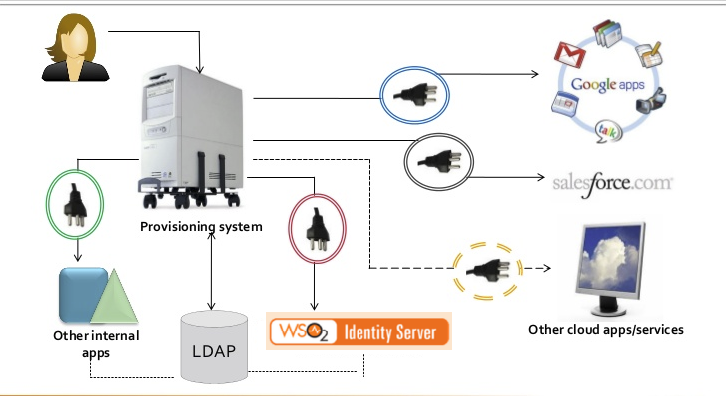

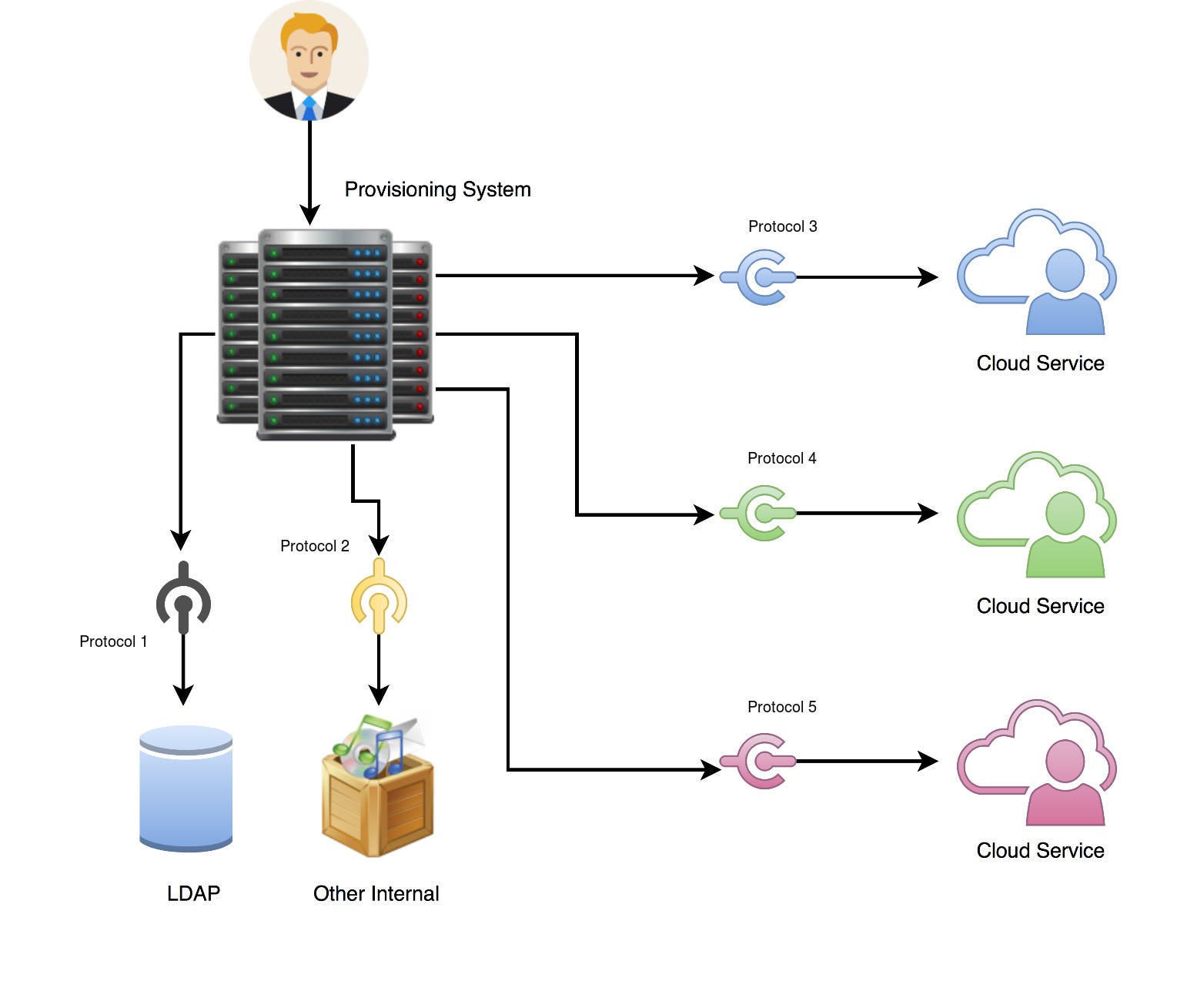

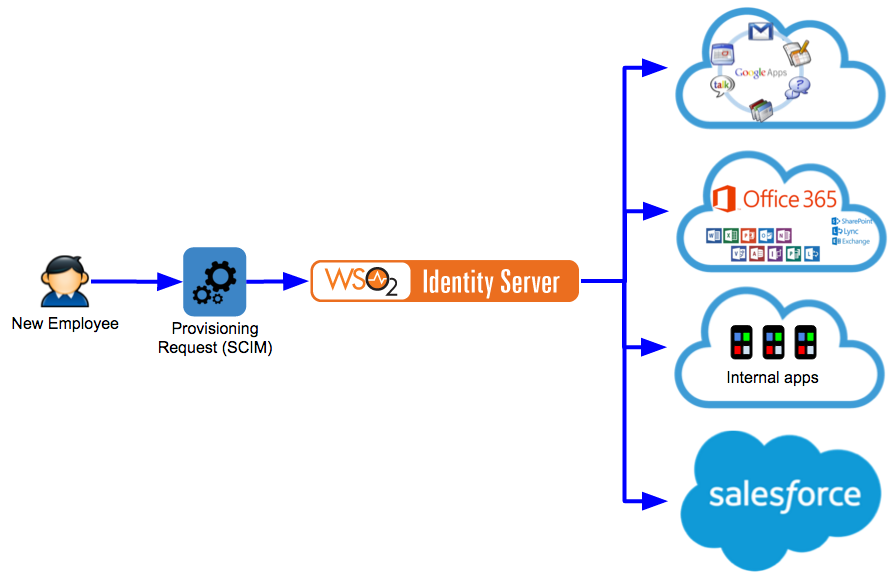

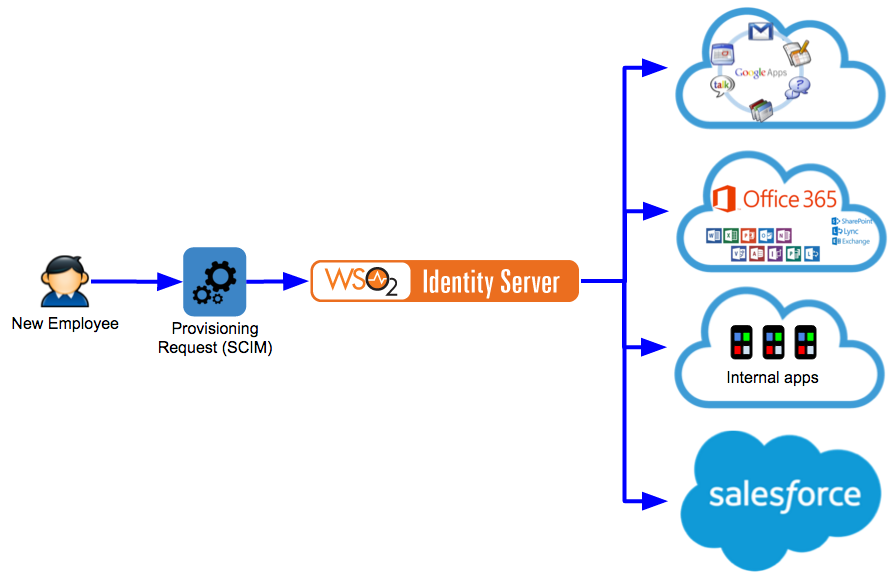

- User provisioning and deprovisioning involves the process of creating, updating and deleting user accounts in multiple applications and systems. This access management practice can sometimes include associated information, such as user entitlements, group memberships and even the groups themselves.

User provisioning and deprovisioning key benefits

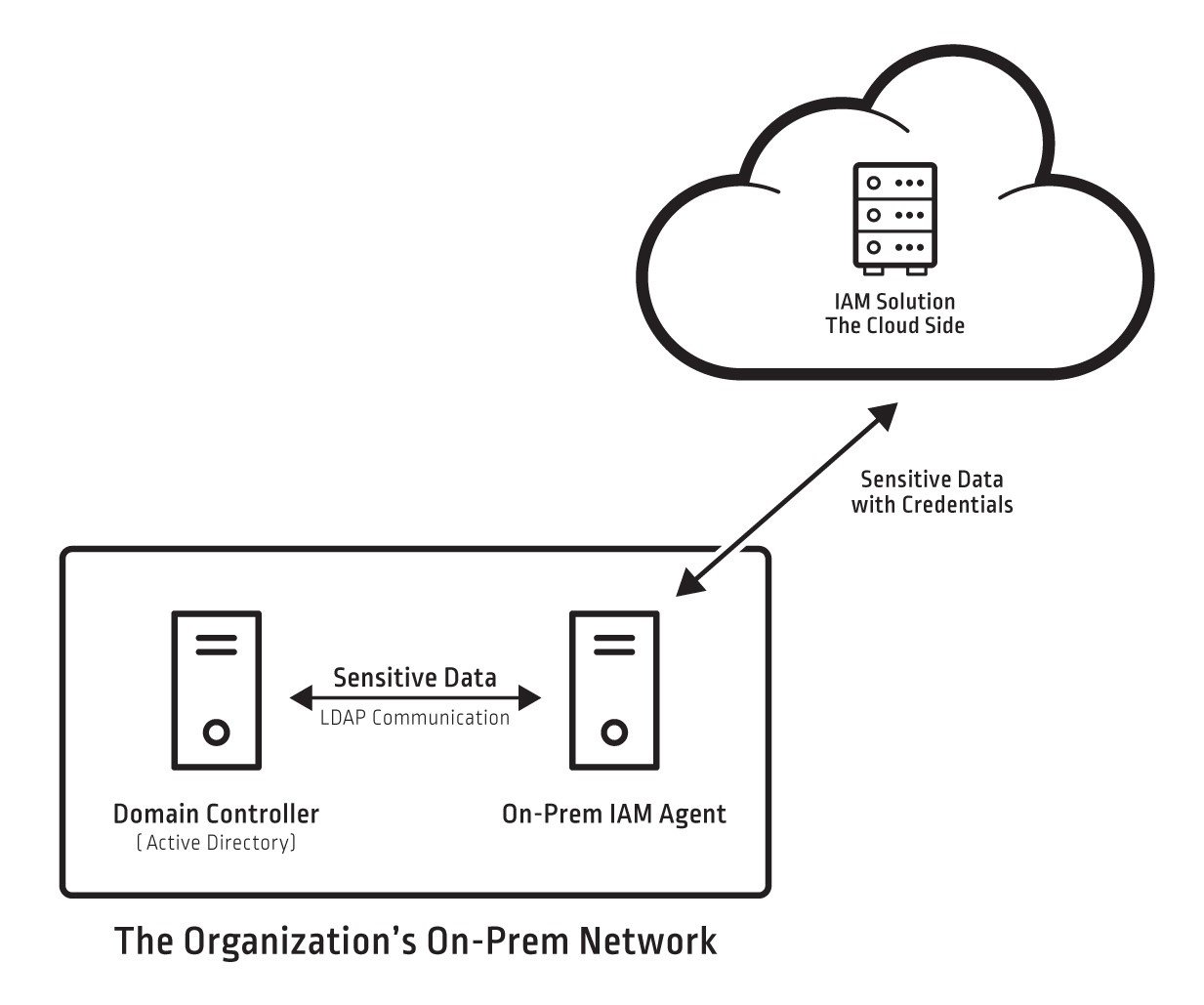

Automated user provisioning is one of the main features of many identity and access management (IAM) solutions. Provisioning comes into play when an employee joins an organization, moves to a different department or division, or exits a company. This is known as the joiner/mover/leaver (JML) process. By integrating an IAM solution directly to HR and personnel systems, you connect the process of creating/updating/deleting user accounts with HR actions. Actions that result in changes to HR data, such as those related to employee onboarding and offboarding, can automatically result in changes to permissions for accessing systems and applications tied to corresponding employee accounts.

Automated user provisioning helps keep your company secure by ensuring employees have access only to the apps they need. Automated user deprovisioning helps keep your company secure by ensuring that whenever an employee leaves, their access is automatically removed for all connected applications. In addition, all existing user sessions are removed to reduce security risk.

https://www.onelogin.com/learn/what-is-user-provisioning-and-deprovisioning
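The joiner/mover/leaver flow described above might look something like the following sketch, in which HR events drive account changes. The event shape, the `DEPARTMENT_APPS` mapping, and the in-memory `accounts` dictionary are all assumptions for illustration; an actual IAM product would push these changes to each connected application.

```python
# Hypothetical mapping from department to the apps its members need.
DEPARTMENT_APPS = {
    "engineering": {"git", "ci", "chat"},
    "finance": {"ledger", "chat"},
}


def apply_hr_event(accounts: dict, event: dict) -> None:
    """Update the accounts directory in place from one HR event."""
    user = event["user"]
    kind = event["type"]
    if kind == "joiner":
        accounts[user] = {
            "active": True,
            "apps": set(DEPARTMENT_APPS[event["department"]]),
            "sessions": [],
        }
    elif kind == "mover":
        # Replace entitlements rather than adding to them, so old access is dropped.
        accounts[user]["apps"] = set(DEPARTMENT_APPS[event["department"]])
    elif kind == "leaver":
        # Deprovision: disable the account, remove app access, end open sessions.
        accounts[user]["active"] = False
        accounts[user]["apps"] = set()
        accounts[user]["sessions"].clear()
    else:
        raise ValueError(f"unknown HR event type: {kind}")
```

Replacing entitlements on a move, instead of accumulating them, is one way to avoid the gradual build-up of access that people no longer need.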

- SCIM, or System for Cross-domain Identity Management, is an open standard that allows for the automation of user provisioning. SCIM communicates user identity data between identity providers (such as companies with multiple individual users) and service providers requiring user identity information (such as enterprise SaaS apps).

Why use SCIM?

In short, SCIM makes user data more secure and simplifies the user experience by automating the user identity lifecycle management process.

With SCIM, user identities can be created either directly in a tool like Okta, or imported from external systems like HR software or Active Directory.

Employees outside of IT can take advantage of single sign-on (SSO) to streamline their own workflows and reduce the need to pester IT for password resets by up to 50%.

When employees no longer need to sign on to each of their accounts individually, companies can more easily ensure security policy compliance. This also mitigates risks associated with employees using the same password across different tools and apps.

How it works

SCIM is a REST and JSON-based protocol that defines a client and server role. A client is usually an identity provider (IdP), like Okta, that contains a robust directory of user identities. A service provider (SP) is usually a SaaS app, like Box or Slack, that needs a subset of information from those identities. When changes to identities are made in the IdP, including create, update, and delete, they are automatically synced to the SP according to the SCIM protocol. The IdP can also read identities from the SP to add to its directory and to detect incorrect values in the SP that could create security vulnerabilities. For end users, this means that they have seamless access to the applications they are assigned, with up-to-date profiles and permissions.

https://www.okta.com/blog/2017/01/what-is-scim/
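To give a feel for what the IdP sends to the SP, the sketch below builds two SCIM 2.0 request bodies: one to create a user and one to deactivate that user. The schema URNs, the `/Users` endpoint, and the `Operations` structure come from RFC 7643 and RFC 7644; the helper names, the sample user, and the dictionary used to describe each request are assumptions for illustration. No network call is made.

```python
import json

USER_SCHEMA = "urn:ietf:params:scim:schemas:core:2.0:User"
PATCH_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"


def create_user_request(user_name: str, given: str, family: str, email: str) -> dict:
    """Describe the POST an IdP would send to provision a user at the SP."""
    return {
        "method": "POST",
        "path": "/Users",
        "body": {
            "schemas": [USER_SCHEMA],
            "userName": user_name,
            "name": {"givenName": given, "familyName": family},
            "emails": [{"value": email, "type": "work", "primary": True}],
            "active": True,
        },
    }


def deactivate_user_request(user_id: str) -> dict:
    """Describe the PATCH an IdP would send to deprovision a user at the SP."""
    return {
        "method": "PATCH",
        "path": f"/Users/{user_id}",
        "body": {
            "schemas": [PATCH_SCHEMA],
            "Operations": [{"op": "replace", "path": "active", "value": False}],
        },
    }


# Usage: print the JSON that would be sent with Content-Type application/scim+json.
request = create_user_request("dana", "Dana", "Example", "dana@example.com")
print(request["method"], request["path"])
print(json.dumps(request["body"], indent=2))
```

Because both sides agree on this schema, the same payload shape works against any SP that implements SCIM, which is the main appeal of the standard.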

- System for Cross-domain Identity Management: Protocol

Abstract

The System for Cross-domain Identity Management (SCIM) specification is an HTTP-based protocol that makes managing identities in multi-domain scenarios easier to support via a standardized service. Examples include, but are not limited to, enterprise-to-cloud service providers and inter-cloud scenarios.

https://tools.ietf.org/html/rfc7644

- CIS Controls™ Cloud Companion Guide

Ensuring and understanding that the service-level agreements (SLAs) and legal contracts with the Cloud Service Provider (CSP) highlight liability, service levels, breach disclosure, and incident response timeframes is an important piece of your cloud security.

As new tools become available, the cloud consumer should consider a hybrid approach using third-party tools along with CSP-native security tools that best fit an organization's security and management needs.

Company management processes should ensure there is overlap rather than gaps in coverage between native and third-party tools.

A cloud environment has four distinct service models that the application or service can fall under:

IaaS (Infrastructure as a Service) is a cloud environment that provides computing resources such as virtual servers, storage, and networking hardware. The consumer utilizes their own software, such as operating systems, middleware, and applications. The underlying cloud infrastructure is managed by the CSP.

PaaS (Platform as a Service) is a cloud computing environment for development and management of a consumer’s applications. It includes the infrastructure hardware (virtual servers, storage, and networking) while tying in the middleware and development tools to allow the consumer to deploy their applications. It is designed to support the complete application lifecycle while leaving the management of the underlying infrastructure to the CSP.

SaaS (Software as a Service) is a cloud computing software solution that provides the consumer with access to a complete software product. The software application resides on a cloud environment and is accessed by the consumer through the web or an application program interface (API). The consumer can utilize the application to store and analyze data without having to worry about managing the infrastructure, service, or software, as that falls to the CSP.

FaaS (Function as a Service) is a cloud computing service that allows the consumer to develop, manage, and run their application functionalities without having to manage and maintain any of the infrastructure that is required. The consumer can execute code in response to events that happen within the CSP or the application without having to build out or maintain a complex underlying infrastructure.

A cloud environment has multiple deployment models:

Private cloud (on-prem) consists of all the computing resources being hosted and used exclusively by one consumer (organization) within its own offices and data centers. The consumer is responsible for the operational costs, hardware, software, and the resources required to build and maintain the infrastructure. This is best used for critical business operations and applications that require complete control and configurability.

Private cloud (third-party hosted) is a private cloud that is hosted by an external third-party provider. The third party provides an exclusive cloud environment for the consumer and manages the hardware. All costs associated with the maintenance are the responsibility of the consumer.

Community cloud (shared) is a deployment solution where the computing resources and infrastructure are shared between several organizations. The resources can be managed internally or by a third party, and they can be hosted on-prem or externally. The organizations share the cost and often have similar cloud security requirements and business objectives.

Public cloud is infrastructure and computing services hosted by a third-party company defined as a CSP. It is available over the internet, and the services are delivered through a self-service portal. The consumer is provided on-demand accessibility and scalability without the high overhead cost of maintaining the physical hardware and software. The CSP is responsible for the management and maintenance of the system, while the consumer pays only for the resources they use.

Hybrid cloud is an environment that uses a combination of the three cloud deployment models, private cloud (on-prem), private cloud (third-party hosted), and public cloud, with an orchestration service between the three deployment models.

https://www.cisecurity.org/white-papers/cis-controls-cloud-companion-guide/

- Cloud Computing Security Considerations

Introduction

This document provides a list of thought-provoking questions to help organisations understand the risks that need to be considered when using cloud computing.

Developing a risk assessment helps senior business representatives make an informed decision as to whether cloud computing is currently suitable to meet their business goals with an acceptable level of risk.

The questions in this document address the following topics:

availability of data and business functionality

protecting data from unauthorised access

handling security incidents.

In particular, the risk assessment needs to seriously consider the potential risks involved in handing over control of your data to an external vendor. Risks may increase if the vendor operates offshore.

Overview of cloud computing

NIST specify five characteristics of cloud computing:

On-demand self-service

Broad network access

Resource pooling

Rapid elasticity

Pay-per-use measured service

There are three cloud service models:

Infrastructure as a Service (IaaS)

Platform as a Service (PaaS)

Software as a Service (SaaS)

There are four cloud deployment models:

Public cloud

Private cloud

Community cloud

Hybrid cloud

Risk management

A risk management process must be used to balance the benefits of cloud computing with the security risks associated with the organisation handing over control to a vendor.

A risk assessment should consider whether the organisation is willing to trust their reputation, business continuity, and data to a vendor that may insecurely transmit, store and process the organisation’s data.

The contract between a vendor and their customer must address mitigations to governance and security risks, and cover who has access to the customer’s data and the security measures used to protect the customer’s data.

Vendor’s responses to important security considerations must be captured in the Service Level Agreement or other contract; otherwise the customer only has vendor promises and marketing claims that can be hard to verify and may be unenforceable.

In some cases it may be impractical or impossible for a customer to personally verify whether the vendor is adhering to the contract, requiring the customer to rely on third party audits including certifications instead of simply putting blind faith in the vendor.

For vendors advertising ISO/IEC 27001 compliance, customers should ask to review a copy of the Statement of Applicability, a copy of the latest external auditor’s report, and the results of recent internal audits.

Overview of cloud computing security considerations

Maintaining availability and business functionality

Protecting data from unauthorised access by a third party

Protecting data from unauthorised access by the vendor’s customers

Protecting data from unauthorised access by rogue vendor employees

Handling security incidents

Detailed cloud computing security considerations

Maintaining availability and business functionality

Answers to the following questions can reveal mitigations to help manage the risk of business functionality being negatively impacted by the vendor’s cloud services becoming unavailable

Protecting data from unauthorised access by a third party

Answers to the following questions can reveal mitigations to help manage the risk of unauthorised access to data by a third party

https://www.cyber.gov.au/sites/default/files/2019-05/PROTECT%20-%20Cloud%20Computing%20Security%20Considerations%20%28April%202019%29_0.pdf

- Data at rest is data that is not actively moving from device to device or network to network such as data stored on a hard drive, laptop, flash drive, or archived/stored in some other way. Data protection at rest aims to secure inactive data stored on any device or network. While data at rest is sometimes considered to be less vulnerable than data in transit, attackers often find data at rest a more valuable target than data in motion.

Data in transit, or data in motion, is data actively moving from one location to another such as across the internet or through a private network. Data protection in transit is the protection of this data while it’s traveling from network to network or being transferred from a local storage device to a cloud storage device. Wherever data is moving, effective data protection measures for in-transit data are critical, as data is often considered less secure while in motion.

https://digitalguardian.com/blog/data-protection-data-in-transit-vs-data-at-rest
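As a minimal sketch of protecting data in transit, assuming a Python client and the standard-library `ssl` module, the snippet below builds a TLS context that verifies the server certificate and hostname. The helper name `make_client_tls_context` and the TLS 1.2 floor are illustrative choices, not something the sources above prescribe.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks on."""
    ctx = ssl.create_default_context()            # loads the system CA certificates
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx


ctx = make_client_tls_context()
```

The context can then be passed to a client such as `http.client.HTTPSConnection(host, context=ctx)`.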

- Data in use is an information technology term referring to active data which is stored in a non-persistent digital state typically in computer random-access memory (RAM), CPU caches, or CPU registers.

https://en.wikipedia.org/wiki/Data_in_use

- Data in transit falls into two categories: information that flows over a public or untrusted network such as the Internet, and data that flows within the confines of a private network such as a corporate or enterprise Local Area Network (LAN).

https://en.wikipedia.org/wiki/Data_in_transit

- Data at rest in information technology means inactive data that is stored physically in any digital form (e.g. databases, data warehouses, spreadsheets, archives, tapes, off-site backups, mobile devices etc.)

Concerns about data at rest

Encryption

Data encryption, which prevents data visibility in the event of its unauthorized access or theft, is commonly used to protect data in motion and increasingly promoted for protecting data at rest.

Tokenization

Tokenization is a non-mathematical approach to protecting data at rest that replaces sensitive data with non-sensitive substitutes, referred to as tokens, which have no extrinsic or exploitable meaning or value.

Lower processing and storage requirements make tokenization an ideal method of securing data at rest in systems that manage large volumes of data.
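The idea of tokenization can be sketched as follows. This is a toy, in-memory illustration only: the `TokenVault` class and the `tok_` prefix are hypothetical, and a real token vault would be a hardened, access-controlled service rather than a Python dictionary.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical, for illustration only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, not derived from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        # Only a lookup in the vault recovers the original value.
        return self._token_to_value[token]
```

Because the token is random rather than computed from the sensitive value, a system that stores only tokens holds nothing an attacker can reverse without access to the vault.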

Federation

An example of this would be a European organization which stores its archived data off-site in the USA.

https://en.wikipedia.org/wiki/Data_at_rest

- Data in transit is also referred to as data in motion, and data in flight

https://en.wikipedia.org/wiki/Data_in_transit

- Securing data at rest — access control, encryption and data retention policies can protect archived organizational data.

Securing data in use — some DLP systems can monitor and flag unauthorized activities that users may intentionally or unintentionally perform in their interactions with data.

https://www.imperva.com/learn/data-security/data-loss-prevention-dlp/

- Data Security vs. Data Protection

Data security describes the practical protection of data through technical security and monitoring measures. In this understanding, "data" means all data assigned to the domain of a person or organization.

The existing data must be protected against various threats, taking into consideration the classical protection goals of integrity, availability, confidentiality, and assignability.

In contrast to data security, data protection is more about theoretical and legal aspects, in particular the protection of personal data against misuse.

Personal data means not just personal information, but data that can lead to the direct or indirect identification of an individual (also called a "natural person"). The principle of informational self-determination is decisive: It's not just about protecting an individual's data; it's about letting them decide who owns and uses their information. However, technical data security is an important data protection instrument, in particular the confidentiality of personal data.

Although data security protects data, data protection is a legal construct codified in the GDPR that bestows informational self-determination. The role of the data protection officer and the IT security officer should therefore preferably not be filled by the same employee.

http://www.admin-magazine.com/Articles/Data-Security-vs.-Data-Protection?utm_source=ADMIN+Newsletter&utm_campaign=HPC_Update_129_2019-10-17_High-Performance_Python_%E2%80%93_Distributed_Python&utm_medium=email

- A cloud access security broker (CASB) (sometimes pronounced cas-bee) is on-premises or cloud based software that sits between cloud service users and cloud applications, and monitors all activity and enforces security policies.

https://en.wikipedia.org/wiki/Cloud_access_security_broker

Six Top CASB Vendors

Between users accessing unapproved cloud services and mishandling data even in approved ones, the cloud is one of the biggest challenges security teams face

A CASB helps IT departments monitor cloud service usage and implement centralized controls to ensure that cloud services are used securely.

What does a CASB do?

CASBs provide a solution to many of the security problems posed by the use of cloud services, both sanctioned and unsanctioned. They do this by interposing themselves between end users (whether they are on desktops on the corporate network or on mobile devices connecting using unknown networks) and the cloud services they use, or by harnessing the power of the cloud provider's own API.

CASBs should offer organizations:

Visibility into cloud usage throughout the organization

A way to ensure and prove compliance with all regulatory requirements

A way to ensure that data is stored securely in the cloud

A satisfactory level of threat protection to ensure that the security risk of using the cloud is acceptable

In practice this means that at a bare minimum, CASBs need to be able to:

Provide the IT department with visibility into sanctioned and unsanctioned cloud service usage

Provide a consolidated view of all cloud services being used by the organization, and the users who access them from any device or location

Control access to cloud services

Help administrators ensure that the organization complies with all relevant regulations and standards (such as data residency) when using cloud services

Allow IT departments to set and enforce security policies on cloud usage and the use of corporate data in cloud services, and apply them through audit, alert, block, quarantine, delete and other controls

Enable administrators to encrypt or tokenize data stored in the cloud

Provide data loss prevention (DLP) capabilities, or interface with existing corporate DLP systems

Provide access controls to prevent unauthorized employees, devices or applications from using cloud services

Offer threat prevention methods such as behavioral analytics, anti-malware scanning and threat intelligence.
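The policy-enforcement capability in the list above (audit, alert, block, quarantine) can be sketched as a small decision function. Everything here is hypothetical: the `CloudEvent` fields, the `SANCTIONED_APPS` allow-list, and the rule order are assumptions made for illustration and do not describe any particular CASB product.

```python
from dataclasses import dataclass

SANCTIONED_APPS = {"corp-drive", "corp-mail"}  # hypothetical allow-list


@dataclass
class CloudEvent:
    user: str
    app: str
    action: str               # e.g. "upload", "download", "share"
    contains_sensitive: bool  # e.g. the result of a DLP scan
    managed_device: bool


def decide(event: CloudEvent) -> str:
    """Return one of 'block', 'quarantine', 'alert', 'audit' for an event."""
    if event.app not in SANCTIONED_APPS:
        return "block"        # unsanctioned cloud service
    if event.contains_sensitive and event.action == "share":
        return "quarantine"   # hold sensitive data before it leaves
    if event.contains_sensitive and not event.managed_device:
        return "alert"        # sensitive data touched from an unmanaged device
    return "audit"            # allowed, but still logged
```

Ordering the rules from most to least restrictive keeps the outcome predictable when several conditions match the same event.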

How CASBs work

CASBs may run in a corporate data center or in a hybrid mode that involves the data center and the cloud, but the majority of companies choose a CASB that operates exclusively from the cloud, unless regulatory or data sovereignty considerations require an on-premises solution.

The three key ways that a CASB can be deployed are as a reverse proxy, a forward proxy, or in an "API mode."

For example, reverse proxies can handle user-owned devices without the need for configuration changes or certificates to be installed, but they do not handle unsanctioned cloud usage well.

Forward proxies direct all traffic from managed endpoints through the CASB, including traffic to unsanctioned cloud services, but user-owned devices may not be subject to management. Both types of proxy become a single point of failure that may leave the use of all cloud services vulnerable to a DDoS attack.

API Mode works well with user-owned devices and allows companies to perform functions such as log telemetry, policy visibility and control and data security inspection functions on all the data at rest in the cloud service. Since a CASB working in API mode is not in the data path to the cloud, it is not a single point of failure.

https://www.esecurityplanet.com/products/top-casb-vendors.html

What’s SOM, exactly? It addresses the new operational admin and security challenges IT is facing when managing multiple SaaS applications.

SOM helps overcome common challenges in SaaS environments, such as repetitive, manual processes; accidental misconfiguration of application settings; massive data sprawl; sensitive data exposure; insider threats, and more.

1. Limit the number of super admins allowed in your environment

2. Secure crucial groups' privacy settings and lock down sensitive data

In some SaaS applications, even end users can configure who can post, join, and view messages in a group.

3. Gain visibility into license usage across applications and reclaim unused licenses

4. Find who is automatically forwarding emails outside of your domain and disable it

5. Locate publicly shared files and revoke their public sharing links

6. Connect multiple instances of SaaS applications

7. Minimize security risk by ensuring that external users don’t have data access forever
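Items 4 and 5 in the list above lend themselves to simple automated checks. The sketch below assumes the forwarding rules and file metadata have already been exported from the SaaS application into plain Python structures; the field names (`sharing`, `name`) and both function names are hypothetical, and no real SaaS API is being called.

```python
def external_forwarders(rules, own_domain):
    """Return users whose auto-forward address is outside own_domain.

    rules maps a user name to the address their mail is forwarded to.
    """
    flagged = []
    for user, forward_to in rules.items():
        domain = forward_to.rsplit("@", 1)[-1].lower()
        if domain != own_domain.lower():
            flagged.append(user)
    return sorted(flagged)


def public_files(files):
    """Return the names of files whose sharing setting is 'public'."""
    return [f["name"] for f in files if f.get("sharing") == "public"]
```

Note that this exact-match comparison treats subdomains such as `mail.example.com` as external; whether that is desirable depends on the organization.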

can be an effective way to manage authentication and encryption across cloud and on-premise systems.

CASB definition

Think of cloud access security brokers (CASBs) as central data authentication and encryption hubs for everything your enterprise uses, both cloud and on-premises and accessed by all endpoints, including private smartphones and tablets. Before the CASB era, enterprise security managers had no visibility into how all their data was protected.

Enterprises needed a way to deliver consistent security across multiple clouds and protect everyone using their data. CASBs arrived to help give organizations much deeper visibility into cloud and software-as-a-service (SaaS) usage, down to individual file names and data elements.

https://www.csoonline.com/article/3104981/what-is-a-cloud-access-security-broker-and-why-do-i-need-one.html

Comparing CASB and IDaaS shows that CASB solutions offer most IDaaS capabilities as part of their cloud protection suites. In fact, CASBs can offer better features like the following:

Logging and Reporting: CASBs log and report on every single transaction, such as login, logout, and everything that happens in between, whereas IDaaS can report on login and logout events, but nothing during the session.

Multi-Factor Authentication: CASB solutions can trigger MFA at any time, including mid-session, if they notice any suspicious content or activity, whereas IDaaS can trigger MFA only at the beginning of the session.
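The mid-session MFA behaviour described above can be sketched as a check that runs on every event in a session rather than only at login. The `Session` fields, the two triggers (a country change and a large download volume), and the default threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Session:
    user: str
    login_country: str
    mfa_verified_at_login: bool


def needs_step_up(session, event_country, bytes_downloaded, limit=500_000_000):
    """Decide whether to re-prompt for MFA in the middle of a session."""
    if event_country != session.login_country:
        return True   # activity from a different country than the login
    if bytes_downloaded > limit:
        return True   # unusually large download volume
    return False
```

A login-only check would evaluate conditions like these once; evaluating them per event is what allows a re-prompt partway through the session.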

CASB (Cloud Access Security Brokers)

Cloud Access Security Brokers provide their cloud security solutions for both on-premises and cloud-based infrastructure. These CASB solutions include features like single sign-on (SSO), multi-factor authentication (MFA) and automated provisioning for apps and devices.

https://www.cloudcodes.com/blog/casb-vs-idaas-cloud-security.html

A Cloud Access Security Broker (CASB) is a cloud security solution designed to address the deficiencies of legacy network security models. In the past, organizations relied on a perimeter-focused security model where an array of cybersecurity defenses were deployed at the perimeter of the enterprise local area network (LAN). By forcing all traffic to flow through this perimeter, it was possible to inspect it and attempt to block threats from entering the network and sensitive data from leaving it.

A growing percentage of an organization’s resources are located outside of the enterprise LAN and the defenses that secure it.

CASB solutions help to bring the same level of protection to the cloud. Whether implemented as a physical appliance or under a Software as a Service (SaaS) model, they provide network visibility and threat protection for an organization’s cloud applications.

Pros and Cons of CASB

A CASB solution provides limited inline threat protection capabilities and can be combined with other solutions to provide an organization’s cloud infrastructure with the protection that it requires.

However, the major limitation of CASB is this need to integrate it with other standalone security solutions. Every cybersecurity solution that an organization needs to acquire, deploy, monitor, and maintain increases security complexity and decreases the efficiency of the security team.

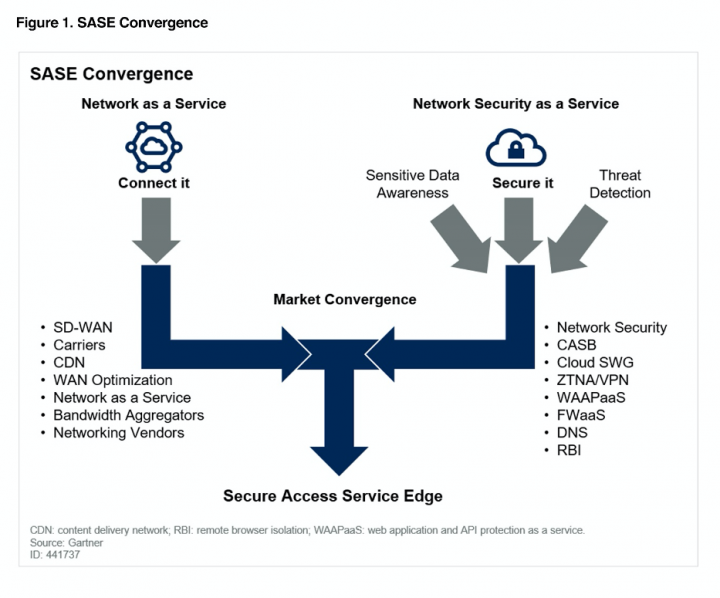

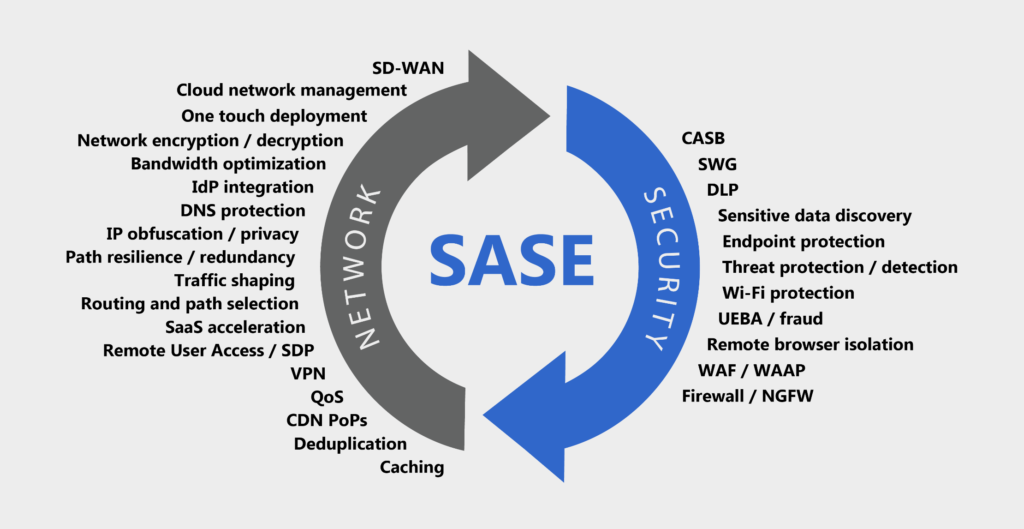

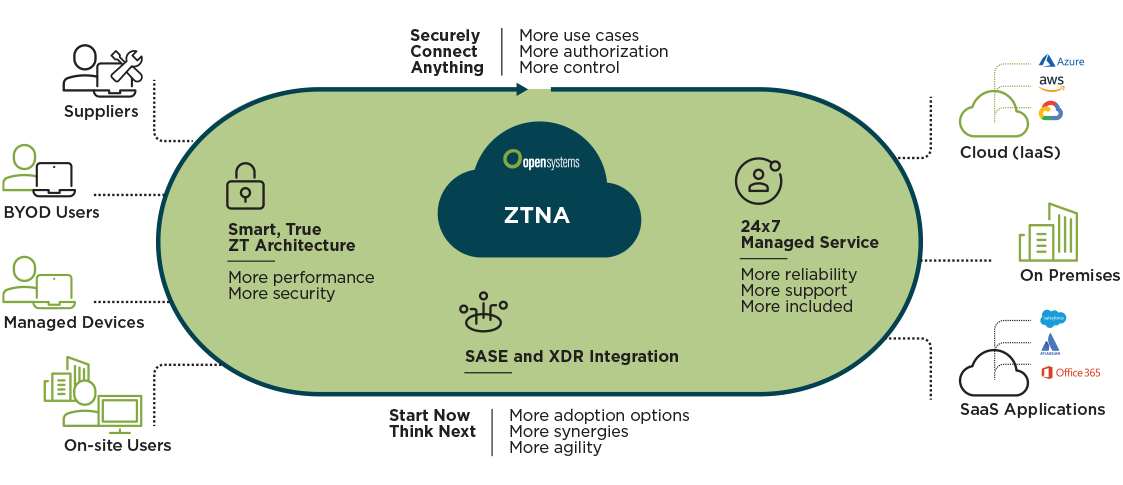

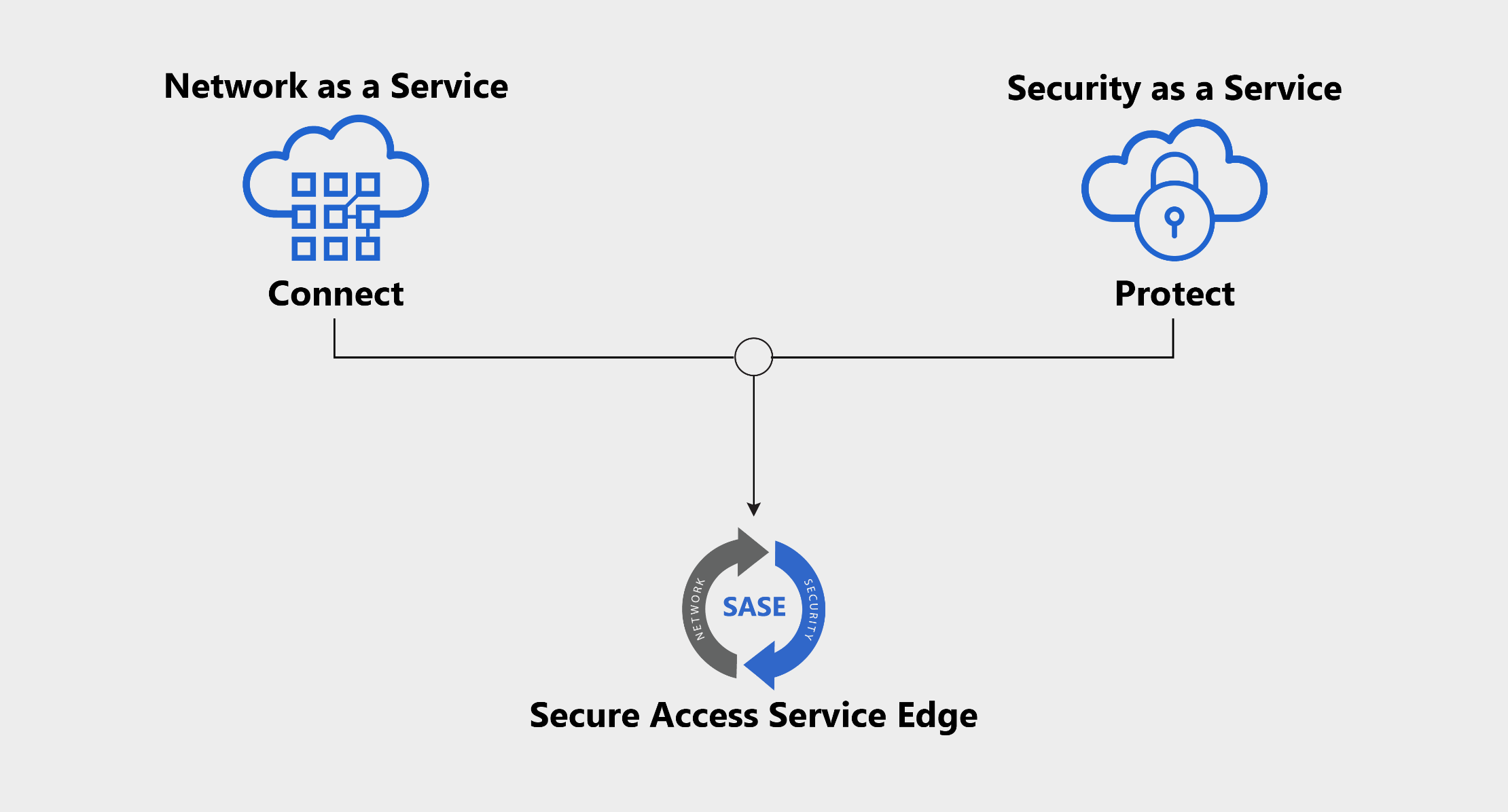

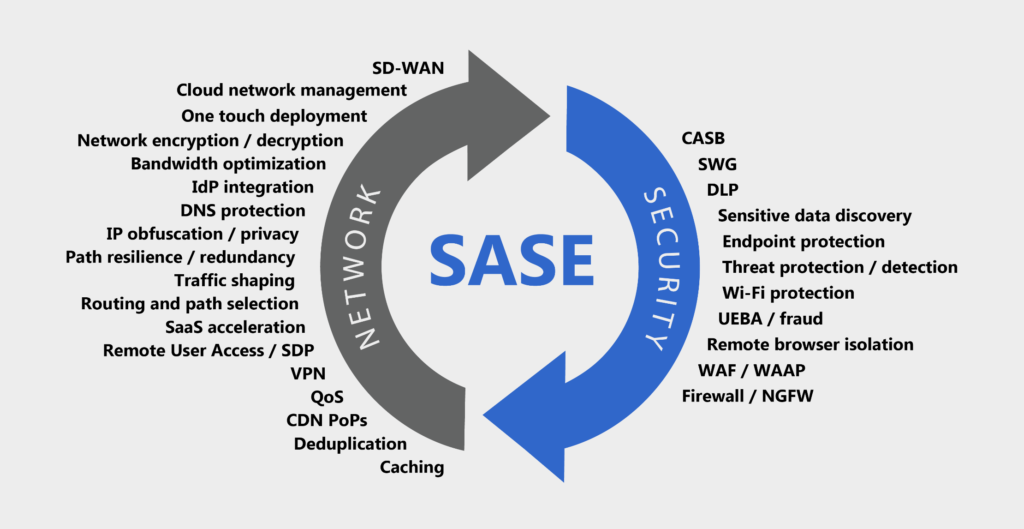

What is SASE?

Software-Defined WAN (SD-WAN) is a networking solution designed to provide high-performance and reliable network connectivity by aggregating multiple transport links. Its ability to optimally route traffic between SD-WAN appliances makes it an ideal choice for connecting organizations to their multiple cloud-based deployments.

Secure Access Service Edge (SASE) integrates SD-WAN functionality with a full network security stack and deploys the result as a cloud-native virtual appliance.

Pros and Cons of SASE

SASE is a complete WAN infrastructure solution, meaning that it cannot be just slotted into place like CASB. Implementing SASE may require a network redesign and retiring legacy networking and security solutions. However, the efficiency and security benefits of SASE can outweigh the costs associated with deploying it.

The Verdict on SASE vs CASB

CASB is designed to solve the challenges of protecting an organization’s cloud applications.

While the cloud does not fit into the traditional perimeter-focused security model used in the past, CASB extends some of the same protections to an organization’s cloud-based deployment.

SASE provides a fully integrated security stack, including CASB. This goes beyond providing the security features that CASB includes to incorporate the optimized network routing offered by SD-WAN, the security of a next-generation firewall (NGFW), and more.

The main difference between SASE and CASB is the level of security integration available within the solution and the assets protected by the solution.

CASB secures SaaS applications and can be an add-on to a security stack where the organization has already invested in and deployed the other necessary security solutions.

SASE, on the other hand, offers a fully-integrated WAN networking and security solution that connects remote users and branch offices to cloud and corporate applications and the Internet.

Why Both SASE and CASB Can Be Beneficial To Your Organization Depending On Your Business Needs

A standalone CASB and a SASE solution both provide the CASB functionality required for cloud security.

the “right choice” can depend on an organization’s unique situation and business needs.

In general, the integration and optimization provided by SASE is likely the better option since it simplifies security and maximizes the efficiency of an organization’s security team

However, a standalone CASB solution can be more easily slotted into an organization’s existing security architecture.

https://www.checkpoint.com/cyber-hub/network-security/what-is-secure-access-service-edge-sase/sase-vs-casb/

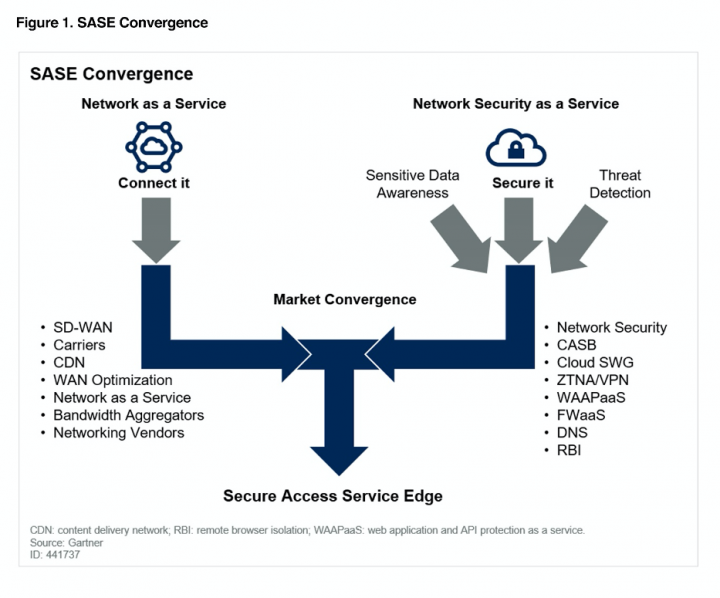

- Secure Access Service Edge (SASE), defined by Gartner, is a security framework prescribing the convergence of security and network connectivity technologies into a single cloud-delivered platform to enable secure and fast cloud transformation. SASE’s convergence of networking and network security meets the challenges of digital business transformation, edge computing, and workforce mobility.

As organizations seek to accelerate growth through use of the cloud, more data, users, devices, applications, and services are used outside the traditional enterprise premises, which means the enterprise perimeter is no longer a location

Despite this shift outside the perimeter, network architectures are still designed such that everything must pass through a network perimeter and then back out. Users, regardless of where they are, must still channel back to the corporate network often using expensive and inefficient technologies only to go back to the outside world again more often

https://www.mcafee.com/enterprise/en-us/security-awareness/cloud/what-is-sase.html

Secure access service edge, or SASE, is a cloud-based IT model that bundles software-defined networking with network security functions and delivers them from a single service provider.

A SASE approach offers better control over and visibility into the users, traffic, and data accessing a corporate network — vital capabilities for modern, globally distributed organizations. Networks built with SASE are flexible and scalable, able to connect globally distributed employees and offices across any location and via any device.

https://www.cloudflare.com/es-la/learning/access-management/what-is-sase/

- Gartner coined the CASB term to describe the security solutions designed to address the challenges created by shifting workloads to the cloud

In its report titled “The Future of Network Security in the Cloud”, Gartner coined another term, SASE (secure access service edge), that is effectively a superset of CASB, delivering cloud security along with other network and security services.

What is CASB

CASB came about because the cloud introduced a dynamic threat vector that traditional security solutions weren’t ideal for. The old “castle and moat” approach to IT security begins to fall apart when business-critical apps and data reside in the cloud

CASB According to Gartner

The four CASB pillars are:

Threat protection.

Each new service is another attack vector. CASBs help address these threats with features such as SWG and anti-malware engines dedicated to cloud services.

Data security

The implementation of security features such as tokenization, access controls and DLP (data loss prevention) help enterprises protect the integrity of their data. By providing data security for cloud services, CASBs provide a way for enterprises to shift to the cloud while mitigating the risk of critical data becoming compromised.

Compliance.

Remaining compliant to standards such as PCI-DSS or HIPAA is complicated enough on-premises. Things become even more complex when data security and data sovereignty in the cloud is thrown into the mix. CASBs help enterprises achieve and maintain compliance with cloud services.

Visibility.

Running workloads across multiple cloud platforms can limit network visibility as each vendor may have different processes for logging, auditing, and monitoring. This is particularly true when users access cloud services that aren’t explicitly authorized by IT. CASBs provide enterprises with a means to document and track activity across multiple disparate cloud platforms.

Where CASB comes up short

With CASB, enterprises can address most cloud security challenges. However, there are still network and security requirements that IT needs to address. Coupling CASB with point solutions that deliver functionality for SD-WAN, ZTNA, and WAN optimization can help meet those requirements, but also drive up costs and complexity. SASE solves this problem by delivering all the functionality of CASB along with those other network and security services in a single holistic network fabric.

How SASE delivers CASB and more

SASE achieves this by way of a cloud native architecture that abstracts away the complexities of multiple point solutions.

With all the major network and security services converging into a single cloud native multi-tenant platform, enterprises gain increased security, enhanced performance, less network complexity, and reduced costs.

true SASE is:

Based on identity-driven security

Cloud native

Able to support all network edges

Capable of providing performant network connectivity at a global scale

https://www.catonetworks.com/sase/how-sase-offers-casb-and-so-much-more/

- Secure Access Service Edge (SASE) is an emerging framework for the convergence of networking and network security services within a global cloud-based platform

Conventional security measures presumed that applications and users would be inside the network perimeter, which is no longer true. Corporate data is moving to the cloud, employees are increasingly working remote, and digital transformation initiatives require IT organizations to be nimble to capitalize on new business opportunities.

As a result, the traditional network perimeter is dissolving, and new models for access controls, data protection and threat protection are necessary.

In light of these changes, organizations are finding that their existing collection of standalone point products, such as firewalls, secure web gateways, DLP and CASB, are no longer applicable in a cloud-first world.

What does a SASE architecture look like?

Secure Access Service Edge, or SASE, unifies networking and security services in a cloud-delivered architecture to protect users, applications and data everywhere.

Given that users and applications are no longer on a corporate network, security measures can’t depend on conventional hardware appliances at the network edge.

Instead, SASE promises to deliver the necessary networking and security as cloud-delivered services.

a SASE model eliminates perimeter-based appliances and legacy solutions.

Instead of delivering the traffic to an appliance for security, users connect to the SASE cloud service to safely use applications and data with the consistent enforcement of security policy.

SASE includes the following technologies

Cloud-native microservices in a single platform architecture

Ability to inspect SSL/TLS encrypted traffic at cloud scale

Inline proxy capable of decoding cloud and web traffic (NG SWG)

Firewall and intrusion protection for all ports and protocols (FWaaS)

Managed cloud service API integration for data-at-rest (CASB)

Public cloud IaaS continuous security assessment (CSPM)

Advanced data protection for data-in-motion and at-rest (DLP)

Advanced threat protection, including AI/ML, UEBA, sandboxing, etc. (ATP)

Threat intelligence sharing and integration with EPP/EDR, SIEM, and SOAR

Software defined perimeter with zero trust network access, replacing legacy VPNs (SDP, ZTNA)

Protection for the branch, including support for branch networking initiatives such as SD-WAN

Carrier-grade, hyper scale network infrastructure with a global POP footprint

https://www.netskope.com/what-is-sase

- Is CASB Alone Enough? Long Live SASE

SaaS Adoption Introduces Security Risks

Pros

Quick deployment - As a software solution, the installation and configuration of SaaS apps are quick and painless. By utilizing the cloud, the apps are easily accessible to all users.

Simple maintenance - Instead of having your IT department manually upgrade the app, that responsibility falls to the SaaS vendors, saving you IT resources

Scalable - Since SaaS apps live in the cloud, they are scalable, no matter how small or large your organization is. Remote users are able to access the apps no matter their location.

Cons

Anyone with a credit card can start using almost any cloud service. They are typically set up without IT and security oversight. Users are able to access the application from every coffee shop and any device.

Maintenance isn’t always for increasing uptime. SaaS vendors do an amazing job releasing new features and functionality, but this frequent pace of change also makes it difficult for IT and security teams to keep tabs on configurations and risk.

Most tier-1 SaaS apps are designed to be infinitely scalable in theory. The downside is that unsanctioned apps will grow virally in your organization and the SaaS provider will gladly pass along the bill.

So Is CASB Enough?

The legacy CASB-centric way to secure SaaS applications uses a standalone proxy designed to perform a limited amount of inline inspection capabilities. There are different deployment modes by which a CASB can deliver its functions, including network inline, SAML proxy, agent and API (introspection). And while CASB can also be used for API-based controls, it’s often with a limited set of ties to contextual policies on which specific users or devices have access to particular data. Despite multiple options for deployment, there are shortcomings with traditional implementation methods and many enterprise CASB projects have struggled to get off the ground because of it.

Secure SaaS Requires SASE

SASE simplifies both networking and security, replacing conventional point products. Firewalls, proxies, secure web gateways, remote access VPNs, CASBs, DNS security and so on are unified into one cloud-based infrastructure.

https://www.paloaltonetworks.com/blog/2019/11/cloud-casb-sase/

CASB Vs IDaaS in Terms of Cloud Security

The True Unified Identity Platform for CASB Solutions

Cloud Access Security Brokers - CASB

Cloud Access Security Broker (CASB) - The purpose of a forward proxy

The Critical Role of Cloud Access Security Brokers (CASBs) – a Mini-Series [Part 2]

Cloud Access Security Broker (CASB) Model: A Simple Explanation for My 5 Year Old Niece

SafeConnect Software Defined Perimeter (SDP)

Software-Defined Perimeter Architecture Guide Preview: Part 2

Zero Trust Security Architectures - Software Defined Perimeter

Zero Trust Network, Software-Defined Perimeter, CARTA and BeyondCorp

Software-Defined Perimeter (SDP) Market Global Competitive Analysis and Overview 2019 to 2024

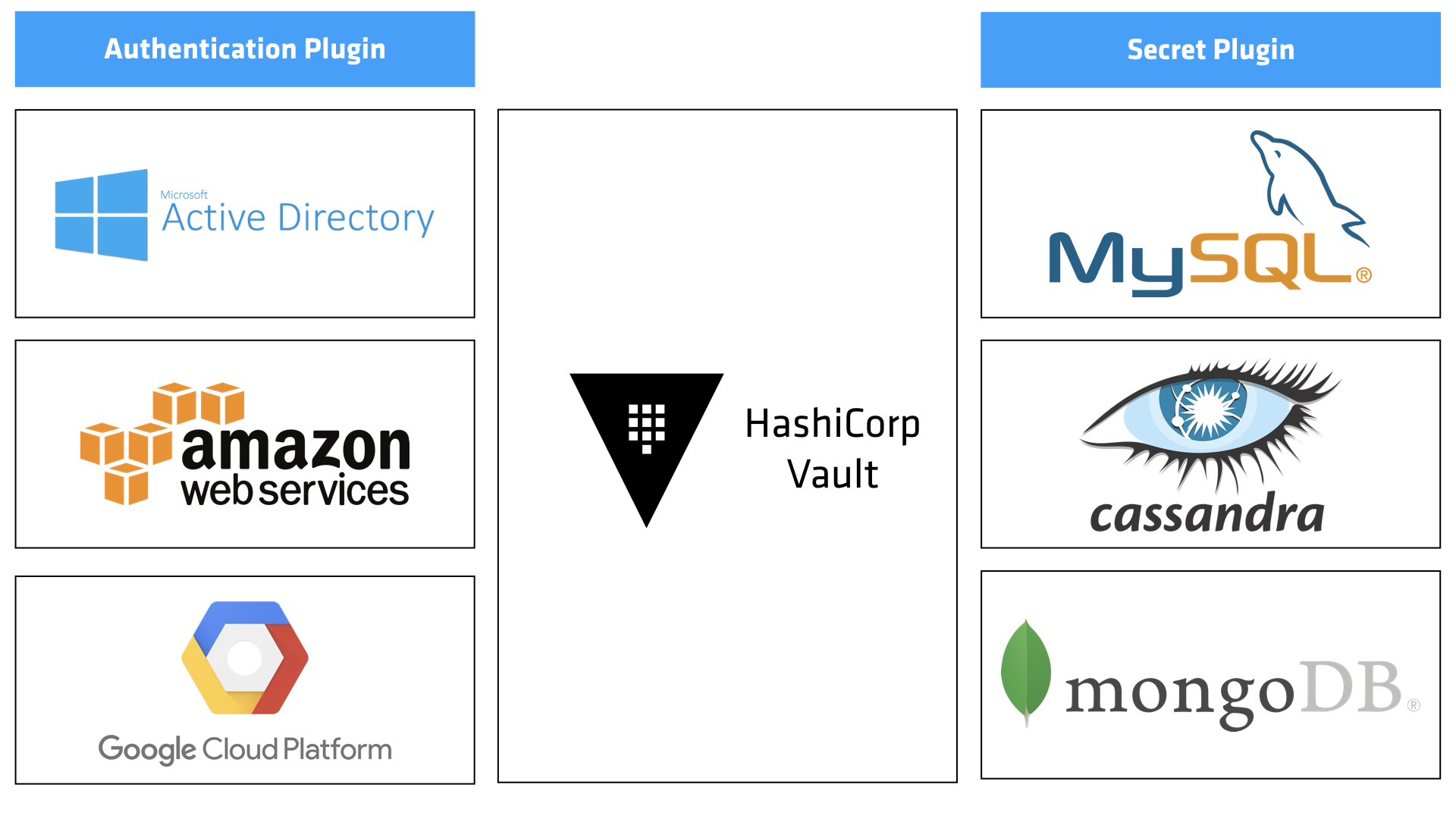

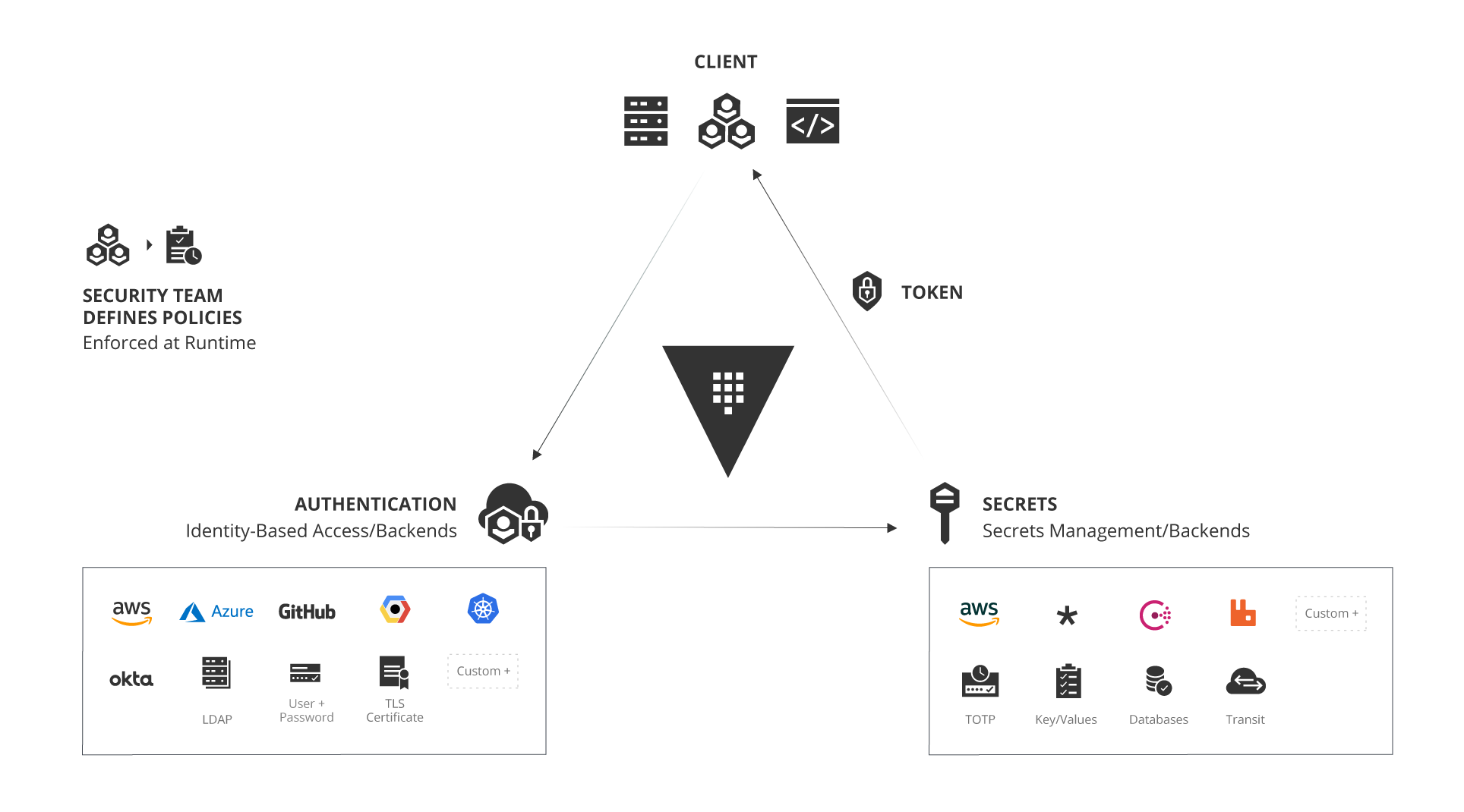

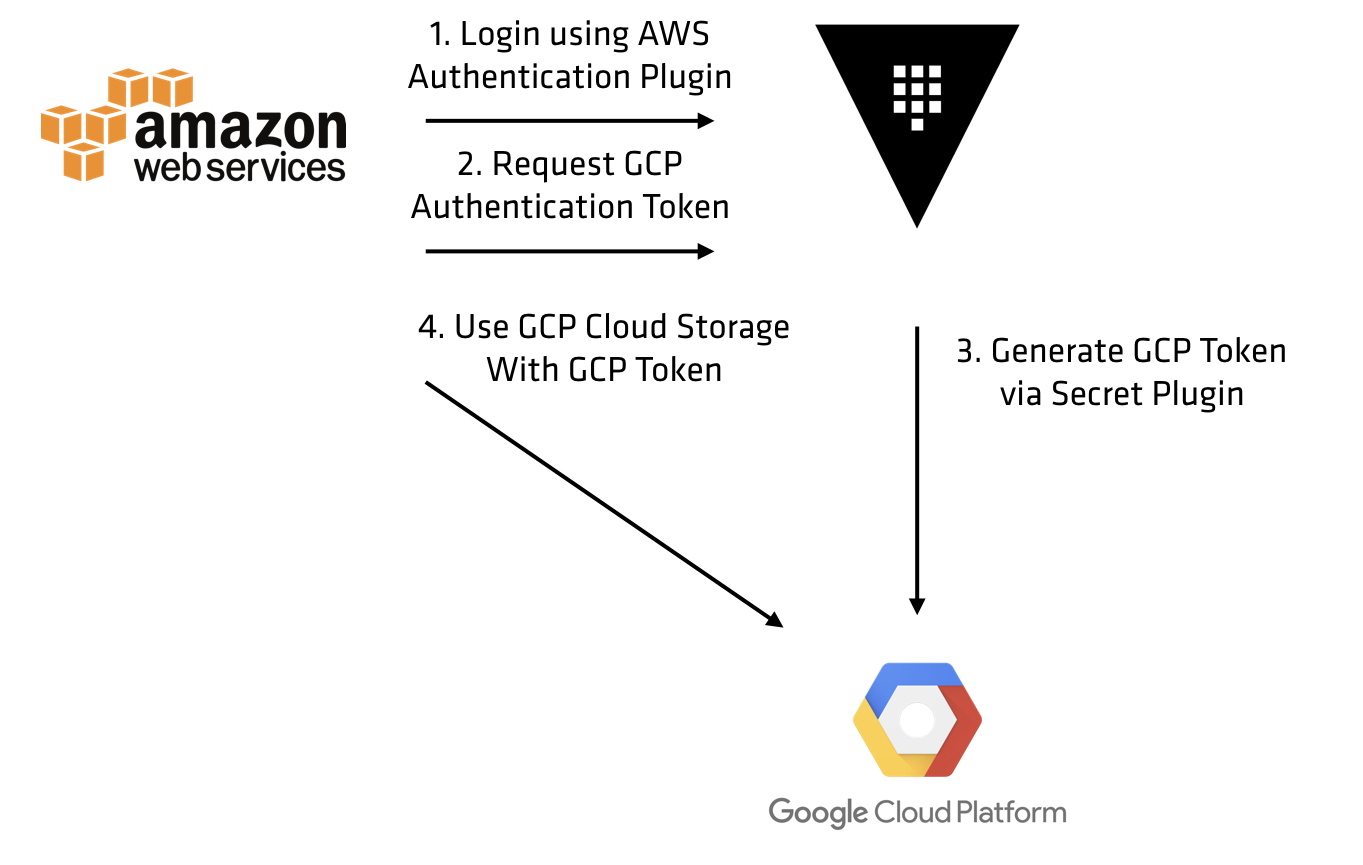

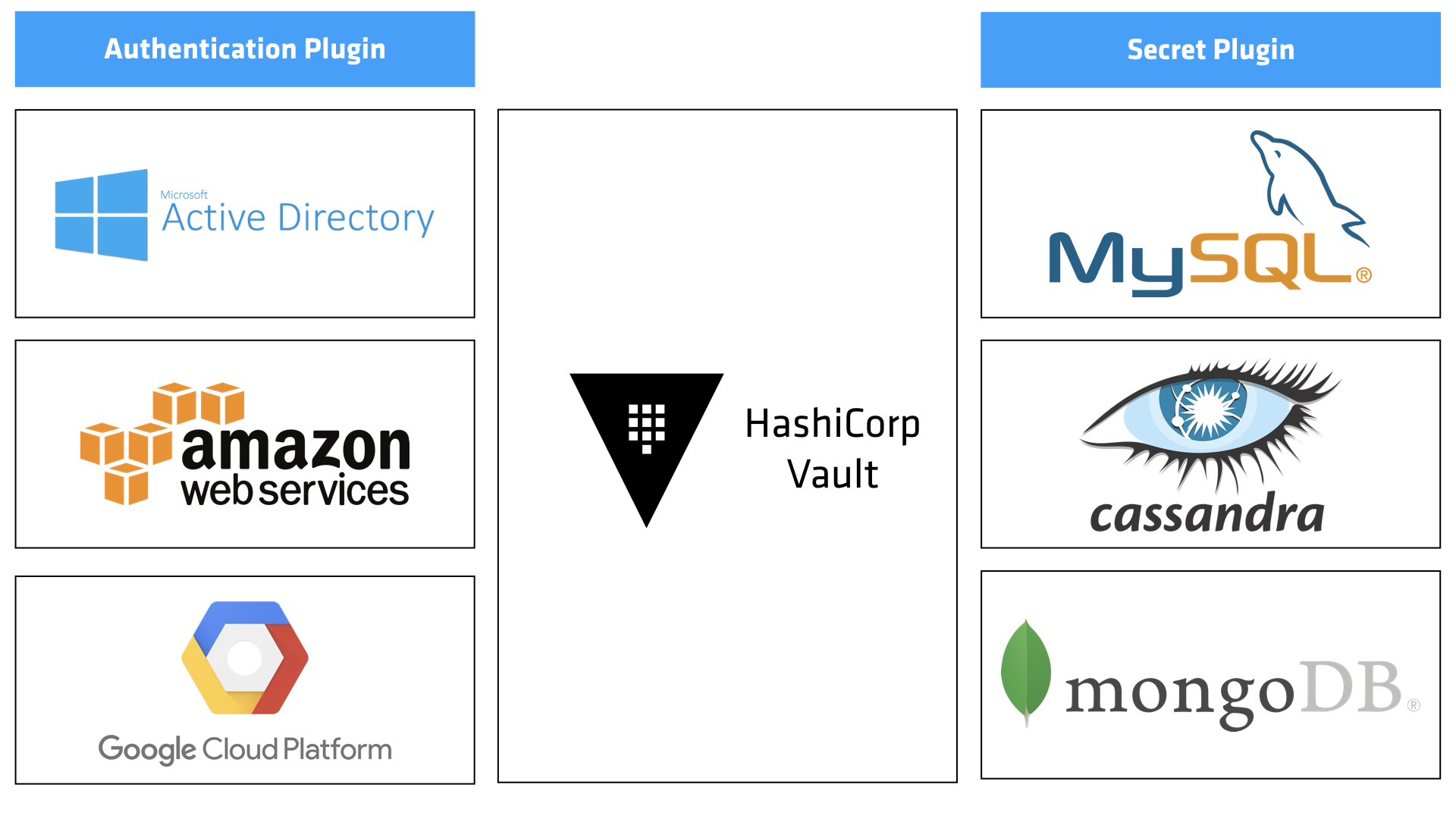

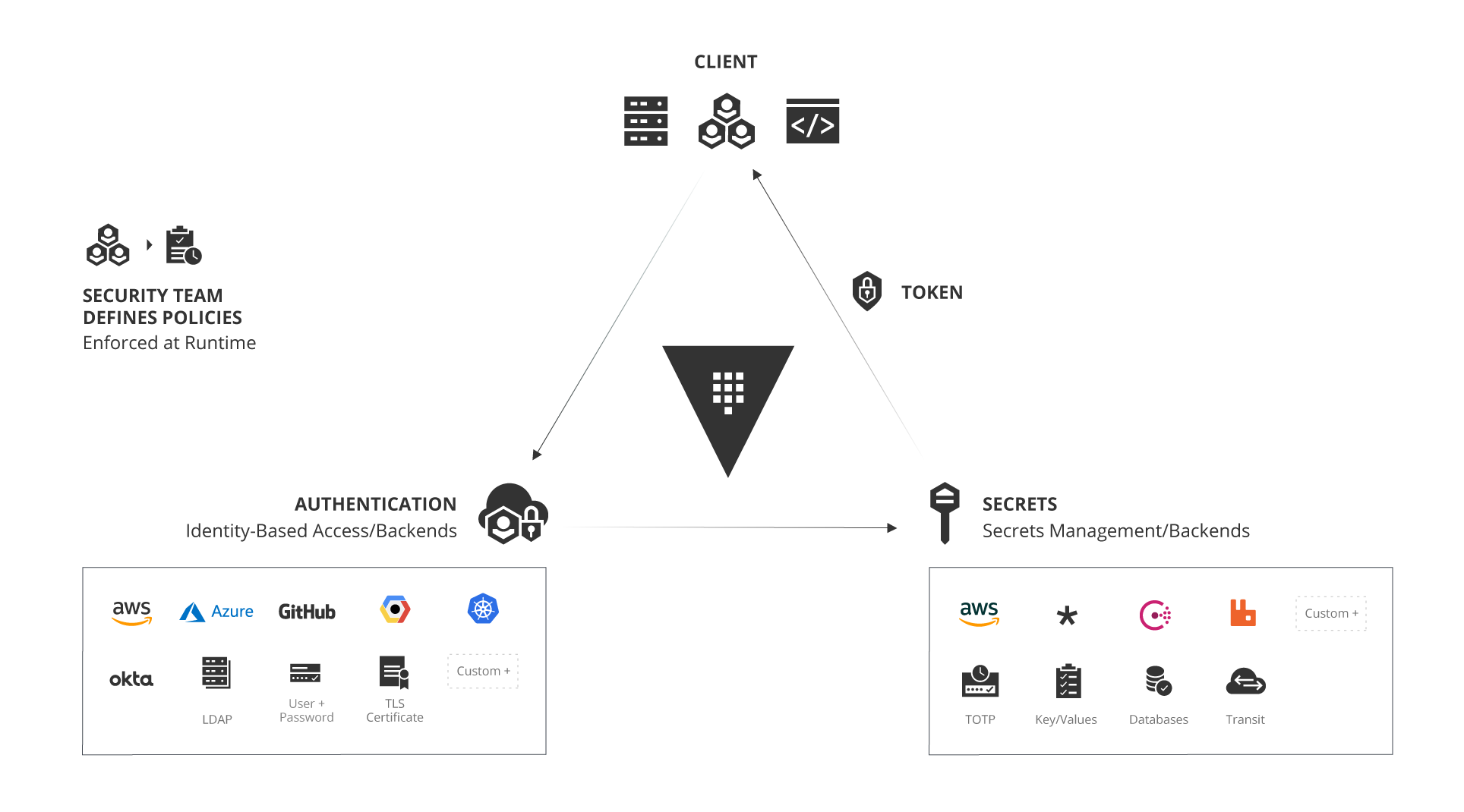

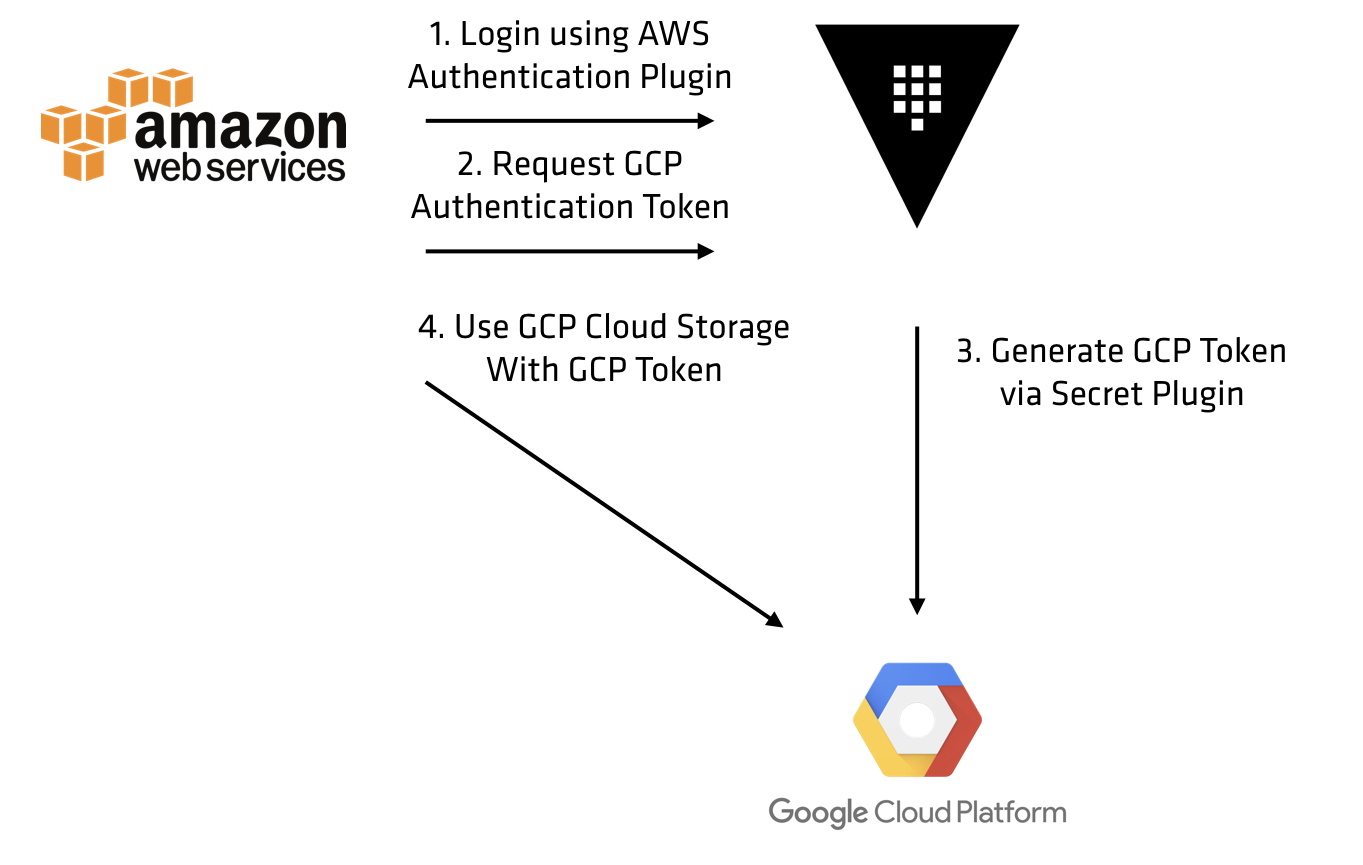

- Cloud architectures and DevOps principles necessitate systems that are lightweight, loosely-coupled and extensible, but security remains siloed, implemented outside the development lifecycle and most often delivered via proprietary, “black-box” products.

Application-centric: The logic went: if I secure the host, then the app is safe. But in a world of distributed apps that are dynamically scheduled on ephemeral compute building blocks, you likely don’t even know where your app is running, so how can you secure it?

Security and policy must be baked into the application. It’s no longer sufficient to secure the network and the endpoint, rather it’s the workload itself that must be secured.

Developer-driven: If security is to be application-centric it must be 1) integrated into the application lifecycle and 2) implemented and managed like programmable infrastructure, which implies that security must scale with your application and your cloud and be open and extensible. Tools like HashiCorp’s Vault offer a glimpse of the future.

Built inside-out, not outside-in: Building security inside-out necessitates prioritizing a new operational toolchain that enables continuous monitoring and testing, policy-driven controls and fine-grained authorization and access management. Most importantly, it requires a cognitive shift away from prevention and towards control and response.

https://thenewstack.io/the-devopsification-of-security/

- RSA SecurID Access for Cloud Applications

A Single MFA Solution for ALL Your Cloud Apps

https://www.rsa.com/en-us/products/rsa-securid-suite/rsa-securid-access/mfa-for-critical-systems/securing-cloud-applications-with-mfa

If you are already using a federation server or cloud identity provider (IdP), you can easily integrate with Duo so that it automatically secures any application that is connected to it.

Duo’s multi-factor authentication (MFA) solution allows administrators to easily integrate with federation services like Active Directory Federation Services (ADFS) and other cloud IdPs such as OneLogin, Centrify, Okta, Ping, OpenLDAP, Google OIDC, Azure OIDC and SAML IdPs to protect cloud applications.

https://duo.com/product/every-application/supported-applications/cloud-apps

- 10 Steps to Evaluate Cloud Service Providers for FedRAMP Compliance

The Federal Risk and Authorization Management Program (FedRAMP) is a framework that provides a standardized approach to authorizing, monitoring and conducting security assessments on cloud services.

Any accredited federal agency, authorized cloud service provider (CSP) or third-party assessment organization (3PAO) can be associated with FedRAMP.

1. Cloud Risk Assessment

Organizations must categorize the data they plan to store and share in the cloud by type and sensitivity.

You may also want to perform a security assessment to determine whether a public, private or hybrid cloud solution carries more or less risk than simply hosting the data on-premises.

2. Security Policies

The next step is to create a security policy to define the controls and risks associated with the cloud service. This policy should cover which data, services and applications are secure enough to migrate to the cloud.

Work with legal counsel before engaging a CSP to ensure that all internal controls meet the organization’s needs.

3. Encryption

It’s crucial to consider the security of the encryption keys provided by the CSP.

4. Data Backup

A business continuity and disaster recovery plan is even more critical and should be tested periodically to avoid outages.

5. Authentication

Most CSPs require an authentication method that facilitates mutual validation of identities between the organization and provider.

These protocols depend on the secret sharing of information that completes an authentication task, which protects cloud-bound data from man-in-the-middle (MitM), distributed denial-of-service (DDoS) and relay attacks.

Other methods, such as smart cards, strong passwords and multifactor authentication, defend data against brute-force attacks.

Elliptic curve cryptography and steganography help prevent both internal and external impersonation schemes.
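One common second factor in multifactor authentication is a one-time code generated from a shared secret. The sketch below implements the HOTP (RFC 4226) and TOTP (RFC 6238) algorithms using only the Python standard library. It is illustrative rather than production-ready: it omits secret provisioning, clock-drift windows, and rate limiting, and nothing in the source says any particular CSP uses this scheme.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)
```

With the RFC test secret `b"12345678901234567890"`, `hotp(secret, 0)` yields `755224`, matching the published RFC 4226 test vector.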

6. Determine CSP Capabilities

Cloud providers offer a variety of services, such as software-as-a-service (SaaS), platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) offerings.

These common cloud services should be evaluated according to the organization’s cloud security policy and risk assessment.

7. CSP Security Policies and Procedures

This involves obtaining an independent audit report from an accredited assessor.

It is also important to review these procedures to guarantee compliance with other frameworks, such as the International Organization for Standardization (ISO) 27000 series.

8. Legal Implications

CSPs must adhere to global data security and privacy laws, meaning they must disclose any and all breaches to the appropriate government agencies.

9. Data Ownership

Implement continuous local backups to make sure any cloud outages do not cause permanent data loss. Security leaders should insist that the CSP uses end-to-end encryption on data in motion and at rest. Also remember that different jurisdictions can affect the security of data that is stored and/or transmitted in a foreign country.

10. Data Deletion

You must also consider the difficulty of tracing the deletion of encrypted data. Some cloud providers use one-time encryption keys that are subsequently deleted along with the encrypted data, rendering it permanently useless.

https://securityintelligence.com/10-steps-to-evaluate-cloud-service-providers-for-fedramp-compliance/
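The one-time-key deletion described in step 10 is sometimes called crypto-shredding, and the toy sketch below shows why it works. It uses a one-time pad (XOR with a random key as long as the data) purely so that the example needs nothing outside the standard library; a real system would use an authenticated cipher and a key management service. The `ShreddableStore` class is hypothetical.

```python
import secrets


class ShreddableStore:
    """Toy store: each object gets its own one-time key, kept apart from the ciphertext."""

    def __init__(self):
        self._ciphertexts = {}
        self._keys = {}

    def put(self, name, plaintext: bytes):
        key = secrets.token_bytes(len(plaintext))  # one-time pad, as long as the data
        self._keys[name] = key
        self._ciphertexts[name] = bytes(p ^ k for p, k in zip(plaintext, key))

    def get(self, name) -> bytes:
        key = self._keys[name]  # raises KeyError once the key has been shredded
        return bytes(c ^ k for c, k in zip(self._ciphertexts[name], key))

    def shred(self, name):
        # Deleting only the key is enough: leftover ciphertext is unreadable.
        del self._keys[name]
```

The point of the design is that copies of the ciphertext in backups or replicas no longer need to be tracked down once the key is gone.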

- Key Cloud Service Provider (CSP) Documents

Digital Identity Requirements

This document has been developed to provide guidance on Digital Identity requirements in support of achieving and maintaining a security authorization that meets FedRAMP requirements. FedRAMP is following NIST guidance and this document describes how FedRAMP intends to implement it.

Penetration Test Guidance

The purpose of this document is to provide guidelines for organizations on planning and conducting Penetration Testing and analyzing and reporting on findings.

https://www.fedramp.gov./documents/

- Cloud Computing Security Requirements Guide (SRG)

The migration of DoD Applications and Services out of DoD owned and operated data centers to commercial cloud services while maintaining the security of, and the control over, all DoD data IAW DoD policies

https://iase.disa.mil/cloud_security/Documents/u-cloud_computing_srg_v1r1_final.pdf

- The Department of Defense (DoD) Cloud Computing Security Requirements Guide (SRG) provides a standardized assessment and authorization process for cloud service providers (CSPs) to gain a DoD provisional authorization, so that they can serve DoD customers. The AWS provisional authorization from the Defense Information Systems Agency (DISA) provides a reusable certification that attests to AWS compliance with DoD standards, reducing the time necessary for a DoD mission owner to assess and authorize one of their systems for operation in AWS

https://aws.amazon.com/compliance/dod/

- SciPass is an OpenFlow application designed to help network security scale to 100Gbps. In its simplest mode of operation, SciPass turns an OpenFlow switch into an IDS load balancer capable of considering sensor load in its balancing decisions. When operating in Science DMZ mode, SciPass uses Bro to detect "good" data transfers and programs bypass rules to avoid forwarding through institutional firewalls, improving transfer performance and reducing load on IT infrastructure.

SciPass provides the features needed to deploy as a load balancer for an inline or passive IDS cluster, in addition to its ability to provide the basis for a Science DMZ. At its heart SciPass is an interactive load balancer. On top of this core function, SciPass provides a set of web services that are typically used by IDS sensors or other systems to guide forwarding behavior.

Sensor Load Report API - sensors can report their load, which lets SciPass adjust the volume of traffic sent to that sensor

Blacklist API - used to define traffic which should be dropped at the switch

FastPath API - used to define the traffic which is good and thus should not traverse the firewall or IDS sensor.

IDS load balancer - Balancing of traffic across sensors based on traffic dynamics and sensor load.

CLI - for administration and troubleshooting

https://docs.globalnoc.iu.edu/sdn/scipass.html
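The forwarding behavior described above (sensors reporting load, a blacklist, a fast path, and load-based balancing) can be sketched roughly as follows. This is an illustrative model only, not SciPass code or its web service API: the `LoadAwareBalancer` class, its fields, and the per-source-IP matching are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class LoadAwareBalancer:
    """Hypothetical model of an IDS load balancer that considers sensor load."""

    sensor_load: dict = field(default_factory=dict)  # sensor name -> last reported load
    blacklist: set = field(default_factory=set)      # sources to drop at the switch
    fastpath: set = field(default_factory=set)       # trusted sources that bypass inspection

    def report_load(self, sensor: str, load: float) -> None:
        # Analogue of a sensor load report: the sensor tells the balancer how busy it is.
        self.sensor_load[sensor] = load

    def route(self, src_ip: str):
        # Blacklisted traffic is dropped before anything else is considered.
        if src_ip in self.blacklist:
            return ("drop", None)
        # Known-good traffic skips the firewall / IDS path entirely.
        if src_ip in self.fastpath:
            return ("bypass", None)
        if not self.sensor_load:
            raise RuntimeError("no sensor has reported its load yet")
        # Everything else goes to whichever sensor currently reports the lowest load.
        least_loaded = min(self.sensor_load, key=self.sensor_load.get)
        return ("inspect", least_loaded)


balancer = LoadAwareBalancer()
balancer.report_load("ids-1", 0.90)
balancer.report_load("ids-2", 0.20)
balancer.blacklist.add("203.0.113.7")
balancer.fastpath.add("198.51.100.10")
```

With these reports, `balancer.route("10.0.0.5")` selects `ids-2`; if `ids-2` later reports a higher load than `ids-1`, new traffic shifts to `ids-1`. A real OpenFlow deployment would install flow rules on the switch rather than decide per packet in Python.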

- FlowIPS is an OpenFlow-based intrusion detection and prevention system (IDPS) solution that focuses on intrusion prevention in the cloud virtual networking environment. The chapter discusses the technical background of software-defined networking (SDN) and intrusion detection systems.

https://onlinelibrary.wiley.com/doi/abs/10.1002/9781119042655.ch11

OpenID is an open standard and decentralized protocol by the non-profit OpenID Foundation that allows users to be authenticated by certain co-operating sites (known as Relying Parties or RP) using a third party service. This eliminates the need for webmasters to provide their own ad hoc systems and allows users to consolidate their digital identities.

https://en.wikipedia.org/wiki/OpenID

The OpenID Foundation is a non-profit international standardization organization of individuals and companies committed to enabling, promoting and protecting OpenID technologies.

http://openid.net/

We build digital identity ecosystems to connect these users, devices, and things for premier businesses and governments worldwide.

https://www.forgerock.com

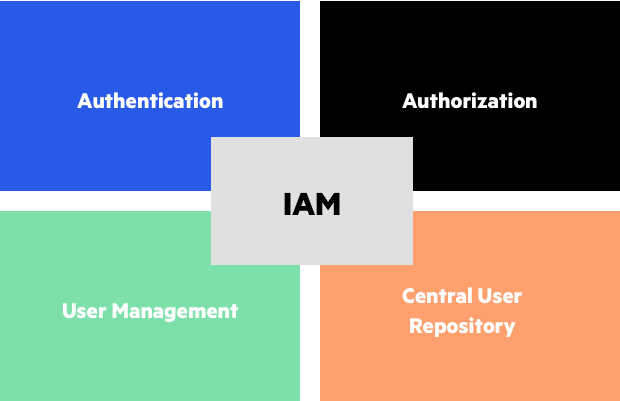

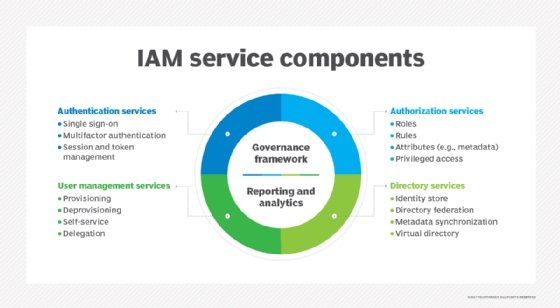

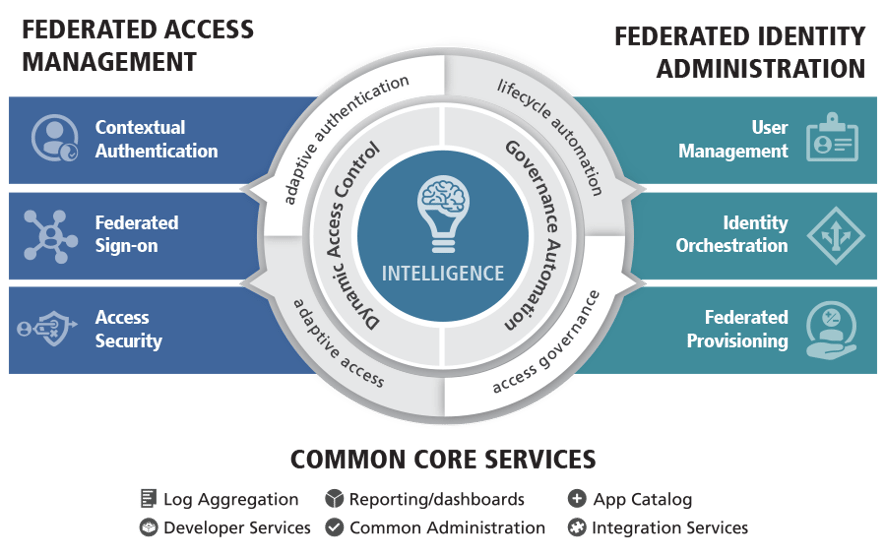

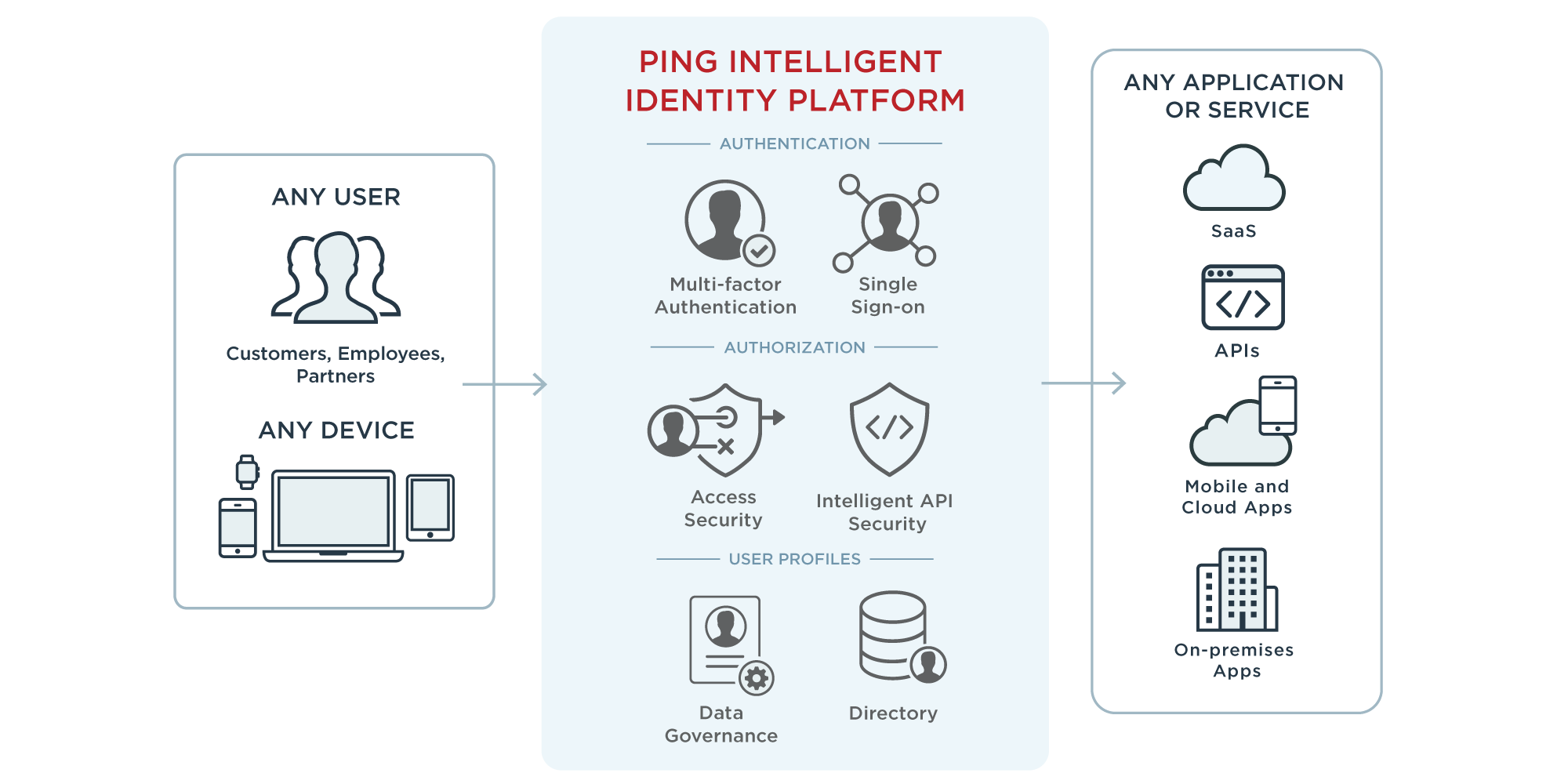

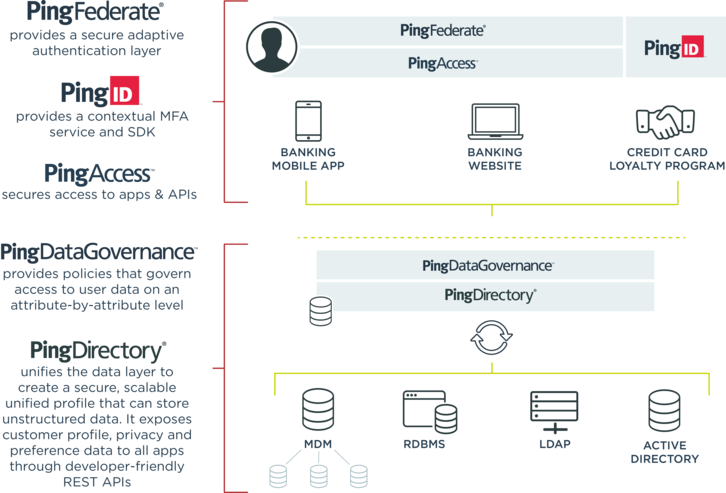

- Identity and access management (IAM) is the security discipline that enables the right individuals to access the right resources at the right times for the right reasons. IAM addresses the mission-critical need to ensure appropriate access to resources across increasingly heterogeneous technology environments, and to meet increasingly rigorous compliance requirements.

https://www.gartner.com/it-glossary/identity-and-access-management-iam

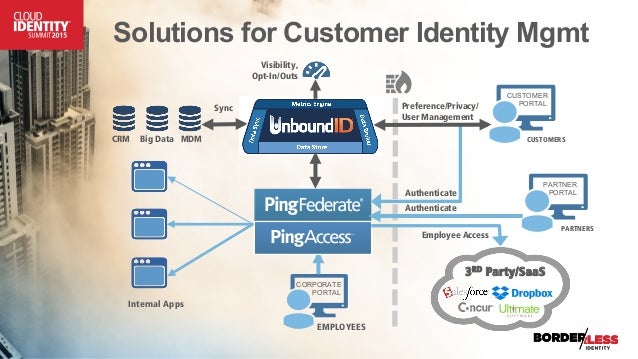

- Today's organizations need to govern and enforce user access across multiple channels, including mobile, social and cloud

IBM® Security identity and access management solutions help strengthen compliance and reduce risk by protecting and monitoring user access in today's multi-perimeter environments.

http://www-03.ibm.com/software/products/en/category/identity-access-management

- Eliminate the complexities and time-consuming processes that are often required to govern identities, manage privileged accounts and control access

http://software.dell.com/solutions/identity-and-access-management/

The inherently casual and decentralised nature of cloud services will increasingly push organisations to reconsider their identity and access management (IAM) infrastructure

- That leaves businesses with no idea what accounts their employees are using on what cloud-based services – and no way to control the business data that might be stored on those services. Although social-media services like Facebook and Twitter have pioneered identity federation by enabling logons to a range of third-party services, integrating those identities with corporate directory services remains a sticking point

https://www.cso.com.au/article/524164/cloud-enabled_shadow_it_driving_imperative_iam_reinvention_ovum/

- Google Cloud Identity & Access Management (IAM) lets administrators authorize who can take action on specific resources, giving you full control and visibility to manage cloud resources centrally. For established enterprises with complex organizational structures, hundreds of workgroups and potentially many more projects, Cloud IAM provides a unified view into security policy across your entire organization, with built-in auditing to ease compliance processes.

https://cloud.google.com/iam/
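The idea of authorizing "who can take action on specific resources" can be sketched as a policy made of role bindings. The structure below is loosely modeled on the role-to-members binding style; the role names, permission strings, members, and the `is_allowed` helper are all hypothetical and are not the Cloud IAM API.

```python
# Hypothetical roles, each granting a set of permissions.
ROLE_PERMISSIONS = {
    "roles/example.viewer": {"objects.get", "objects.list"},
    "roles/example.editor": {"objects.get", "objects.list", "objects.create"},
}

# A policy attached to one resource: each binding grants a role to some members.
bucket_policy = {
    "bindings": [
        {"role": "roles/example.viewer", "members": ["user:alice@example.com"]},
        {"role": "roles/example.editor", "members": ["group:data-eng@example.com"]},
    ]
}


def is_allowed(policy: dict, member: str, permission: str) -> bool:
    """Return True if any binding grants `member` a role containing `permission`."""
    for binding in policy["bindings"]:
        granted = ROLE_PERMISSIONS.get(binding["role"], set())
        if member in binding["members"] and permission in granted:
            return True
    return False  # default deny: no matching binding means no access


alice_can_read = is_allowed(bucket_policy, "user:alice@example.com", "objects.get")
alice_can_write = is_allowed(bucket_policy, "user:alice@example.com", "objects.create")
```

Here `alice_can_read` is `True` and `alice_can_write` is `False`, because the viewer role does not include the create permission. Keeping policies as data like this is also what makes centralized auditing practical: the bindings can be listed and reviewed without running the workloads they protect.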

- Identity and Access Management (IdAM)

The NCCoE has released the final version of NIST Cybersecurity Practice Guide SP 1800-2, Identity and Access Management (IdAM).

To help the energy sector address this cybersecurity challenge, security engineers at the National Cybersecurity Center of Excellence (NCCoE) developed an example solution that utilities can use to more securely and efficiently manage access to the networked devices and facilities upon which power generation, transmission, and distribution depend. The solution demonstrates a centralized IdAM platform that can provide a comprehensive view of all users within the enterprise across all silos, and the access rights users have been granted, using multiple commercially available products.

https://www.nccoe.nist.gov/projects/use-cases/idam

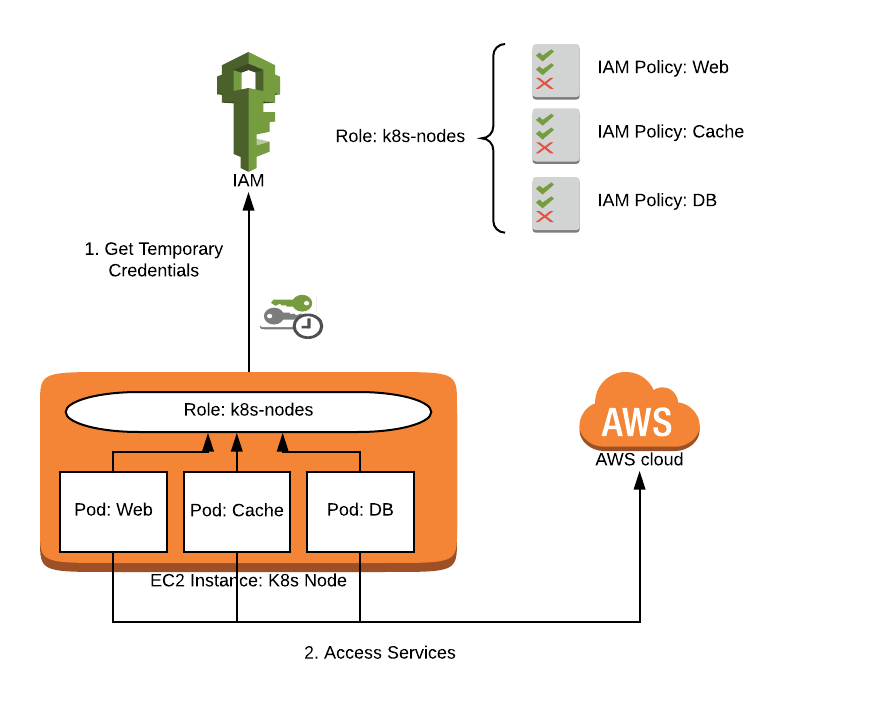

Understanding How IAM Works

How to automate SAML federation to multiple AWS accounts from Microsoft Azure Active Directory

How to Restrict Amazon S3 Bucket Access to a Specific IAM Role

IAM Access in Kubernetes: The AWS Security Problem

Securing Identity and Access Management Solutions Cyber Ark

6/27 Complete Security for Your AWS Deployment | Identiverse 2018

Strengthen the Security of Your IAM System with RadiantOne’s Advanced Security Features

identity enables a secure, connected and agile enterprise

CIS 2015 Modernize IAM with UnboundID and Ping Identity - Terry Sigle & B. Allyn Fay

IAM/SSO Provider Feature: Ping Identity

When It Comes to Regulatory Compliance, Identity and Access Management Rules

Protecting PingFederate Users with RSA SecurID Access

CA Security - Deloitte IAM Summit - Vasu

How to Move Away From CA SiteMinder to Open Source Authn / Authz

Integrate CA SiteMinder® with CA Identity Manager

Identity and Access Management Solution Overview

The Attribute Exchange Network (AXN™)

- Microsoft Cloud App Security

Identify and combat cyberthreats across all your cloud services with Microsoft Cloud App Security (MCAS), a cloud access security broker (CASB) that provides multifunction visibility, control over data travel, and sophisticated analytics.

https://www.microsoft.com/en-ww/security/business/cloud-app-security

- Integrate Cloud App Security with Zscaler

If you work with both Cloud App Security and Zscaler, you can integrate the two products to enhance your security Cloud Discovery experience. Zscaler, as a standalone cloud proxy, monitors your organization's traffic enabling you to set policies for blocking transactions. Together, Cloud App Security and Zscaler provide the following capabilities:

Seamless deployment of Cloud Discovery - Use Zscaler to proxy your traffic and send it to Cloud App Security. This eliminates the need for installation of log collectors on your network endpoints to enable Cloud Discovery.

Zscaler's block capabilities are automatically applied on apps you set as unsanctioned in Cloud App Security.

Enhance your Zscaler portal with Cloud App Security's risk assessment for 200 leading cloud apps, which can be viewed directly in the Zscaler portal.

https://docs.microsoft.com/en-us/cloud-app-security/zscaler-integration

- Integrate Cloud App Security with iboss

If you work with both Cloud App Security and iboss, you can integrate the two products to enhance your security Cloud Discovery experience. iboss is a standalone secure cloud gateway that monitors your organization's traffic and enables you to set policies that block transactions. Together, Cloud App Security and iboss provide the following capabilities:

Seamless deployment of Cloud Discovery - Use iboss to proxy your traffic and send it to Cloud App Security. This eliminates the need for installation of log collectors on your network endpoints to enable Cloud Discovery.

iboss's block capabilities are automatically applied on apps you set as unsanctioned in Cloud App Security.

Enhance your iboss admin portal with Cloud App Security's risk assessment of the top 100 cloud apps in your organization, which can be viewed directly in the iboss admin portal.

https://docs.microsoft.com/bs-cyrl-ba/cloud-app-security/iboss-integration

- Integrate Cloud App Security with Corrata

If you work with both Cloud App Security and Corrata, you can integrate the two products to enhance your security Cloud Discovery experience for mobile app use. Corrata, as a local Mobile gateway, monitors your organization's traffic from mobile devices enabling you to set policies for blocking transactions. Together, Cloud App Security and Corrata provide the following capabilities:

Seamless deployment of Cloud Discovery - Use Corrata to collect your mobile device traffic and send it to Cloud App Security. This eliminates the need for installation of log collectors on your network endpoints to enable Cloud Discovery.

Corrata's block capabilities are automatically applied on apps you set as unsanctioned in Cloud App Security.

Enhance your Corrata portal with Cloud App Security's risk assessment for leading cloud apps, which can be viewed directly in the Corrata portal.

https://docs.microsoft.com/bs-cyrl-ba/cloud-app-security/corrata-integration

- Integrate Cloud App Security with Menlo Security

If you work with both Cloud App Security and Menlo Security, you can integrate the two products to enhance your security Cloud Discovery experience. Menlo Security, as a standalone Secure Web Gateway, monitors your organization's traffic enabling you to set policies for blocking transactions. Together, Cloud App Security and Menlo Security provide the following capabilities:

Seamless deployment of Cloud Discovery - Use Menlo Security to proxy your traffic and send it to Cloud App Security. This eliminates the need for installation of log collectors on your network endpoints to enable Cloud Discovery.

Menlo Security's block capabilities are automatically applied on apps you set as unsanctioned in Cloud App Security.

https://docs.microsoft.com/bs-cyrl-ba/cloud-app-security/menlo-integration

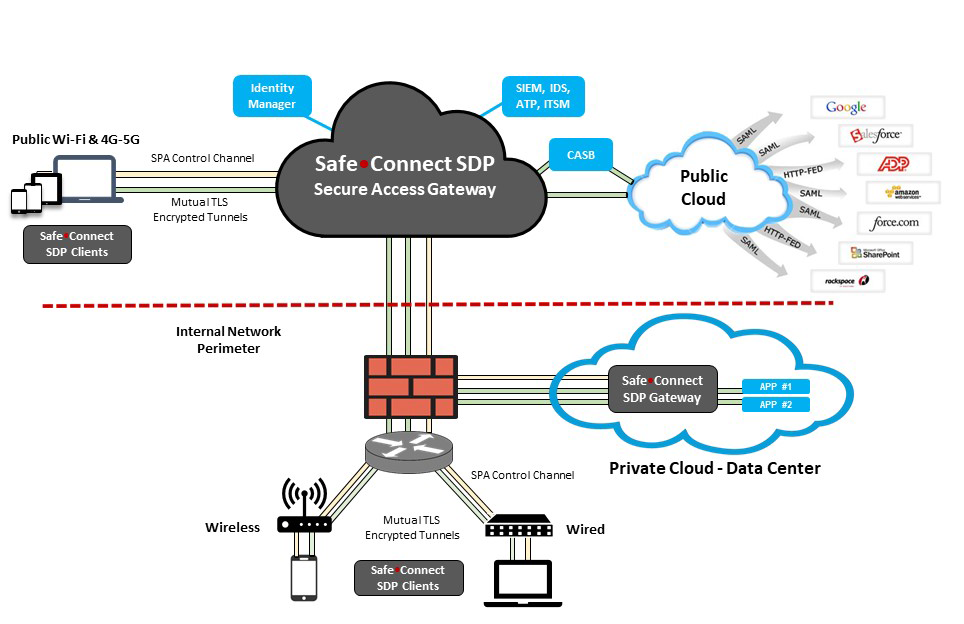

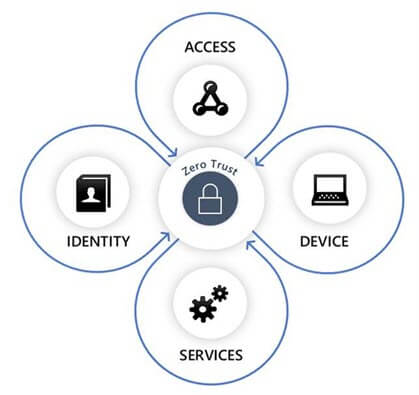

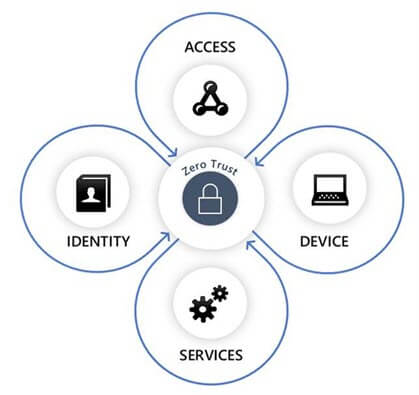

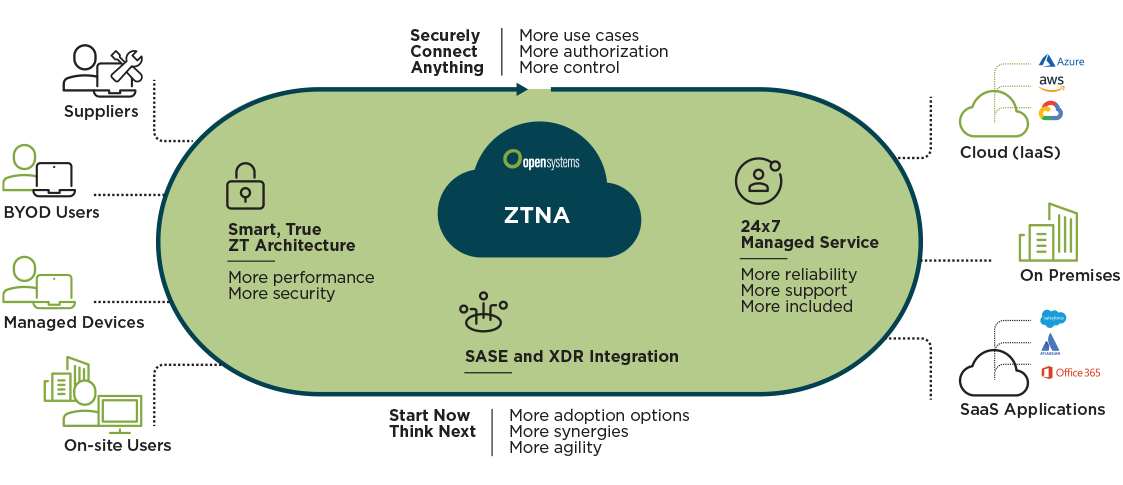

Zero Trust Network Access (ZTNA) is an IT security solution that provides secure remote access to an organization’s applications, data, and services based on clearly defined access control policies.

ZTNA differs from virtual private networks (VPNs) in that it grants access only to specific services or applications, whereas VPNs grant access to an entire network.

How does ZTNA work?

When ZTNA is in use, access to specific applications or resources is granted only after the user has been authenticated to the ZTNA service. Once authenticated, the ZTNA service grants the user access to the specific application through a secure, encrypted tunnel, which adds a layer of protection by shielding applications and services from IP addresses that would otherwise be visible.

In this manner, ZTNAs act very much like software defined perimeters (SDPs), relying on the same ‘dark cloud’ idea to prevent users from having visibility into any other applications and services they are not permissioned to access. This also offers protection against lateral attacks, since even if an attacker gained access they would not be able to scan to locate other services.

https://www.vmware.com/topics/glossary/content/zero-trust-network-access-ztna
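The per-application access model described above can be sketched as a default-deny policy check. This is a simplified illustration rather than any vendor's ZTNA product: the `User` type, the `APP_POLICIES` table, and the group names are hypothetical, and the encrypted tunnel itself is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    authenticated: bool
    groups: frozenset


# Hypothetical policy table: application name -> groups permitted to reach it.
APP_POLICIES = {
    "payroll": {"finance"},
    "wiki": {"finance", "engineering"},
    "build-server": {"engineering"},
}


def visible_apps(user: User) -> set:
    """Applications the user may even learn about; everything else stays dark."""
    if not user.authenticated:
        return set()  # unauthenticated users see nothing at all
    return {app for app, allowed in APP_POLICIES.items() if allowed & user.groups}


def connect(user: User, app: str) -> bool:
    # Access is granted per application, never to the whole network.
    return app in visible_apps(user)


alice = User("alice", authenticated=True, groups=frozenset({"engineering"}))
mallory = User("mallory", authenticated=False, groups=frozenset({"finance"}))
```

For `alice`, `visible_apps` returns only `wiki` and `build-server`, so a compromised engineering account cannot discover or scan `payroll`. That is the lateral-movement protection the text describes, in contrast to a VPN that would place the user on the full network.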

- The Cloud Security Alliance's (CSA) "Cloud Controls Matrix" can help you define your requirements when developing or refining your enterprise cloud security strategy.

The Cloud Security Alliance Cloud Controls Matrix (CCM) is specifically designed to provide fundamental security principles to guide cloud vendors and to assist prospective cloud customers in assessing the overall security risk of a cloud provider.

The CSA CCM provides a controls framework that gives a detailed understanding of security concepts and principles that are aligned to the Cloud Security Alliance guidance in 13 domains.

The foundations of the Cloud Security Alliance Controls Matrix rest on its customized relationship to other industry-accepted security standards, regulations, and controls frameworks such as ISO 27001/27002, ISACA COBIT, PCI, NIST, Jericho Forum and NERC CIP.

https://cpl.thalesgroup.com/faq/data-security-cloud/what-cloud-controls-matrix