- Docker

A container system that originally made use of LXC containers

Build, Ship, and Run Any App, Anywhere

http://www.docker.com

- Docker is an open-source project that automates the deployment of applications inside software containers, by providing an additional layer of abstraction and automation of operating-system-level virtualization on Linux, Mac OS and Windows.

https://hub.docker.com/_/docker/

- The Registry is a stateless, highly scalable server-side application that stores and lets you distribute Docker images. The Registry is open-source, under the permissive Apache license.

You should use the Registry if you want to:

tightly control where your images are being stored

fully own your images distribution pipeline

integrate image storage and distribution tightly into your in-house development workflow

https://docs.docker.com/registry/

- Both ENTRYPOINT and CMD give you a way to identify which executable should be run when a container is started from your image.

In fact, if you want your image to be runnable (without additional docker run command line arguments) you must specify an ENTRYPOINT or CMD.

Combining ENTRYPOINT and CMD allows you to specify the default executable for your image while also providing default arguments to that executable which may be overridden by the user.

https://www.ctl.io/developers/blog/post/dockerfile-entrypoint-vs-cmd/

- An ENTRYPOINT helps you to configure a container that you can run as an executable.

The ENTRYPOINT specifies a command that will always be executed when the container starts.

ENTRYPOINT: command to run when container starts.

The ENTRYPOINT command and its parameters are not overwritten by arguments given on the command line (only the --entrypoint flag of docker run replaces them). Instead, all command-line arguments are appended after the ENTRYPOINT parameters.

The main purpose of a CMD is to provide defaults for an executing container.

The CMD specifies arguments that will be fed to the ENTRYPOINT.

CMD will be overridden when running the container with alternative arguments.

CMD should be used as a way of defining default arguments for an ENTRYPOINT command or for executing an ad-hoc command in a container.

CMD: command to run when container starts or arguments to ENTRYPOINT if specified.

CMD sets default command and/or parameters, which can be overwritten from command line when docker container runs.

Both CMD and ENTRYPOINT instructions define what command gets executed when running a container. There are a few rules that describe their co-operation.

A Dockerfile should specify at least one of the CMD or ENTRYPOINT instructions.

https://stackoverflow.com/questions/21553353/what-is-the-difference-between-cmd-and-entrypoint-in-a-dockerfile
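
A minimal sketch of how the two instructions combine. The image tag `hello-demo`, the temporary build directory and the function name are assumptions made for illustration; only the ENTRYPOINT/CMD behaviour comes from the notes above.

```shell
# Write a tiny Dockerfile that combines ENTRYPOINT (the fixed executable
# and its fixed first argument) with CMD (a default argument).
build_dir=$(mktemp -d)
cat > "$build_dir/Dockerfile" <<'EOF'
FROM ubuntu
ENTRYPOINT ["echo", "Hello"]
CMD ["world"]
EOF

# Build the image under the hypothetical tag "hello-demo" and run it twice.
run_hello_demo() {
    docker build -t hello-demo "$build_dir" || return 1
    # No extra arguments: CMD supplies "world", so this prints "Hello world".
    docker run --rm hello-demo || return 1
    # Extra arguments replace CMD only, so this prints "Hello Docker".
    docker run --rm hello-demo Docker
}
```

Calling `run_hello_demo` on a machine with Docker installed builds the image and shows both cases.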

- It is generally recommended that you separate areas of concern by using one service per container. That service may fork into multiple processes (for example, Apache web server starts multiple worker processes).

It’s ok to have multiple processes but to get the most benefit out of Docker, avoid one container being responsible for multiple aspects of your overall application. You can connect multiple containers using user-defined networks and shared volumes.

If you need to run more than one service within a container, you can accomplish this in a few different ways.

Put all of your commands in a wrapper script, complete with testing and debugging information. Run the wrapper script as your CMD.

Use a process manager like supervisord. This is a moderately heavy-weight approach that requires you to package supervisord and its configuration in your image (or base your image on one that includes supervisord), along with the different applications it manages. Then you start supervisord, which manages your processes for you.

https://docs.docker.com/config/containers/multi-service_container/
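
The wrapper-script approach could look roughly like this. `first_service` and `second_service` are hypothetical executables that are assumed to be installed by other steps of the Dockerfile; the sketch only generates the two files and does not build anything.

```shell
# Generate a wrapper script plus a Dockerfile that runs it as CMD.
build_dir=$(mktemp -d)

cat > "$build_dir/wrapper.sh" <<'EOF'
#!/bin/sh
# Start the first (hypothetical) service in the background.
/usr/local/bin/first_service &
# Replace the shell with the second service so that it stays in the
# foreground and keeps the container alive.
exec /usr/local/bin/second_service
EOF
chmod +x "$build_dir/wrapper.sh"

cat > "$build_dir/Dockerfile" <<'EOF'
FROM ubuntu
# Steps that install first_service and second_service would go here.
COPY wrapper.sh /usr/local/bin/wrapper.sh
CMD ["/usr/local/bin/wrapper.sh"]
EOF
```

A wrapper this small does no restarting or health checking; that is where a process manager such as supervisord comes in.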

- By default, Docker containers are “unprivileged” and cannot, for example, run a Docker daemon inside a Docker container. This is because by default a container is not allowed to access any devices, but a “privileged” container is given access to all devices

https://docs.docker.com/engine/reference/run/#runtime-privilege-and-linux-capabilities

- Docker is a platform that sits between apps and infrastructure. By building apps on Docker, developers and IT operations get freedom and flexibility. That’s because Docker runs everywhere that enterprises deploy apps: on-prem (including on IBM mainframes, enterprise Linux and Windows) and in the cloud. Once an application is containerized, it’s easy to re-build, re-deploy and move around, or even run in hybrid setups that straddle on-prem and cloud infrastructure.

https://blog.docker.com/2017/10/kubernetes-docker-platform-and-moby-project/

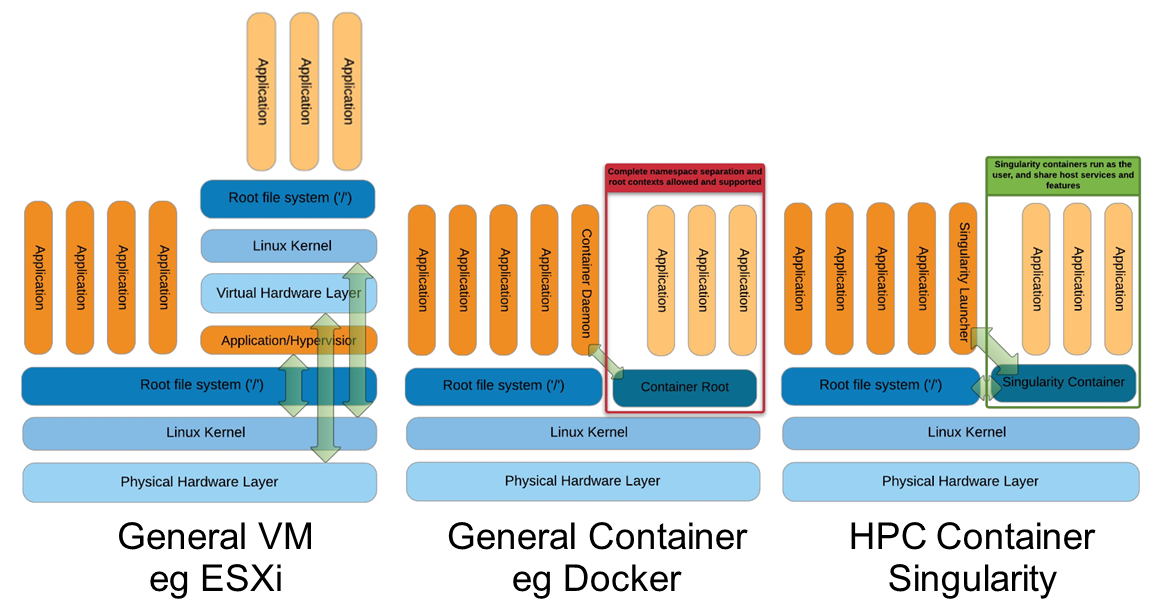

- The architecture of a Docker container includes a physical machine with a host operating system. On top of the host operating system, a Docker engine is deployed, which helps create a virtual container for hosting applications. Docker engines create isolated containers on which applications can be deployed. Unlike a typical hypervisor solution, Docker eliminates the requirement of creating a separate VM for each application, as well as the requirement of a guest OS for each VM.

In hypervisor-based application virtualization, a virtualization platform (for example Hyper-V or VMware) is deployed on a physical server with a host OS. On top of the virtualization platform, virtual machines are created, each of which has an independent guest OS. On top of all these layers, the application is deployed. Hosting so many virtual machines, each having an independent guest OS, makes this architecture much more resource-intensive than Docker containers.

http://www.networkcomputing.com/data-centers/docker-containers-9-fundamental-facts/1537300193

- By default, when you launch a container, you will also use a shell command

sudo docker run -it centos /bin/bash

We used this command to create a new container and then pressed Ctrl+P followed by Ctrl+Q to detach from it. Detaching this way ensures that the container keeps running after we leave it.

We can verify that the container still exists with the docker ps command.

There is an easier way to attach to containers and exit them cleanly without the need of destroying them.

https://www.tutorialspoint.com/docker/docker_containers_and_shells.htm
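
A sketch of the workflow described above. The container name `demo-shell` and the function names are assumptions; `docker exec` is shown as one way to get a shell that can be left without stopping the container, which may differ from the tool the linked tutorial goes on to describe.

```shell
# Start an interactive CentOS container under the hypothetical name
# "demo-shell". -i keeps stdin open, -t allocates a terminal.
start_shell() {
    docker run -it --name demo-shell centos /bin/bash
}

# After detaching with Ctrl+P, Ctrl+Q the container should still be listed.
list_running() {
    docker ps
}

# Open an additional shell in the running container. Leaving it with
# "exit" ends only that shell, not the container's main process.
extra_shell() {
    docker exec -it demo-shell /bin/bash
}
```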

- Docker Hub is a cloud registry service that lets you download Docker images built by other communities. You can also upload your own Docker images to Docker Hub.

sudo docker pull jenkins

sudo docker run -p 8080:8080 -p 50000:50000 jenkins

https://www.tutorialspoint.com/docker/docker_hub.htm

- we can use the CentOS image available in Docker Hub to run CentOS on our Ubuntu machine

https://www.tutorialspoint.com/docker/docker_images.htm

- In our example, we are going to use the Apache Web Server on Ubuntu to build our image

https://www.tutorialspoint.com/docker/building_web_server_docker_file.htm

- Docker also gives you the capability to create your own Docker images, which is done with the help of Dockerfiles.

A Dockerfile is a simple text file with instructions on how to build your images.

Step 1 − Create a file called Dockerfile and edit it using vim. Please note that the name of the file has to be "Dockerfile", with a capital "D".

Step 2 − Build your Dockerfile using the following instructions.

#This is a sample Image

FROM ubuntu

MAINTAINER demousr@gmail.com

RUN apt-get update

RUN apt-get install -y nginx

CMD ["echo","Image created"]

https://www.tutorialspoint.com/docker/docker_file.htm

- You might have the need to have your own private repositories.

You may not want to host the repositories on Docker Hub. For this, Docker itself provides a registry container.

Registry is the container maintained by Docker that can be used to host private repositories.

sudo docker run -d -p 5000:5000 --name registry registry:2

https://www.tutorialspoint.com/docker/docker_private_registries.htm
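
Once the registry container from the command above is listening on localhost:5000, an image can be pushed into it roughly as follows. The function name is an assumption, and `ubuntu` in the usage line is only an example image.

```shell
# Copy an image from Docker Hub into the private registry on localhost:5000.
push_to_local_registry() {
    image=$1
    docker pull "$image" || return 1
    # The registry host:port prefix in the tag tells Docker where to push.
    docker tag "$image" "localhost:5000/$image" || return 1
    docker push "localhost:5000/$image"
}
```

Usage: `push_to_local_registry ubuntu`, after which `docker pull localhost:5000/ubuntu` should fetch the image from the private registry.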

- In Docker, the containers themselves can have applications running on ports. When you run a container, if you want to access the application in the container via a port number, you need to map the port number of the container to the port number of the Docker host.

https://www.tutorialspoint.com/docker/docker_managing_ports.htm

- sudo docker run -p 8080:8080 -p 50000:50000 jenkins

The left-hand side of the port number mapping is the Docker host port to map to and the right-hand side is the Docker container port number.

The docker inspect command produces JSON output. In that output there is an "ExposedPorts" section listing two ports: the data port 8080 and the control port 50000.

https://www.tutorialspoint.com/docker/docker_managing_ports.htm
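
A small sketch for checking the mapping from the command line. The container name `jenkins-demo` and the function names are assumptions; the image name is the one used in the notes.

```shell
# Start Jenkins detached; each -p flag is HOST_PORT:CONTAINER_PORT.
start_jenkins() {
    docker run -d --name jenkins-demo -p 8080:8080 -p 50000:50000 jenkins
}

show_ports() {
    # Ports declared by the image (the "ExposedPorts" section of the JSON).
    docker inspect --format '{{json .Config.ExposedPorts}}' jenkins-demo
    # Host-to-container mappings that are actually in effect.
    docker port jenkins-demo
}
```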

- You might have a Web Server and a Database Server.

When we talk about linking Docker Containers, what we are talking about here is the following:

We can launch one Docker container that will be running the Database Server.

We will launch the second Docker container (Web Server) with a link flag to the container launched in Step 1. This way, it will be able to talk to the Database Server via the link name.

This is a generic and portable way of linking the containers together rather than via the networking port that we saw earlier in the series

Check out Docker Compose, which provides a mechanism to do the linking but by specifying the containers and their links in a single file

https://rominirani.com/docker-tutorial-series-part-8-linking-containers-69a4e5bf50fb

- By linking containers, you provide a secure channel via which Docker containers can communicate with each other.

https://rominirani.com/docker-tutorial-series-part-8-linking-containers-69a4e5bf50fb

- Networking is one of Docker's many built-in features. It can be accessed with the --link flag, which lets you connect any number of Docker containers without exposing the containers' internal ports to the outside world.

https://linuxconfig.org/basic-example-on-how-to-link-docker-containers
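
A sketch of the two-container setup described in the linking notes. The container names `db` and `web`, the alias `database` and the `redis` and `nginx` images are assumptions chosen for illustration. Note that the Docker documentation describes --link as a legacy feature and points to user-defined networks instead.

```shell
link_web_to_db() {
    # Step 1: start the (hypothetical) database container.
    docker run -d --name db redis || return 1
    # Step 2: --link NAME:ALIAS makes the "db" container reachable from
    # "web" under the hostname "database", without publishing any port.
    docker run -d --name web --link db:database nginx
}
```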

- NGINX is a popular lightweight web server that is often used to serve server-side applications.

#This is a nginx Image sample

FROM ubuntu

MAINTAINER pumpkin@gmail.com

RUN apt-get update

RUN apt-get install -y nginx

CMD ["echo","nginx Image created"]

https://www.tutorialspoint.com/docker/docker_setting_nginx.htm

- In Docker, you have a separate volume that can be shared across containers. These are known as data volumes. Some of the features of the data volume are −

They are initialized when the container is created.

They can be shared and also reused amongst many containers.

Any changes to the volume itself can be made directly.

They exist even after the container is deleted.

Now suppose you wanted to map the volume in the container to a local volume

https://www.tutorialspoint.com/docker/docker_storage.htm
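
The sharing behaviour can be tried with a named volume. The volume name `demo-data`, the `/data` mount point and the function names are assumptions for this sketch.

```shell
share_volume() {
    docker volume create demo-data || return 1
    # Write a file into the volume from one container...
    docker run --rm -v demo-data:/data ubuntu sh -c 'echo hello > /data/greeting' || return 1
    # ...and read it back from a second container. The first container is
    # already gone at this point (--rm), but the volume still exists.
    docker run --rm -v demo-data:/data ubuntu cat /data/greeting
}

# Map a host directory (first argument) into the container instead of
# using a named volume.
mount_host_dir() {
    docker run --rm -v "$1":/data ubuntu ls /data
}
```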

- Docker takes care of the networking aspects so that the containers can communicate with other containers and also with the Docker Host.

If you do an ifconfig on the Docker Host, you will see the Docker Ethernet adapter. This adapter is created when Docker is installed on the Docker Host.

This is a bridge between the Docker Host and the Linux Host

One can create a network in Docker before launching containers

You can now attach the new network when launching the container.

https://www.tutorialspoint.com/docker/docker_networking.htm
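
Creating a network first and attaching a container at launch might look like this. The network name `demo-net`, the container name `web` and the `nginx` image are assumptions.

```shell
create_and_attach() {
    # Create a user-defined network (the bridge driver is the default).
    docker network create demo-net || return 1
    # Attach the new network when launching the container.
    docker run -d --name web --network demo-net nginx || return 1
    # Show the network's subnet and the containers attached to it.
    docker network inspect demo-net
}
```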

- The Docker server creates and configures the host system’s docker0 interface as an Ethernet bridge inside the Linux kernel, which Docker containers can use to communicate with each other and with the outside world. The default configuration of docker0 works for most scenarios, but you can customize the bridge based on your specific requirements.

The docker0 bridge is a virtual interface created by Docker. Docker randomly chooses an address and subnet from the private range defined by RFC 1918 that are not in use on the host machine and assigns them to docker0. All Docker containers are connected to the docker0 bridge by default, and they can use the iptables NAT rules created by Docker to communicate with the outside world.

https://developer.ibm.com/recipes/tutorials/networking-your-docker-containers-using-docker0-bridge/

- the basics of creating your own custom Docker spins.

you can roll your own from your favorite Linux distribution

because running Linux inside a container is very different from running it in the usual way, installed directly on your hardware, or in a virtual machine

simple setup that installs and starts a barebones Apache server into the official Ubuntu image

https://www.linux.com/learn/how-build-your-own-custom-docker-images

- Create a simple parent image using scratch

You can use Docker’s reserved, minimal image, scratch, as a starting point for building containers. Using the scratch “image” signals to the build process that you want the next command in the Dockerfile to be the first filesystem layer in your image.

https://docs.docker.com/develop/develop-images/baseimages/#create-a-full-image-using-tar
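
A minimal sketch of a scratch-based image. The binary name `hello` is an assumption: it stands for a statically linked Linux executable that already sits next to the Dockerfile, since scratch provides no libraries or shell.

```shell
# Generate a Dockerfile whose only filesystem layer is one static binary.
build_dir=$(mktemp -d)
cat > "$build_dir/Dockerfile" <<'EOF'
FROM scratch
# "hello" is assumed to be a statically linked Linux executable.
COPY hello /
CMD ["/hello"]
EOF
```

With the binary in place, `docker build -t hello-scratch "$build_dir"` (the tag is again hypothetical) would produce an image containing nothing but that file.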

- Creating a base image using scratch

In the Docker registry, there is a special repository known as scratch, which was created using an empty tar file.

https://linoxide.com/linux-how-to/2-ways-create-docker-base-image/

- Create a base image

A parent image is an image that your image is based on. It refers to the contents of the FROM directive in the Dockerfile.

A base image either has no FROM line in its Dockerfile or has FROM scratch.

Create a full image using tar

In general, start with a working machine that is running the distribution you’d like to package as a parent image, though that is not required for some tools like Debian’s Debootstrap, which you can also use to build Ubuntu images.

There are more example scripts for creating parent images in the Docker GitHub Repo:

BusyBox

CentOS / Scientific Linux CERN (SLC) on Debian/Ubuntu or on CentOS/RHEL/SLC/etc.

Debian / Ubuntu

Note: Because Docker for Mac and Docker for Windows use a Linux VM, you need a Linux binary, rather than a Mac or Windows binary. You can use a Docker container to build it:

https://docs.docker.com/develop/develop-images/baseimages/

- An image developer can define image defaults related to:

detached or foreground running

container identification

network settings

runtime constraints on CPU and memory

Detached vs foreground

When starting a Docker container, you must first decide if you want to run the container in the background in a “detached” mode or in the default foreground mode:

To start a container in detached mode, you use -d=true or just -d option.

https://docs.docker.com/engine/reference/run/#general-form

- Detached (-d)

To start a container in detached mode, you use -d=true or just the -d option. By design, containers started in detached mode exit when the root process used to run the container exits unless you also specify the --rm option. If you use -d with --rm, the container is removed when it exits or when the daemon exits, whichever happens first.

Do not pass a service x start command to a detached container. For example, this command attempts to start the nginx service.

$ docker run -d -p 80:80 my_image service nginx start

This succeeds in starting the nginx service inside the container. However, it fails the detached container paradigm in that the root process (service nginx start) returns and the detached container stops as designed. As a result, the nginx service is started but cannot be used.

To do input/output with a detached container use network connections or shared volumes. These are required because the container is no longer listening to the command line where docker run was run.

To reattach to a detached container, use docker attach command.

https://docs.docker.com/engine/reference/run/#detached--d
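
For contrast with the failing `service nginx start` example, a sketch that keeps nginx in the foreground. `my_image` is the placeholder image name from the notes and is assumed to have nginx installed; the function name is also an assumption.

```shell
run_nginx_detached() {
    # "daemon off;" keeps nginx in the foreground as the container's root
    # process, so the detached container keeps running.
    docker run -d -p 80:80 my_image nginx -g 'daemon off;'
}
```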

- Foreground

In foreground mode (the default when -d is not specified), docker run can start the process in the container and attach the console to the process’s standard input, output, and standard error. It can even pretend to be a TTY (this is what most command line executables expect) and pass along signals. All of that is configurable:

https://docs.docker.com/engine/reference/run/#foreground

- If you would like to keep your container running in detached mode, you need to run something in the foreground.

An easy way to do this is to tail the /dev/null device as the CMD or ENTRYPOINT command of your Docker image.

CMD tail -f /dev/null

Docker containers, when running in detached mode (the most common -d option), are designed to shut down immediately after the initial entry point command (the program that is run when the container is started from the image) is no longer running in the foreground. This can cause problems because often servers or services running in Docker containers are run in the background, causing your container to shut down before you want it to.

http://bigdatums.net/2017/11/07/how-to-keep-docker-containers-running/

- Kitematic is an open source project built to simplify and streamline using Docker on a Mac or Windows PC. Kitematic automates the Docker installation and setup process and provides an intuitive graphical user interface (GUI) for running Docker containers. Kitematic integrates with Docker Machine to provision a VirtualBox VM and installs the Docker Engine locally on your machine.

https://docs.docker.com/kitematic/userguide/#overview

- Docker Flow is a project aimed at creating an easy-to-use continuous deployment flow. It depends on Docker Engine, Docker Compose, Consul, and Registrator. Each of those tools is proven to bring value and is recommended for any Docker deployment.

https://technologyconversations.com/2016/04/18/docker-flow/

- Portainer is a lightweight management UI which allows you to easily manage your Docker host or Swarm cluster.

https://hub.docker.com/r/portainer/portainer/

- Docker in Docker (DinD)

Docker in Docker (DinD) allows the Docker engine to run as a container inside Docker.

Following are the two primary scenarios where Dind can be needed:

Folks developing and testing Docker need Docker as a Container for faster turnaround time.

Ability to create multiple Docker hosts with less overhead. “Play with Docker” falls in this scenario.

For continuous integration (CI) use cases, containers need to be built from the CI system. With Jenkins, Docker containers are built from the Jenkins master or a Jenkins slave, which themselves run as containers. This scenario does not require a Docker engine running inside the Jenkins container. It is enough to have the Docker client in the Jenkins container and use the Docker engine of the host machine, which can be achieved by mounting “/var/run/docker.sock” from the host machine.

The primary purpose of Docker-in-Docker was to help with the development of Docker itself

Simply put, when you start your CI container (Jenkins or other), instead of hacking something together with Docker-in-Docker, start it with the host's /var/run/docker.sock mounted into it.

Now this container will have access to the Docker socket, and therefore will be able to start containers. Except that instead of starting “child” containers, it will start “sibling” containers.

https://jpetazzo.github.io/2015/09/03/do-not-use-docker-in-docker-for-ci/
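
A sketch of the socket-mount approach. It uses the official `docker` image, which ships the Docker CLI, as a stand-in for a CI container; the function name is an assumption.

```shell
run_with_host_docker() {
    # With the host's socket bind-mounted, "docker ps" inside the container
    # talks to the host's daemon and lists the host's containers, which are
    # "siblings" of this container rather than children.
    docker run --rm -v /var/run/docker.sock:/var/run/docker.sock docker docker ps
}
```

Anything that can reach the socket can control the host's Docker daemon, so this mount should only be given to containers you trust.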

- “Play with Docker”

The application is hosted in the public cloud and can be accessed as a SaaS service. It can also be run on a local machine.

“Play with Docker” can also be installed in a local machine. The advantage here is that we can tweak the application according to our need. For example, we can install custom Docker version, increase the number of Docker hosts, keep the sessions always up etc.

https://sreeninet.wordpress.com/2016/12/23/docker-in-docker-and-play-with-docker/

- Although running Docker inside Docker (DinD) or Docker outside of Docker (DooD) is generally not recommended, there are some legitimate use cases, such as the development of Docker itself or for local CI testing

http://blog.teracy.com/2017/09/11/how-to-use-docker-in-docker-dind-and-docker-outside-of-docker-dood-for-local-ci-testing/

- Images:

The filesystem and metadata needed to run containers.

They can be thought of as an application packaging format that includes all of the dependencies to run the application, and default settings to execute that application.

The metadata includes defaults for the command to run, environment variables, labels, and health check command.

Containers:

An instance of an isolated application.

A container needs the image to define its initial state and uses the read-only filesystem from the image along with a container specific read-write filesystem.

A running container is a wrapper around a running process, giving that process namespaces for things like the filesystem, network, and PIDs.

References:

To the docker engine, an image is just an image id. This is a unique immutable hash. A change to an image results in creating a new image id. However, you can have one or more references pointing to an image id, not unlike symbolic links

https://stackoverflow.com/questions/21498832/in-docker-whats-the-difference-between-a-container-and-an-image

- The goal is to offer a distro and vendor-neutral environment for the development of Linux container technologies.

Our main focus is on system containers, that is, containers which offer an environment as close as possible to the one you'd get from a VM but without the overhead that comes with running a separate kernel and simulating all the hardware.

This is achieved through a combination of kernel security features such as namespaces, mandatory access control, and control groups.

https://linuxcontainers.org/

- Containers do not launch a separate OS for each application, but share the host kernel while maintaining the isolation of resources and processes where required.

- Basically, a container encapsulates applications and defines their interface to the surrounding system, which should make it simpler to drop applications into VMs running Docker in different clouds.

In a virtual machine, you'll find a full operating system install with the associated overhead of virtualized device drivers, memory management, etc., while containers use and share the OS and device drivers of the host. Containers are therefore smaller than VMs, start up much faster, and have better performance. However, this comes at the expense of less isolation and greater compatibility requirements due to sharing the host’s kernel.

The right way to think about Docker is thus to view each container as an encapsulation of one program with all its dependencies. The container can be dropped into (almost) any host and it has everything it needs to operate.

- Simply put, containers provide OS-level process isolation whereas virtual machines offer isolation at the hardware abstraction layer (i.e., hardware virtualization). So in IaaS use cases machine virtualization is an ideal fit, while containers are best suited for packaging/shipping portable and modular software. Again, the two technologies can be used in conjunction with each other for added benefits—for example, Docker containers can be created inside VMs to make a solution ultra-portable.

Docker containers are generally faster and less resource-intensive than virtual machines, but full VMware virtualization still has its unique core benefits, namely security and isolation. Since virtual machines enable true hardware-level isolation, interference and/or exploitation is less likely than with Docker containers. So for application/software portability, Docker is your safest bet. For machine portability and greater isolation, go with VMware.

Though both VMware and Docker can be categorized as virtualization technologies, optimal use cases for each can be quite different. For example, VMware emulates virtual hardware and must account for all the underlying system requirements; as a result, virtual machine images are significantly larger than containers. That said, it’s also possible to run many discrete OS instances in parallel on a single host with VMware, allowing organizations to build true IaaS solutions in-house.

Docker replaced LXC with its own libcontainer library written in Go, allowing for broader native support for different vendors. Additionally, Docker now offers native support for Windows, streamlining the management of Docker hosts and containers on Windows development machines.

https://www.upguard.com/articles/docker-vs.-vmware-how-do-they-stack-up

- Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries – anything that can be installed on a server. This guarantees that the software will always run the same, regardless of its environment.

COMPARING CONTAINERS AND VIRTUAL MACHINES

VIRTUAL MACHINES

Virtual machines include the application, the necessary binaries and libraries, and an entire guest operating system, all of which can amount to tens of GBs.

CONTAINERS

Containers include the application and all of its dependencies, but share the kernel with other containers, running as isolated processes in user space on the host operating system.

Docker containers are not tied to any specific infrastructure: they run on any computer, on any infrastructure, and in any cloud

https://www.docker.com/what-docker

- Docker vs. VMs? Combining Both for Cloud Portability Nirvana

Docker and container technology in general is very interesting to us because it promises to help simplify cloud portability — that is, how to run the same application in different clouds — as well as some aspects of configuration management. Today the RightScale multi-cloud management platform provides a portability framework that combines multi-cloud base images with configuration management tools such as Chef and Puppet to allow users to deploy workloads across clouds and hypervisors

http://www.rightscale.com/blog/cloud-management-best-practices/docker-vs-vms-combining-both-cloud-portability-nirvana

- Docker is an open source application deployment container that evolved from the LinuX Containers (LXCs) used for the past decade. LXCs allow different applications to share operating system (OS) kernel, CPU, and RAM. The VM model blends an application, a full guest OS, and disk emulation. In contrast, the container model uses just the application's dependencies and runs them directly on a host OS.

http://www.bogotobogo.com/DevOps/Docker/Docker_Container_vs_Virtual_Machine.php

- Linux Containers:

System-wide changes are visible in each container. For example, if you upgrade an application on the host machine, this change will apply to all sandboxes that run instances of this application.

https://stackoverflow.com/questions/20578039/difference-between-kvm-and-lxc

- KVM virtualization

KVM virtualization lets you boot full operating systems of different kinds, even non-Linux systems.

LXC - Linux Containers

Containers are a lightweight alternative to full machine virtualization offering lower overhead

LXC is an operating-system-level virtualization environment for running multiple isolated Linux systems on a single Linux control host.

LXC works as userspace interface for the Linux kernel containment features.

It shares the host's kernel, so you can't customize the kernel or load new modules from inside a container.

Areas of application for the different technologies

KVM

Rendering, VPN, Routing, Gaming

Systems that require their own running kernel

Windows or BSD O.S.

LXC

Websites, Web Hosting, DB Intensive or Gameservers

local Application Development

Microservice architecture

http://www.seflow.net/2/index.php/it/blog/mycore-difference-between-kvm-and-lxc-virtualization?d=1

Xen allows several guest operating systems to execute on the same computer hardware, and it is also included with RHEL 5.5. But why use KVM over Xen? KVM is part of the official Linux kernel and fully supported by both Novell and Red Hat. Xen boots from GRUB and loads a modified host operating system such as RHEL into the dom0 (host domain). KVM does not have the concept of dom0 and domU. It uses the /dev/kvm interface to set up the guest operating systems and provides the required drivers. See the official wiki for more information.

A Note About libvirt

libvirt is an open source API and management tool for managing platform virtualization. It is used to manage Linux KVM and Xen virtual machines through graphical interfaces such as Virtual Machine Manager and higher level tools such as oVirt. See the official website for more information.

A Note About QEMU

QEMU is a processor emulator that relies on dynamic binary translation to achieve a reasonable speed while being easy to port on new host CPU architectures. When used as a virtualizer, QEMU achieves near native performances by executing the guest code directly on the host CPU. QEMU supports virtualization when executing under the Xen hypervisor or using the KVM kernel module in Linux. When using KVM, QEMU can virtualize x86, server and embedded PowerPC, and S390 guests. See the official website for more information.

A Note About Virtio Drivers

Virtio is a set of paravirtualized drivers for KVM/Linux. With it you can run multiple virtual machines with unmodified Linux or Windows guests. Each virtual machine has private virtualized hardware: a network card, disk, graphics adapter, etc. According to Red Hat:

Para-virtualized drivers enhance the performance of fully virtualized guests. With the para-virtualized drivers guest I/O latency decreases and throughput increases to near bare-metal levels. It is recommended to use the para-virtualized drivers for fully virtualized guests running I/O heavy tasks and applications.

https://www.cyberciti.biz/faq/centos-rhel-linux-kvm-virtulization-tutorial

- QEMU

What is QEMU? QEMU is a generic and open source machine emulator and virtualizer.

https://www.qemu.org/

- QEMU - Wikipedia

QEMU is a free and open-source hosted hypervisor that performs hardware virtualization. It is a hosted virtual machine monitor: it emulates CPUs through dynamic binary translation and provides a set of device models, enabling it to run a variety of unmodified guest operating systems.

https://en.wikipedia.org/wiki/QEMU

- Linux Containers Compared to KVM Virtualization

The main difference between the KVM virtualization and Linux Containers is that virtual machines require a separate kernel instance to run on, while containers can be deployed from the host operating system. This significantly reduces the complexity of container creation and maintenance. Also, the reduced overhead lets you create a large number of containers with faster startup and shutdown speeds. Both Linux Containers and KVM virtualization have certain advantages and drawbacks that influence the use cases in which these technologies are typically applied

KVM virtualization

Virtual machines are resource-intensive, so you can run only a limited number of them on your host machine. Running separate kernel instances generally means better separation and security. If one of the kernels terminates unexpectedly, it does not disable the whole system. On the other hand, this isolation makes it harder for virtual machines to communicate with the rest of the system, and therefore several interpretation mechanisms must be used.

The guest virtual machine is isolated from host changes, which lets you run different versions of the same application on the host and the virtual machine.

- LinuX Containers (LXC) is an operating system-level virtualization method for running multiple isolated Linux systems (containers) on a single control host (LXC host)

https://wiki.archlinux.org/index.php/Linux_Containers

- Linux containers (LXC) is an open source, lightweight operating system-level virtualization software that helps us run multiple isolated Linux systems (containers) on a single Linux host. LXC provides a Linux environment as close as possible to a standard Linux installation but without the need for a separate kernel. LXC is not a replacement for standard virtualization software such as VMware, VirtualBox, and KVM, but it is good enough to provide an isolated environment that has its own CPU, memory, block I/O, and network.

https://www.itzgeek.com/how-tos/linux/ubuntu-how-tos/setup-linux-container-with-lxc-on-ubuntu-16-04-14-04.html

- LXC

To understand LXD you first have to understand LXC

LXC, short for “Linux containers”, is a solution for virtualizing software at the operating system level within the Linux kernel.

LXC lets you run single applications in virtual environments, although you can also virtualize an entire operating system inside an LXC container if you’d like.

LXC’s main advantages include making it easy to control a virtual environment using userspace tools from the host OS, requiring less overhead than a traditional hypervisor and increasing the portability of individual apps by making it possible to distribute them inside containers.

If you’re thinking that LXC sounds a lot like Docker or CoreOS containers, it’s because LXC used to be the underlying technology that made Docker and CoreOS tick

LXD

It’s an extension of LXC.

The more technical way to define LXD is to describe it as a REST API that connects to liblxc, the LXC software library.

LXD, which is written in Go, creates a system daemon that apps can access locally using a Unix socket, or over the network via HTTPS.

LXD offers advanced features not available from LXC, including live container migration and the ability to snapshot a running container.

LXC+LXD vs. Docker/CoreOS

LXD is designed for hosting virtual environments that “will typically be long-running and based on a clean distribution image,” whereas “Docker focuses on ephemeral, stateless, minimal containers that won’t typically get upgraded or re-configured but instead just be replaced entirely.”

You should consider the type of deployment you will have to manage before making a choice regarding LXD or Docker (or CoreOS, which is similar to Docker in this regard). Are you going to be spinning up large numbers of containers quickly based on generic app images? If so, go with Docker or CoreOS. Alternatively, if you intend to virtualize an entire OS, or to run a persistent virtual app for a long period, LXD will likely prove a better solution.

https://www.sumologic.com/blog/code/lxc-lxd-explaining-linux-containers/
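The "REST API over a local Unix socket" idea from the notes above can be sketched in Python with only the standard library. This is a minimal sketch, not LXD's own client: the socket path is an assumption (it differs between package and snap installs), and `UnixHTTPConnection` and `lxd_get` are hypothetical helper names.

```python
import http.client
import json
import socket

# Assumption: socket location of a non-snap LXD install; adjust for your system.
LXD_SOCKET = "/var/lib/lxd/unix.socket"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used to fill in the Host header.
        super().__init__("lxd", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def lxd_get(path: str, socket_path: str = LXD_SOCKET) -> dict:
    """Send GET <path> to the daemon and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()
```

On a host with a running LXD daemon and permission to open the socket, `lxd_get("/1.0")` should return the server information document; without a daemon the call raises `OSError`.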

- What is LXC?

LXC is a userspace interface for the Linux kernel containment features. Through a powerful API and simple tools, it lets Linux users easily create and manage system or application containers

What is LXD?

LXD isn't a rewrite of LXC, in fact it's building on top of LXC to provide a new, better user experience. Under the hood, LXD uses LXC through liblxc and its Go binding to create and manage the containers. It's basically an alternative to LXC's tools and distribution template system with the added features that come from being controllable over the network

https://stackshare.io/stackups/lxc-vs-lxd

- LXD is a container hypervisor providing a ReST API to manage LXC containers.

https://tutorials.ubuntu.com/tutorial/tutorial-setting-up-lxd-1604#0

- LXD, pronounced Lex-Dee, is an expansion of LXC, the Linux container technology behind Docker. Specifically, according to Stéphane Graber, an Ubuntu project engineer, LXD is a "daemon exporting an authenticated representational state transfer application programming interface (REST API) both locally over a unix socket and over the network using https. There are then two clients for this daemon, one is an OpenStack plugin, the other a standalone command line tool." Its main features are to include:

Secure by default (unprivileged containers, apparmor, seccomp, etc.)

Image based workflow (no more locally built rootfs)

Support for online snapshotting, including running state with Checkpoint/Restore in Userspace (CRIU)

Live migration support

Shell command control

You can run Docker inside LXD technology.

We see LXD as being complementary with Docker, not a replacement.

"With Xen or KVM you create a space in memory where you emulate a PC and then you install the kernel and the operating system.

There's only one kernel, the host kernel. Instead of running another kernel in memory space, you just run, say, a CentOS file system.

So while LXD will take up more resources than a pure container, it won't take up as much memory room as a VM approach.

In addition, an LXD container will have access to the resources and speed of the hardware without a VM's need to emulate hardware.

http://www.zdnet.com/article/ubuntu-lxd-not-a-docker-replacement-a-docker-enhancement/

- linuxcontainers.org is the umbrella project behind LXC, LXD and LXCFS.

The goal is to offer a distro and vendor neutral environment for the development of Linux container technologies.

Our main focus is system containers. That is, containers which offer an environment as close as possible as the one you'd get from a VM but without the overhead that comes with running a separate kernel and simulating all the hardware.

LXC is the well known set of tools, templates, library and language bindings. It's pretty low level, very flexible and covers just about every containment feature supported by the upstream kernel.

LXD is the new LXC experience. It offers a completely fresh and intuitive user experience with a single command line tool to manage your containers. Containers can be managed over the network in a transparent way through a REST API. It also works with large-scale deployments by integrating with OpenStack.

LXCFS

Userspace (FUSE) filesystem offering two main things:

https://linuxcontainers.org/

- The relationship between LXD and LXC

LXD works in conjunction with LXC and is not designed to replace or supplant LXC. Instead, it’s intended to make LXC-based containers easier to use through the addition of a back-end daemon supporting a REST API and a straightforward CLI client that works with both the local daemon and remote daemons via the REST API.

https://blog.scottlowe.org/2015/05/06/quick-intro-lxd/

- With the Docker vs LXC discussion, we have to take into account IT operations including dev and test environments. While BSD jails have focused on IT Operations, Docker has focused on the development and test organizations.

A simple way to package and deliver applications and all their dependencies, one that enables seamless application portability and mobility.

Docker benefits:

Reduces a container to a single process which is then easily managed with Docker tools.

Encapsulates application configuration and delivery complexity to dramatically simplify and eliminate the need to repeat these activities manually.

Provides a highly efficient compute environment for applications that are stateless and micro-services based, as well as many stateful applications like databases, message bus

Is used very successfully by many groups, particularly Dev and Test, as well as microservices-based production environments.

Docker limitations:

-Treats containers differently from a standard host, such as sharing the host’s IP address and providing access to the container via a selectable port. This approach can cause management issues when using traditional applications and management tools that require access to Linux utilities such as cron, ssh, daemons, and logging.

-Uses layers and disables storage persistence, which results in reduced disk subsystem performance

-Is not ideal for stateful applications due to limited volume management in case of container failover.

LXC enters the LXC vs Docker discussion because it:

-Is essentially a lightweight VM with its own hostname, IP address, file systems, and full OS init.d, and it provides direct SSH access.

-Performs nearly as well as bare metal, and better than traditional VMs in almost all use cases, and particularly when the application can take advantage of parallelism.

-Can efficiently run one or more multi-process applications.

-An LXC-based container can run almost any Linux-based application without sacrificing performance or operational ease of use. This makes LXC an ideal platform for containerizing performance-sensitive, data-intensive enterprise applications.

LXC Benefits:

Provides a “normal” OS environment that supports all the features and capabilities that are available in the Linux environment.

Supports layers and enables Copy-On-Write cloning and snapshots, and is also file-system neutral.

Uses simple, intuitive, and standard IP addresses to access the containers and allows full access to the host file.

Supports static IP addressing, routable IPs, multiple network devices.

Provides full root access.

Allows you to create your own network interfaces.

LXC Limitations:

Inconsistent feature support across different Linux distributions. LXC is primarily being maintained & developed by Canonical on Ubuntu platform.

Docker is a great platform for building new web-scale, microservices applications or optimized Dev/Test organizations, while LXC containers provide a lightweight, zero-performance-impact alternative to traditional hypervisor-based virtualization, and is thus better suited for I/O-intensive data applications

https://robinsystems.com/blog/linux-containers-comparison-lxc-docker/

- Docker vs LXD

Docker specializes in deploying apps

LXD specializes in deploying (Linux) Virtual Machines

Containers are a lightweight virtualization mechanism that does not require you to set up a virtual machine on an emulation of physical hardware.

lxc: userspace interface for the Linux kernel containment features. This is the guy who manages kernel namespaces, AppArmor and SELinux profiles, chroots, kernel capabilities and every other kernel related stuff

lxd: is a container "hypervisor". It is composed of a daemon (lxd), the command-line interface (lxc) and an OpenStack plugin. This guy was developed to provide more flexibility and features to lxc, while it still uses it under the hood.

A self-contained OS userspace is created with its isolated infrastructure.

You create many virtual machines that have userspace and kernel isolation, but they are not complete virtual machines since they are not running separate kernels, neither are they paravirtualized for the same reason

Docker used lxc technology as the underlying layer to communicate with the kernel, but today it uses its own library, libcontainer.

The filesystem is an abstraction to Docker, while lxc uses filesystem features directly. Network is also an abstraction while with lxc you can set up ip addresses and routing configurations more easily.

https://unix.stackexchange.com/questions/254956/what-is-the-difference-between-docker-lxd-and-lxc

- Singularity enables users to have full control of their environment. Singularity containers can be used to package entire scientific workflows, software and libraries, and even data. This means that you don’t have to ask your cluster admin to install anything for you-you can put it in a Singularity container and run. Did you already invest in Docker? The Singularity software can import your Docker images without having Docker installed or being a superuser. Need to share your code? Put it in a Singularity container and your collaborator won’t have to go through the pain of installing missing dependencies. Do you need to run a different operating system entirely? You can “swap out” the operating system on your host for a different one within a Singularity container

http://singularity.lbl.gov/

- The Scientific Filesystem (SCIF) provides internal modularity of containers, and it makes it easy for the creator to give the container implied metadata about software. For example, installing a set of libraries, defining environment variables, or adding labels that belong to app foo makes a strong assertion that those dependencies belong to foo. When I run foo, I can be confident that the container is running in this context, meaning with foo's custom environment, and with foo’s libraries and executables on the path. This is drastically different from serving many executables in a single container because there is no way to know which are associated with which of the container’s intended functions

https://singularity.lbl.gov/docs-scif-apps

- Shifter enables container images for HPC. In a nutshell, Shifter allows an HPC system to efficiently and safely allow end-users to run a docker image. Shifter consists of a few moving parts 1) a utility that typically runs on the compute node that creates the run time environment for the application 2) an image gateway service that pulls images from a registry and repacks it in a format suitable for the HPC system (typically squashfs) 3) and example scripts/plugins to integrate Shifter with various batch scheduler systems.

https://github.com/NERSC/shifter

Docker vs Singularity vs Shifter in an HPC environment

- build is the “Swiss army knife” of container creation. You can use it to download and assemble existing containers from external resources like Singularity Hub and Docker Hub.

In addition, build can produce containers in three different formats. Format types can be specified by passing the following options to build:

compressed read-only squashfs file system suitable for production (default)

writable ext3 file system suitable for interactive development (--writable option)

writable (ch)root directory called a sandbox for interactive development (--sandbox option)

By default the container will be converted to a compressed, read-only squashfs file.

If you want your container in a different format use the --writable or --sandbox options

https://singularity.lbl.gov/docs-build-container
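The three build formats map onto the command line roughly as follows. A small sketch that only assembles the argument list and does not run anything; the helper name `singularity_build_cmd`, the image file names and the `docker://ubuntu:16.04` source are illustrative assumptions, while the `--writable` and `--sandbox` options come from the notes above.

```python
from typing import List, Optional


def singularity_build_cmd(target: str, source: str,
                          fmt: Optional[str] = None) -> List[str]:
    """Return the argv for `singularity build`.

    fmt is None for the default squashfs image, or "writable" / "sandbox".
    """
    if fmt not in (None, "writable", "sandbox"):
        raise ValueError(f"unknown format: {fmt!r}")
    cmd = ["singularity", "build"]
    if fmt is not None:
        cmd.append(f"--{fmt}")  # --writable or --sandbox
    cmd += [target, source]
    return cmd


# Default: compressed, read-only squashfs image (hypothetical file names).
print(singularity_build_cmd("ubuntu.simg", "docker://ubuntu:16.04"))
# Sandbox directory for interactive development.
print(singularity_build_cmd("ubuntu_dir", "docker://ubuntu:16.04", "sandbox"))
```

The resulting list could be handed to `subprocess.run` on a machine where Singularity is installed.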

- Although scif is not exclusively for containers, in that a container can provide an encapsulated, reproducible environment, the scientific filesystem works optimally when contained. Containers traditionally have one entry point, one environment context, and one set of labels to describe it. A container created with a Scientific Filesystem can expose multiple entry points, each that includes its own environment, metadata, installation steps, tests, files, and a primary executable script. SCIF thus brings internal modularity and programmatic accessibility to encapsulated, reproducible environments

https://sci-f.github.io/

want to provide modular tools for your lab or other scientists.

A SCIF recipe or container with a filesystem could serve different environments for tools for your domain of interest.

want to easily expose different interactive environments. For example, SCIF could be used to expose the same python virtual environment, but exporting different variables to the environment to determine the machine learning backend to use.

https://sci-f.github.io/examples
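The "same interpreter, different exported variables" example can be modelled in a few lines. This is not the SCIF API, only a sketch of the idea: the app names, the `ML_BACKEND` variable and its values are all hypothetical.

```python
import os
from typing import Dict, Optional

# Hypothetical apps: each one shares the interpreter but exports its own variables.
APPS: Dict[str, Dict[str, str]] = {
    "train-tensorflow": {"ML_BACKEND": "tensorflow"},
    "train-theano": {"ML_BACKEND": "theano"},
}


def app_environment(app: str,
                    base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return the base environment overlaid with the variables of one app."""
    env = dict(os.environ if base is None else base)
    env.update(APPS[app])
    return env


env = app_environment("train-theano", {"PATH": "/usr/bin"})
print(env["ML_BACKEND"])
```

Each entry point gets its own environment while the underlying software stays shared, which is the modularity the SCIF notes describe.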

- Containers are encapsulations of system environments

Docker is the most well known and utilized container platform

Designed primarily for network micro-service virtualization

Facilitates creating, maintaining and distributing container images

Containers are kinda reproducible

Easy to install, well documented, standardized

If you ever need to scale beyond your local resources, it may be a dead end path!

Docker, and other enterprise-focused containers, are not designed for, efficient or even compatible with traditional HPC

Singularity: Design Goals

Architected to support “Mobility of Compute”, agility, BYOE, and portability

Single file based container images

Facilitates distribution, archiving, and sharing

Very efficient for parallel file systems

No system, architectural or workflow changes necessary to integrate on HPC

Limits user’s privileges (inside user == outside user)

No root owned container daemon

Simple integration with resource managers, InfiniBand, GPUs, MPI, file systems, and supports multiple architectures (x86_64, PPC, ARM, etc.)

Container technologies utilize the host’s kernel and thus have the ability to run contained applications with the same performance characteristics as native applications.

There is a minor theoretical performance penalty as the kernel must now navigate namespaces.

Singularity, on the other hand, is not designed around the idea of micro-service process isolation.

Therefore it uses the smallest number of namespaces necessary to achieve its primary design goals.

This gives Singularity a much lighter footprint, greater performance potential and easier integration than container platforms that are designed for full isolation.

http://www.hpcadvisorycouncil.com/events/2017/stanford-workshop/pdf/GMKurtzer_Singularity_Keynote_Tuesday_02072017.pdf#43
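To see which namespaces a process actually lives in, Linux exposes them as symlinks under `/proc/<pid>/ns`. A minimal sketch, assuming a Linux host with `/proc` mounted; the helper name `current_namespaces` is made up for illustration.

```python
import os
from typing import Dict


def current_namespaces(pid: str = "self") -> Dict[str, str]:
    """Map namespace name (mnt, pid, net, ...) to its identifier string."""
    ns_dir = f"/proc/{pid}/ns"
    result: Dict[str, str] = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, or /proc is not mounted
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target names the namespace instance.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable with current privileges
    return result


for name, ident in current_namespaces().items():
    print(name, ident)
```

Running this on the host and again inside a container shows which identifiers differ; a runtime that uses few namespaces leaves most of them identical to the host's.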

"Singularity enables users to have full control of their environment.

Singularity containers can be used to package entire scientific workflows, software and libraries, and even data. This means that you don’t have to ask your cluster admin to install anything for you-you can put it in a Singularity container and run."

http://www.sdsc.edu/support/user_guides/tutorials/singularity.html

- With Singularity, developers who like to be able to easily control their own environment will love Singularity's flexibility. Singularity does not provide a pathway for escalation of privilege (as do other container platforms which are thus not applicable for multi-tenant resources) so you must be able to become root on the host system (or virtual machine) in order to modify the container.

https://github.com/singularityware/singularity

- OpenVZ is a container-based virtualization for Linux. OpenVZ creates multiple secure, isolated Linux containers (otherwise known as VEs or VPSs) on a single physical server enabling better server utilization and ensuring that applications do not conflict. Each container performs and executes exactly like a stand-alone server; a container can be rebooted independently and have root access, users, IP addresses, memory, processes, files, applications, system libraries and configuration files.

https://openvz.org/Main_Page

- unlike VMs, containers have a dual lens you can view them through: are they infrastructure (aka “lightweight VMs”) or are they application management and configuration systems? The reality is that they are both. If you are an infrastructure person you likely see them as the former and if a developer you likely see them as the latter.

VMware ESX and Xen both used Intel-VTx by default. This also allowed the creation of KVM which depends 100% on the Intel-VTx chipset for these capabilities.

Unfortunately, while you can run an unmodified kernel on Intel-VTx, system calls that touch networking and disk still wind up hitting emulated hardware. Intel-VTx primarily solved the issues of segregating, isolating, and allowing high-performance access to direct CPU calls and memory access (via Extended Page Table [EPT]). Intel-VT does not solve access to network and disk, although SR-IOV, VT-d, and related attempted to address this issue, but never quite got there

In order to eke out better performance from networking and disk, all of the major hypervisors turn to paravirtualized drivers.

Paravirtualized drivers are very similar to Xen’s paravirtualized kernels.

Within the hypervisor and its guest operating system there is a special paravirtualization driver for the network or disk. You can think of this driver as being “split” between the hypervisor kernel and the guest kernel, allowing greater throughput.

Containers and Security

It’s a popular refrain to talk about containers as being “less secure” than hypervisors, despite the fact that for some of us, containers were originally conceived as an application security mechanism. They allow packaging up an application into a very low attack surface, running it as an unprivileged user, in an isolated jail!

That’s far better than a typical VM-based approach where you lug along most of an operating system that has to be patched and maintained regularly.

But many will point to the magic voodoo that a hypervisor can do to provide isolation, such as Extended Page Table (EPT). Yet, EPT, and many other capabilities in the hypervisor are no longer provided by the hypervisor itself, but by the Intel-VTx instruction set. And there is nothing special that keeps the Linux kernel from calling those instructions. In fact, there is already code out of Stanford from the DUNE project that does just this for regular applications. Integrating it to container platforms would be trivial.

You can expect Intel to continue to enrich the Intel-VTx instruction set and for the Linux kernel and containers to take advantage of those capabilities without the hypervisor as an intermediary.

Combined with removing most of the operating system wrapped arbitrarily around the application in a hypervisor VM, containers may actually already be more secure than the hypervisor model

This then leads us to understand that hypervisors’ sole value resides primarily around supporting many operating systems using PV drivers, something that is not a requirement in the next generation datacenter.

If you don’t care about multiple guest operating systems, if you integrate the DUNE libraries from Stanford into the container(s), if you depend on standard Linux user permissions, if you just talk directly to the physical resources, containers are:

Highly performant

Probably as secure as any hypervisor if configured properly

Significantly simpler than a hypervisor with less overhead and operating system bloat

as we become container-centric, we’re inherently becoming application-centric

The apps and modern cloud-native app developer just cares about the infrastructure contract: I call an API, I get the infrastructure resource, it either performs to its SLAs or doesn’t, if it doesn’t I kill it and replace it with another, and if I start to run out of oomph with the infrastructure I ordered, I order another one (horizontal scaling).

More and more, customers choose to use OpenStack and KVM VMs as a substrate to run on top of

http://cloudscaling.com/blog/cloud-computing/will-containers-replace-hypervisors-almost-certainly/

- Dune provides ordinary user programs with safe and efficient access to privileged CPU features that are traditionally only available to kernels. It does so by leveraging modern virtualization hardware, enabling direct execution of privileged instructions in an unprivileged context. We have implemented Dune for Linux, using Intel's VT-x virtualization architecture to expose access to exceptions, virtual memory, privilege modes, and segmentation. By making these hardware mechanisms available at user-level, Dune creates opportunities to deploy novel systems without specialized kernel modifications.

http://dune.scs.stanford.edu/

- Windows Server Containers

Windows Server Containers are a lightweight operating system virtualization method used to separate applications or services from other services that are running on the same container host. To enable this, each container has its own view of the operating system, processes, file system, registry, and IP addresses

Container endpoints can be attached to a local host network (e.g. NAT), the physical network or an overlay virtual network created through the Microsoft Software Defined Networking (SDN) stack.

https://docs.microsoft.com/en-us/windows-server/networking/sdn/technologies/containers/container-networking-overview

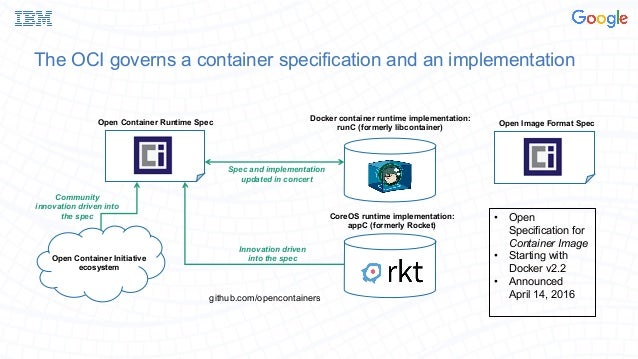

- Established in June 2015 by Docker and other leaders in the container industry, the OCI currently contains two specifications: the Runtime Specification (runtime-spec) and the Image Specification (image-spec). The Runtime Specification outlines how to run a “filesystem bundle” that is unpacked on disk. At a high-level, an OCI implementation would download an OCI Image then unpack that image into an OCI Runtime filesystem bundle. At this point, the OCI Runtime Bundle would be run by an OCI Runtime.

https://www.opencontainers.org/
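A "filesystem bundle" is, at its simplest, a directory holding a `config.json` next to a root filesystem directory. The sketch below writes such a skeleton; it is illustrative only, since the `rootfs` it creates is empty and a config a runtime would accept normally carries more fields than shown. The helper name `write_minimal_bundle` is hypothetical.

```python
import json
import os
import tempfile


def write_minimal_bundle(bundle_dir: str) -> str:
    """Create <bundle_dir>/rootfs and <bundle_dir>/config.json; return the config path."""
    rootfs = os.path.join(bundle_dir, "rootfs")
    os.makedirs(rootfs, exist_ok=True)  # an unpacked image would go here
    config = {
        "ociVersion": "1.0.0",
        "process": {
            "args": ["sh"],  # command started inside the container
            "cwd": "/",
            "user": {"uid": 0, "gid": 0},
        },
        "root": {"path": "rootfs"},  # relative to the bundle directory
    }
    config_path = os.path.join(bundle_dir, "config.json")
    with open(config_path, "w", encoding="utf-8") as fh:
        json.dump(config, fh, indent=2)
    return config_path


with tempfile.TemporaryDirectory() as tmp:
    print(sorted(os.listdir(os.path.dirname(write_minimal_bundle(tmp)))))
```

An OCI runtime is pointed at the bundle directory, reads `config.json`, and uses `root.path` to locate the filesystem to run.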

- Kata Containers is an open source project and community working to build a standard implementation of lightweight Virtual Machines (VMs) that feel and perform like containers, but provide the workload isolation and security advantages of VMs.

The Kata Containers project has six components: Agent, Runtime, Proxy, Shim, Kernel and packaging of QEMU 2.11.

It is designed to be architecture agnostic, run on multiple hypervisors and be compatible with the OCI specification for Docker containers and CRI for Kubernetes.

https://katacontainers.io/

- Firecracker runs in user space and uses the Linux Kernel-based Virtual Machine (KVM) to create microVMs.

The fast startup time and low memory overhead of each microVM enable you to pack thousands of microVMs onto the same machine.

Firecracker is an alternative to QEMU, an established VMM with a general purpose and broad feature set that allows it to host a variety of guest operating systems.

What is the difference between Firecracker and Kata Containers and QEMU?

Kata Containers is an OCI-compliant container runtime that executes containers within QEMU based virtual machines. Firecracker is a cloud-native alternative to QEMU that is purpose-built for running containers safely and efficiently, and nothing more. Firecracker provides a minimal required device model to the guest operating system while excluding non-essential functionality (there are only 4 emulated devices: virtio-net, virtio-block, serial console, and a 1-button keyboard controller used only to stop the microVM).

https://firecracker-microvm.github.io/
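Firecracker and QEMU-based runtimes such as Kata Containers rely on KVM, which in turn needs hardware virtualization support. A rough host check, assuming an x86 Linux machine where `/proc/cpuinfo` has a `flags` line (`vmx` for Intel VT-x, `svm` for AMD-V); the function names are hypothetical.

```python
import os
from typing import Set


def hw_virt_flags(cpuinfo_text: str) -> Set[str]:
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            words = line.split(":", 1)[1].split()
            found.update(w for w in words if w in ("vmx", "svm"))
    return found


def kvm_device_present(path: str = "/dev/kvm") -> bool:
    """True when the KVM device node exists (the kvm module is loaded)."""
    return os.path.exists(path)


sample = "processor\t: 0\nflags\t\t: fpu vme de pse vmx sse sse2\n"
print(hw_virt_flags(sample), kvm_device_present())
```

On a real host the text would come from reading `/proc/cpuinfo`; an empty set or a missing `/dev/kvm` suggests microVMs cannot be started there.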

- CoreOS is an open-source lightweight operating system based on the Linux kernel and designed for providing infrastructure to clustered deployments, while focusing on automation, ease of application deployment, security, reliability and scalability. As an operating system, CoreOS provides only the minimal functionality required for deploying applications inside software containers, together with built-in mechanisms for service discovery and configuration sharing

https://en.wikipedia.org/wiki/CoreOS

- With Container Linux and Kubernetes, CoreOS provides the key components to secure, simplify and automatically update your container infrastructure.

https://coreos.com/

- rkt is an application container engine developed for modern production cloud-native environments. It features a pod-native approach, a pluggable execution environment, and a well-defined surface area that makes it ideal for integration with other systems.

https://coreos.com/rkt/

Docker is a new containerization technology built on top of the LXC kernel container system, a component of the Linux OS.

Platform

Turbo was designed for the Windows platform and its containerization system is built on top of the Turbo application virtualization engine.

Docker was designed for use on Linux environments and is built on top of the LXC application virtualization system.

The Turbo VM plays the same role for Turbo containers as LXC does for Docker.

LXC can be viewed as a type of application virtualization implementation.

Turbo supports both desktop and server Windows applications, and works on all desktop and server editions of Windows from Windows Vista forward

Turbo does not require modifications to the base operating system kernel.

Turbo does not execute a parallel copy of the base operating system.

Turbo containers support many Windows-specific constructs, such as Windows Services, COM/DCOM components, named kernel object isolation, WinSxS side-by-side versioning, shell registration, clipboard data, and other mechanisms that do not directly apply to Linux operating systems.

Turbo also provides a desktop client with many features (GUI tool to launch applications, file extension associations, Start Menu integration) that allow containerized applications to interact with the user in the same way as traditionally installed desktop applications. Turbo also provides a small browser plugin that allows users to launch and stream containerized applications directly from any web browser.

Layering

For example, to build a container for a Java application that uses a MongoDB database, a Turbo user could combine a Java runtime layer with a MongoDB database layer, then stack the application code and content in an application layer on top of its dependency layers. Layers make it extremely easy to re-use shared components such as runtimes, databases, and plugins.

Layers can also be used to apply application configuration information. For example, one might have a layer that specifies the default homepage, favorites, and security settings for a browser.

This can be applied on top of a base browser layer to impose those settings onto a non-customized browser environment.

Layers can be applied dynamically and programmatically, so you can present distinct application configurations to specific groups of users without rebuilding the base application container. Continuing the browser example, one might create a base browser layer with a particular configuration layer for the development team, then use the same base browser with a different configuration layer for the sales team.
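The browser example is essentially an overlay lookup: settings in a higher layer win, everything else falls through to the base. A conceptual sketch using `collections.ChainMap`; it models the idea only and has nothing to do with Turbo's actual implementation, and all setting names and URLs are made up.

```python
from collections import ChainMap

# Hypothetical layers: a base browser plus per-team configuration layers.
base_browser = {"homepage": "about:blank", "favorites": [], "security": "default"}
dev_layer = {"homepage": "https://intranet.example/dev", "security": "relaxed"}
sales_layer = {"homepage": "https://intranet.example/sales"}


def apply_layers(*layers: dict) -> dict:
    """Merge layers given bottom-to-top; later (higher) layers override earlier ones."""
    # ChainMap searches its maps left to right, so the top layer must come first.
    return dict(ChainMap(*reversed(layers)))


print(apply_layers(base_browser, dev_layer))
print(apply_layers(base_browser, sales_layer))
```

The base layer is never modified, so the same base can be combined with a different configuration layer per team without rebuilding it.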

Docker does not distinguish between content that is imported for use only during the build process and content required by the application container at runtime.

Multi-base image support

By contrast, Docker does not support creation of images from multiple base images.

In other words, Turbo supports "multiple inheritance" through source layering, and "polymorphism" through post-layering

Continuation

Turbo's unique continue command allows execution to be continued from a specified state identifier.

Isolation Modes

Unlike Docker, Turbo containers are not required to be completely isolated from the host device resources. Turbo can fully or partially isolate objects as needed at a fine granularity.

For example, it is possible to specify that one directory subtree should be fully isolated while another one is visible from the host device.

Networking

Docker relies on root access to the host device at two levels.

First, the LXC/libcontainer containerization engine that Docker is built on is implemented within the Linux kernel.

Second, the Docker daemon itself runs with root privileges.

The use of code with kernel or root privileges opens the possibility of "break out" into privileged system resources.

By contrast, Turbo is designed to run entirely in user mode with no privileges.

Turbo containerization inherits this ability from the user mode Turbo app virtualization engine, which operates on top of (rather than within) the OS kernel.

This approach has two critical advantages:

Turbo can be used by unprivileged users on locked down desktops without elevation, improving accessibility and reducing administrative complexity;

in the event of malware execution or a vulnerability in the Turbo implementation, the affected process does not have access to root privileges on the device

Toolchain

Like Docker, Turbo provides command-line interfaces (turbo) and a scripting language (TurboScript) for automating build processes.

Configuration

In addition to dynamic configuration via a console or script, Turbo also supports configuration via a static XML-based specification that declares the files, registry keys, environment variables, and other virtual machine states that will be presented to the container

Streaming

Turbo, like Docker, supports the use of local containers and the ability to push and pull containers from a central repository.

Turbo provides the ability to efficiently stream containers over the Internet. The Turbo system includes a predictive streaming engine to launch containers efficiently over wide area networks (WANs) without requiring the endpoint to download the entire VM image. Because many applications can be multiple gigabytes in size, this prevents a large startup latency for remote end users. Turbo predictive streaming uses statistical techniques to predict application resource access patterns based on profiles of previous user interactions. Turbo then adapts the stream data flow based on predictions of subsequent required resources.
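
The source does not say which statistical techniques the streaming engine uses. As a rough sketch of the general idea only, the example below assumes a simple first-order frequency model: it counts which resource followed which in earlier sessions, then suggests the most likely next resources to prefetch. The function names, the session format, and the file names are hypothetical.

```python
from collections import Counter, defaultdict


def build_profile(sessions):
    """Count, for each resource, which resource was requested right after it."""
    transitions = defaultdict(Counter)
    for session in sessions:
        for current, following in zip(session, session[1:]):
            transitions[current][following] += 1
    return transitions


def predict_next(transitions, current, k=2):
    """Return up to k resources most often requested right after `current`."""
    counts = transitions.get(current, Counter())
    return [name for name, _ in counts.most_common(k)]


# Hypothetical access logs from three earlier launches of the same application.
sessions = [
    ["app.exe", "core.dll", "ui.dll"],
    ["app.exe", "core.dll", "net.dll"],
    ["app.exe", "core.dll", "ui.dll"],
]
profile = build_profile(sessions)
prefetch = predict_next(profile, "core.dll")
```

A streaming client built on such a profile would request the predicted resources ahead of time instead of waiting for the application to ask for them.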

https://turbo.net/docs/about/turbo-and-docker#platform

Turbo allows you to package applications and their dependencies into a lightweight, isolated virtual environment called a "container."

Containerized applications can then be run on any Windows machine that has Turbo installed, no matter the underlying infrastructure. This eliminates installs, conflicts, breaks, and missing dependencies.

Turbo containers are built on top of the Turbo Virtual Machine, an application virtualization engine that provides lightweight namespace isolation of core operating system objects such as the filesystem, registry, process, networking, and threading subsystems.

With Turbo, testers can:

Run development code in a pre-packaged, isolated environment with software-configurable networking

Rapidly roll back changes and execute tests across a span of application versions and test environments

Test in multiple client, server, and browser environments concurrently on a single physical device

Accelerate test cycles by eliminating the need to install application dependencies and modify configuration

With Turbo, system administrators can:

Remove errors due to inconsistencies between staging, production, and end-user environments

Allow users to test out new or beta versions of applications without interfering with existing versions

Simplify deployment of desktop applications by eliminating dependencies (.NET, Java, Flash) and conflicts

Improve security by locking down desktop and server environments while preserving application access

Do Turbo containers work by running a full OS virtual machine?

No. Turbo containers use a special, lightweight application-level VM called Turbo VM. Turbo VM runs in user mode on top of a single instance of the base operating system.

When I run a container with multiple base images, does it link multiple containers or make a single new container?

Running with multiple base images creates a single container with all of the base images combined. However, this is implemented in an optimized way that avoids explicit copying of the base image container contents into the new container.
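
One way to picture a combined container that does not copy its base images is a layered lookup. The sketch below is a conceptual model only, not Turbo's mechanism: it uses Python's `collections.ChainMap` to present several mappings as one view. The image contents, the `combine` helper, and the rule that later images shadow earlier ones on conflict are assumptions made for illustration.

```python
from collections import ChainMap

# Hypothetical base images, modeled as path -> content mappings.
runtime_image = {"/bin/python": "python 3", "/etc/app.conf": "runtime defaults"}
database_image = {"/bin/db": "db server", "/etc/app.conf": "db defaults"}


def combine(*images):
    """Build one merged view; images listed later shadow earlier ones (assumed)."""
    # ChainMap searches its first mapping first, so the images are reversed.
    # The empty dict in front is a writable layer private to the container.
    return ChainMap({}, *reversed(images))


container = combine(runtime_image, database_image)
container["/tmp/scratch"] = "written inside the container"
```

Reads fall through to the original image mappings, so nothing is copied, and the write to `/tmp/scratch` lands only in the container's own top layer.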

Does Turbo support virtual networking?

Yes. Controlling both inbound and outbound traffic is supported.

Does Turbo support linking multiple containers?

Yes. See the --link and --network flags.

Is there a difference between server and desktop application containers?

No, there is no special distinction. And desktop containers can contain services/servers and vice versa.

https://turbo.net/docs/about/what-is-turbo#why-use-turbo