Building a Security Operations Center

building

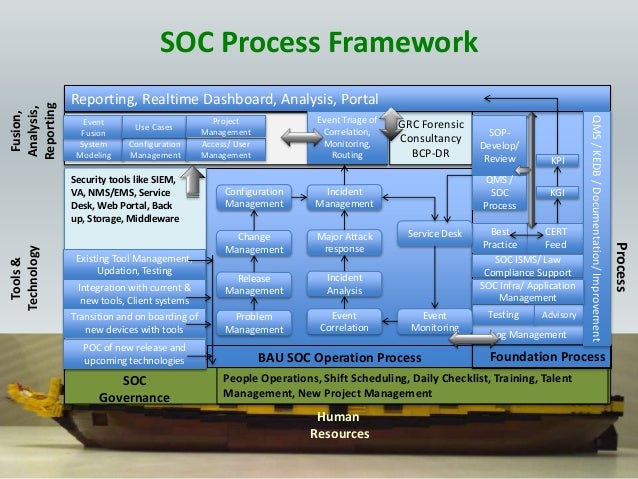

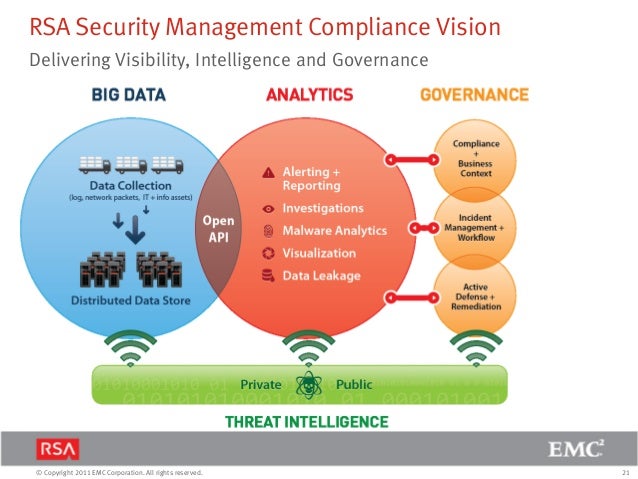

a SOC requires collaboration and communication among multiple functions (people), disparate security products (technology), and varying processes and procedures (processes)

https://finland.emc.com/collateral/white-papers/rsa-advanced-soc-solution-sans-soc-roadmap-white-paper.pdf

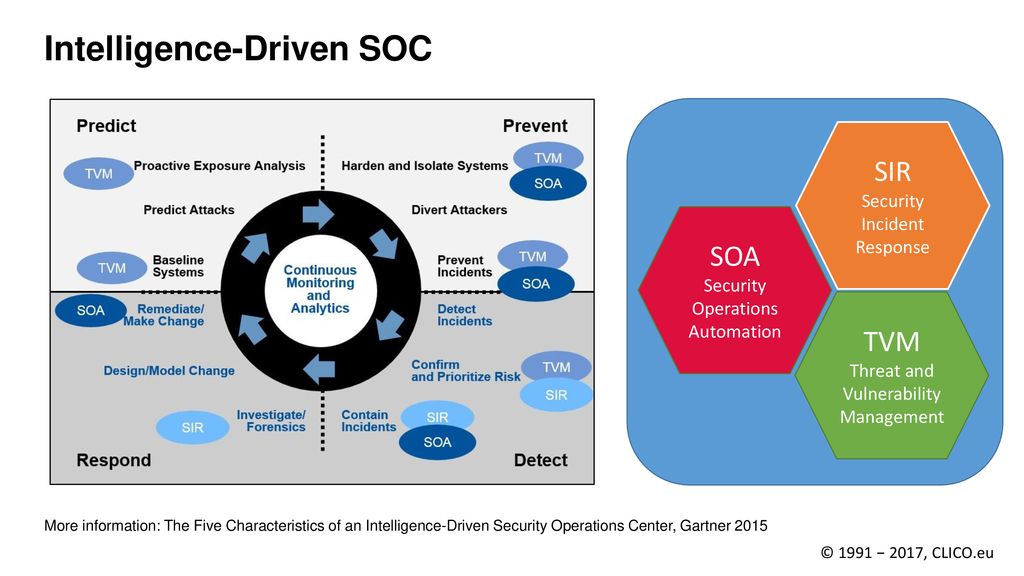

- The Five Characteristics of an Intelligence-Driven Security Operations Center

Key Challenges

Traditional security operations centers:

- Rely primarily on prevention technologies and on rule- and signature-based detection mechanisms that require prior knowledge of attacker methods, both of which are insufficient to protect against current threats.
- Treat threat intelligence (TI) as a one-way product to be consumed, rather than as a process, leading to an intelligence-poor security strategy.
- Treat incident response as an exception-based process, rather than a continuous one.

Recommendations

- Adopt a mindset based on the assumption that the organization has already been compromised.
- Adopt an intelligence-driven SOC approach with these five characteristics:
  - use multisourced threat intelligence strategically and tactically;
  - use advanced analytics to operationalize security intelligence;
  - automate whenever feasible;
  - adopt an adaptive security architecture;
  - proactively hunt and investigate.

https://www.ciosummits.com/Online_Assets_Intel_Security_Gartner.pdf
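To make "use advanced analytics" concrete, here is a minimal sketch contrasting a rule-style detector (a threshold fixed in advance, which needs prior knowledge of what "bad" looks like) with a simple statistical baseline per account. Neither rule comes from the Gartner material: the failed-login data, the threshold of 50, and the z-score cutoff of 3.0 are all illustrative assumptions.

```python
from __future__ import annotations

from statistics import mean, stdev


def signature_alert(count: int, threshold: int = 50) -> bool:
    """Rule-style detection: fires only when a threshold fixed in advance is crossed."""
    return count > threshold


def baseline_alert(history: list[int], count: int, z_cutoff: float = 3.0) -> bool:
    """Analytics-style detection: flag counts far above this entity's own baseline."""
    if len(history) < 2:
        return False  # stdev needs at least two data points
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return count > mu  # flat history: any increase is unusual
    return (count - mu) / sigma > z_cutoff


# Hypothetical hourly failed-login counts for one account
history = [2, 3, 1, 2, 4, 3, 2]
print(signature_alert(20))          # False: below the fixed threshold
print(baseline_alert(history, 20))  # True: far above this account's normal
```

The baseline rule needs no prior description of the attack, only a history of normal behavior, which is also what makes it a candidate for automation.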

- An information security operations center (or "SOC") is a location where enterprise information systems (web sites, applications, databases, data centers and servers, networks, desktops and other endpoints) are monitored, assessed, and defended.

https://en.wikipedia.org/wiki/Information_security_operations_center

Security Operations Center (SOC) - DIY or Outsource?


DTS Solution - Building a SOC (Security Operations Center)

What is a Security Operations Center (SOC)?

SOC Team Presentation 1 - Security Operations Center

Security Operation Center - Design & Build

- A network operations center (NOC) is one or more locations from which control is exercised over a computer, television broadcast, or telecommunications network.

Fortinet Security Fabric

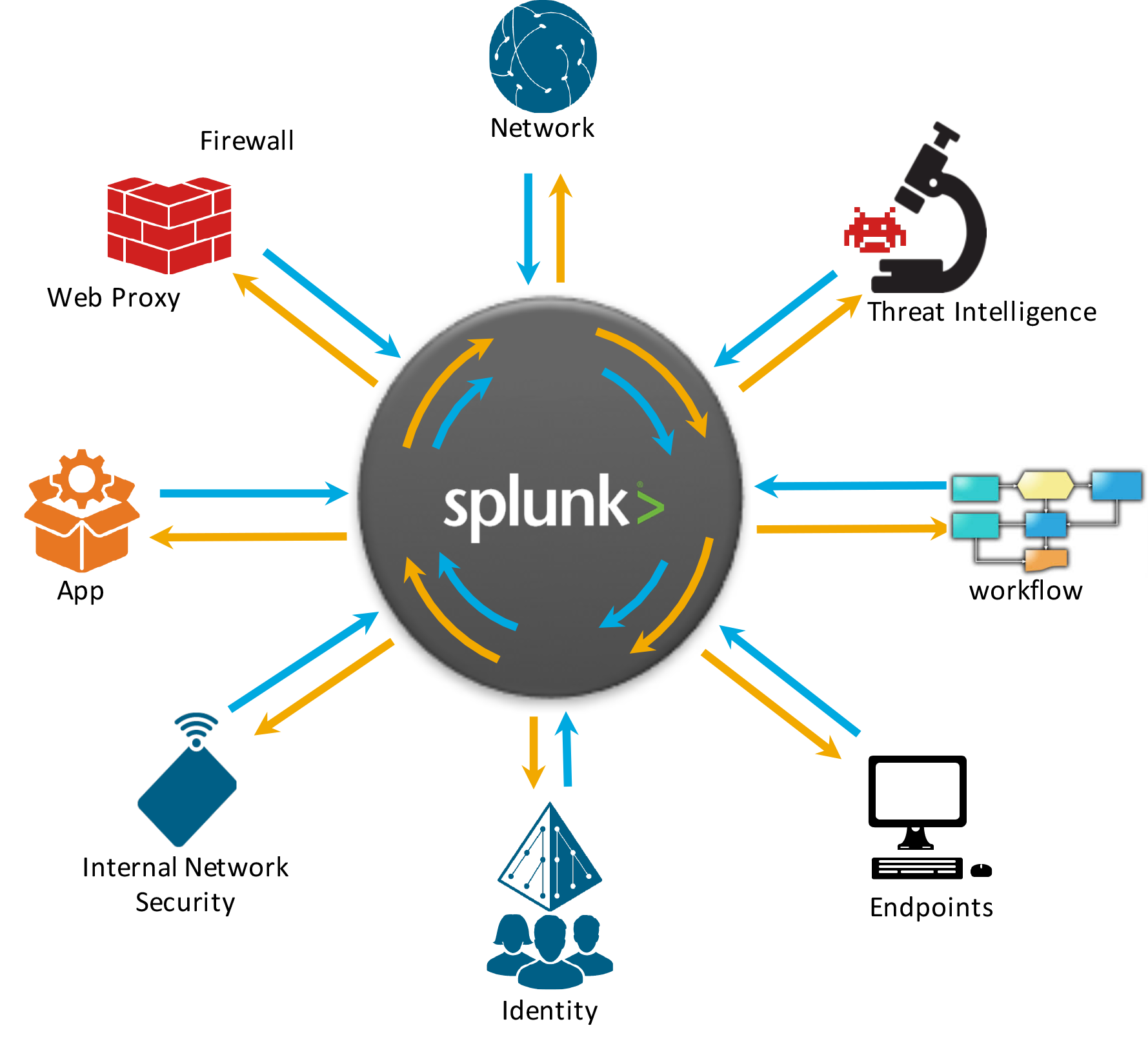

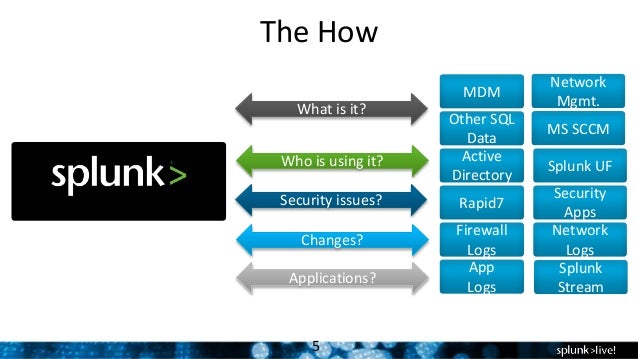

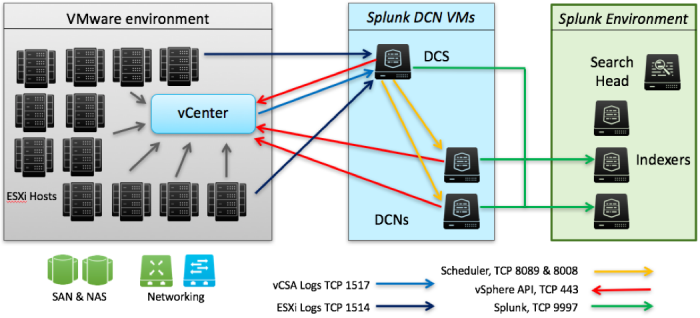

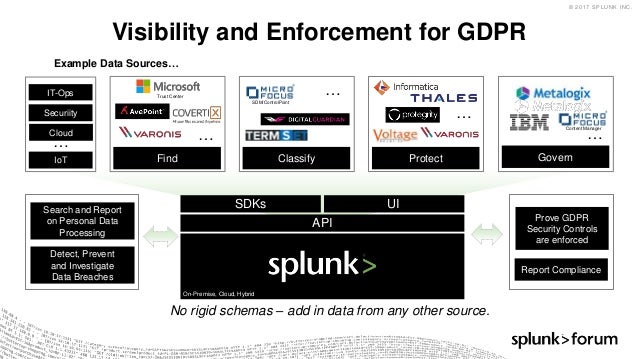

Splunk: Using Big Data for Cybersecurity

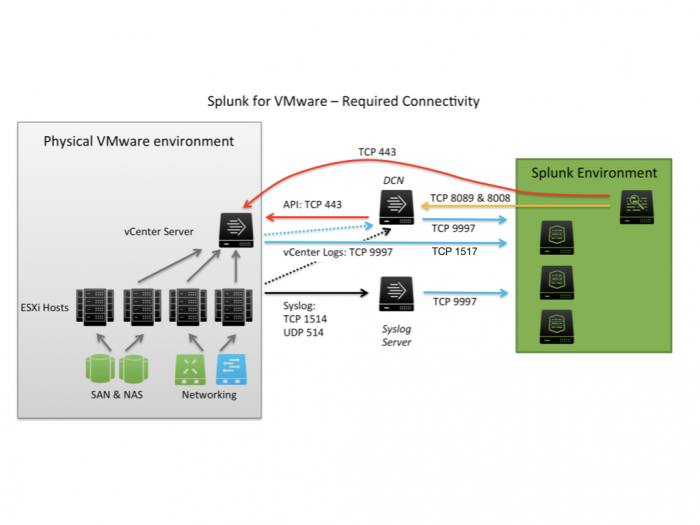

Splunk Inc. provides the leading software platform for real-time Operational Intelligence.

How to configure Splunk Log Forwarder in McAfee ESM

If you will be forwarding events from various devices through a syslog relay
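A syslog relay sitting between devices and a collector such as Splunk often needs to read each message's priority before deciding where to send it. The sketch below decodes the leading `<PRI>` field of a BSD-style syslog message, where PRI = facility × 8 + severity. The `should_forward` policy (forward warning and anything more severe) is a hypothetical example, not McAfee ESM or Splunk behavior.

```python
from __future__ import annotations

import re

# Severity keywords for codes 0-7 (RFC 3164 / RFC 5424)
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# A BSD syslog message starts with "<PRI>", where PRI = facility * 8 + severity
PRI_RE = re.compile(r"<(\d{1,3})>")


def parse_pri(message: str) -> tuple[int, int]:
    """Return (facility, severity) decoded from the leading <PRI> field."""
    m = PRI_RE.match(message)  # match() only looks at the start of the string
    if m is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(m.group(1))
    if pri > 191:  # 23 * 8 + 7 is the largest valid value
        raise ValueError(f"PRI out of range: {pri}")
    facility, severity = divmod(pri, 8)
    return facility, severity


def severity_name(message: str) -> str:
    return SEVERITIES[parse_pri(message)[1]]


def should_forward(message: str, max_severity: int = 4) -> bool:
    """Hypothetical relay policy: forward warning (4) or anything more severe."""
    return parse_pri(message)[1] <= max_severity


msg = "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"
print(parse_pri(msg))       # (4, 2): facility 4 (auth), severity 2
print(severity_name(msg))   # crit
print(should_forward(msg))  # True
```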

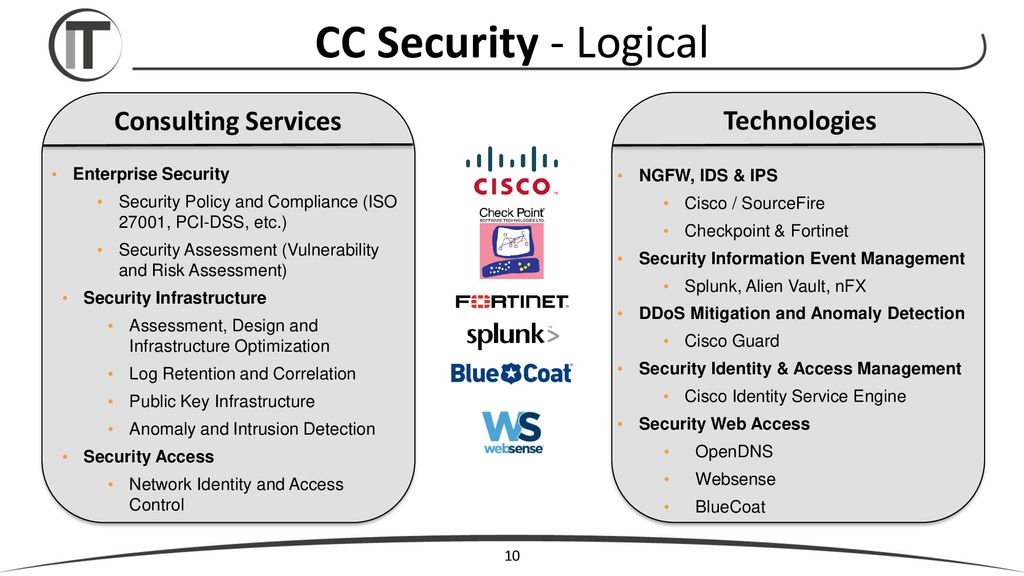

Enterprise Security

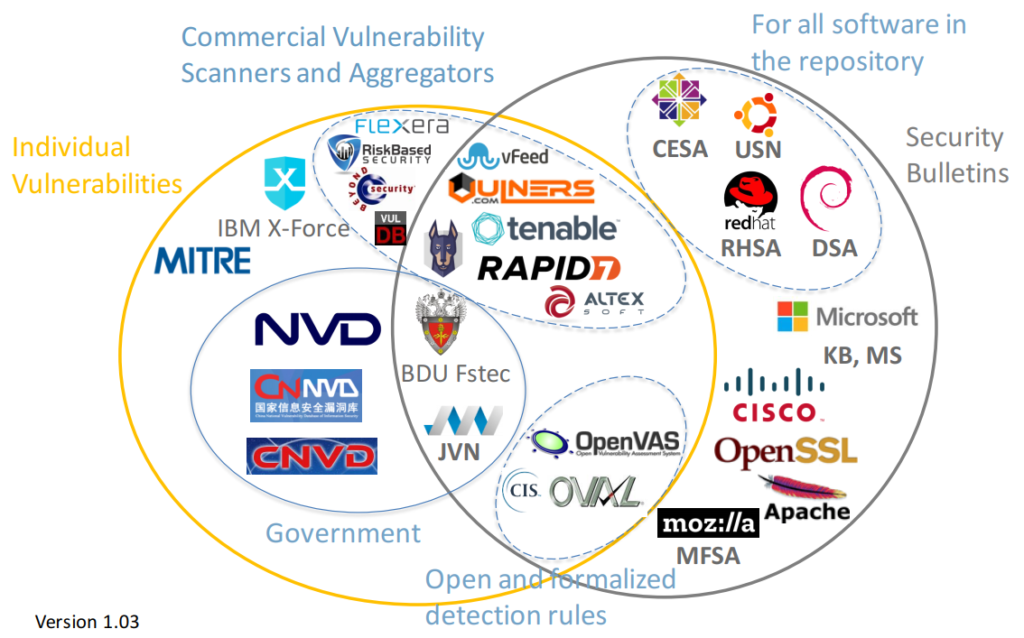

Threat and Vulnerability Management (TVM)

Security Operations Incident Response (SOIR)

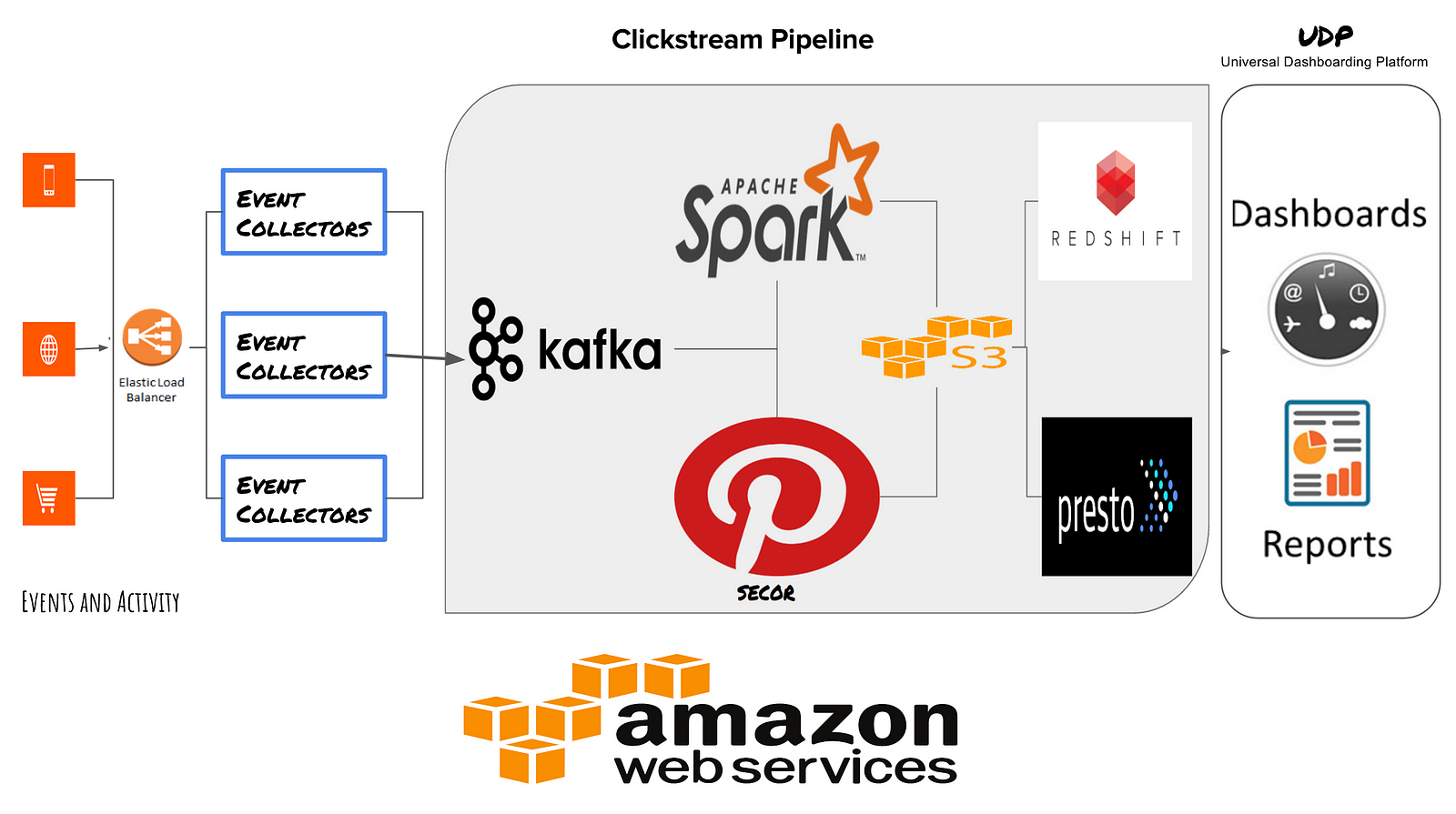

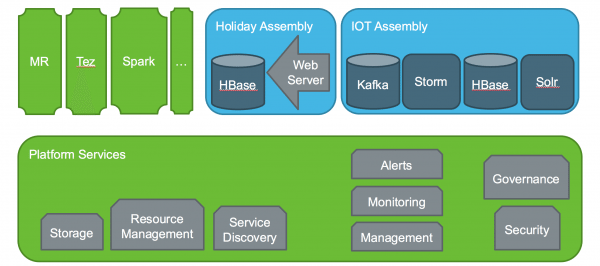

Real-Time Big Data Processing with Spark and MemSQL

Data Lake 3.0, Part II: A Multi-Colored YARN

Best Practices for Building a Data Lake with Amazon S3

- You see servers and devices, apps and logs, traffic and clouds. We see data—everywhere. Splunk® offers the leading platform for Operational Intelligence.

Machine-generated data is one of the fastest-growing and most complex areas of big data. It's also one of the most valuable, containing a definitive record of all user transactions, customer behavior, machine behavior, security threats, fraudulent activity and more. Splunk turns machine data into valuable insights no matter what business you're in. It's what we call Operational Intelligence.

http://www.splunk.com/

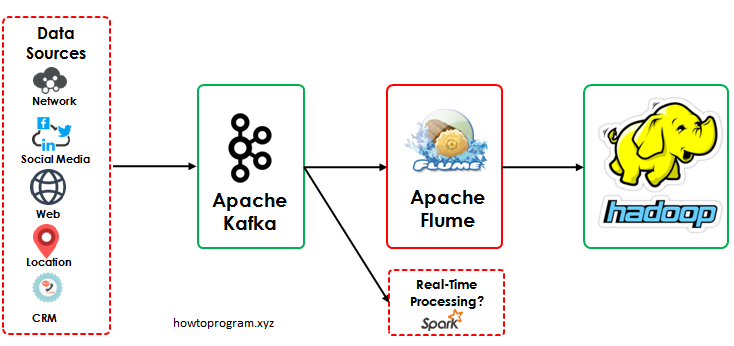

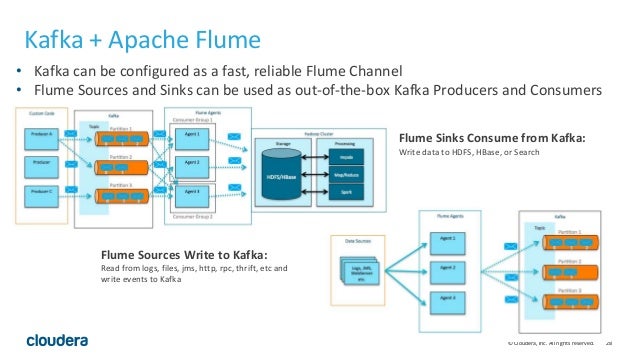

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

https://flume.apache.org/

Flume Architecture

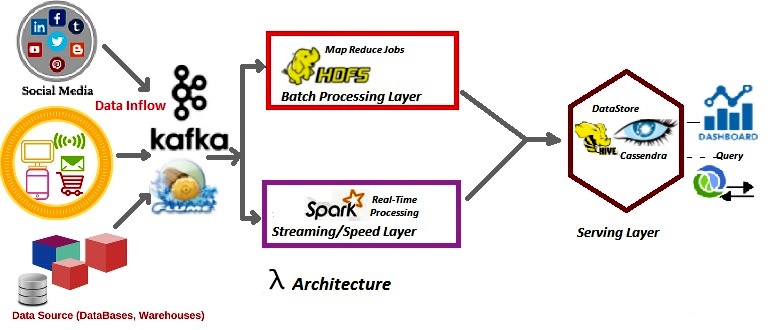
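The streaming data flow described above is built from a source that receives events, a channel that buffers them, and a sink that delivers them onward. The sketch below loosely mirrors those three roles in plain Python to show how buffering and rollback give reliability; it is not Flume's Java API, and the class and function names (`Event`, `MemoryChannel`, `run_source`, `run_sink`) are hypothetical. Flume itself uses channel transactions for this; the `rollback` method here is a simplified stand-in.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    """Flume-style event: a byte payload plus optional string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Bounded in-memory buffer between a source and a sink."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._queue = deque()

    def __len__(self) -> int:
        return len(self._queue)

    def put(self, event: Event) -> bool:
        # Refuse the event when the buffer is full, so the source can retry later
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(event)
        return True

    def take_batch(self, max_events: int) -> list:
        batch = []
        while self._queue and len(batch) < max_events:
            batch.append(self._queue.popleft())
        return batch

    def rollback(self, batch: list) -> None:
        # Return undelivered events to the front of the queue in their original order
        self._queue.extendleft(reversed(batch))


def run_source(lines, channel: MemoryChannel) -> list:
    """Source: wrap each raw log line in an Event; return the lines the channel refused."""
    refused = []
    for line in lines:
        if not channel.put(Event(body=line.encode(), headers={"type": "log"})):
            refused.append(line)
    return refused


def run_sink(channel: MemoryChannel, batch_size: int, deliver) -> int:
    """Sink: drain the channel in batches; on delivery failure, roll the batch back."""
    delivered = 0
    while len(channel):
        batch = channel.take_batch(batch_size)
        try:
            deliver(batch)
        except Exception:
            channel.rollback(batch)  # nothing is lost if the destination is down
            raise
        delivered += len(batch)
    return delivered
```

For example, with `ch = MemoryChannel(capacity=3)`, `run_source(["a", "b", "c", "d"], ch)` returns `["d"]` because the channel is full, and a sink whose `deliver` callback appends decoded bodies to a list then stores `["a", "b", "c"]`.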

Real Time Data Processing using Spark Streaming

Why Lambda Architecture in Big Data Processing

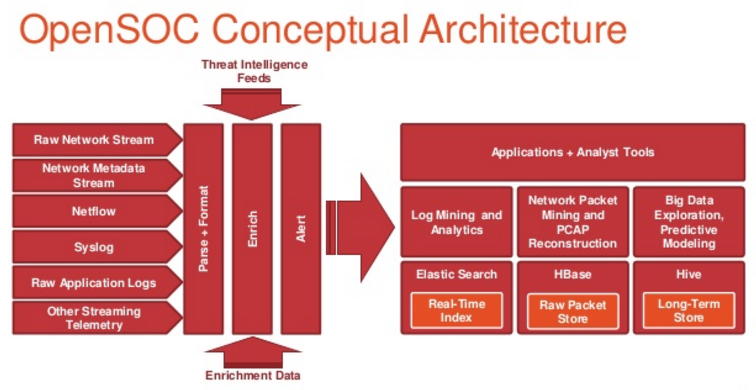

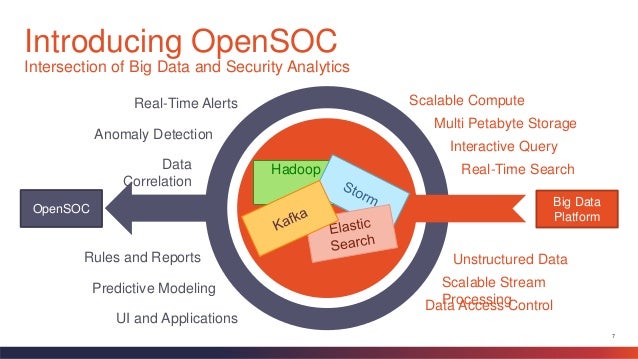

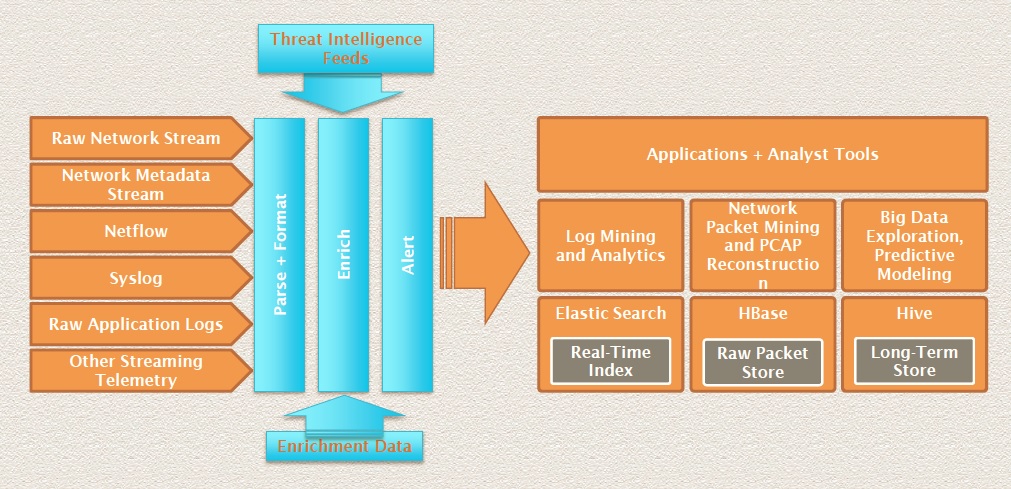
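The Lambda architecture combines a batch layer (recomputed periodically over the complete history), a speed layer (updated incrementally from recent events, for example with a stream processor such as Spark Streaming), and a serving layer that merges the two at query time. The toy in-memory sketch below shows only that merge logic; the per-source-IP count and the field name `src_ip` are assumptions chosen for illustration.

```python
from collections import Counter


def batch_view(master_dataset) -> Counter:
    """Batch layer: recompute per-source event counts from the full, immutable history."""
    return Counter(event["src_ip"] for event in master_dataset)


def speed_view(recent_events) -> Counter:
    """Speed layer: count only events that arrived after the last batch run."""
    view = Counter()
    for event in recent_events:
        view[event["src_ip"]] += 1  # incremental update, one event at a time
    return view


def query(src_ip: str, batch: Counter, realtime: Counter) -> int:
    """Serving layer: answer a query by merging the batch and real-time views."""
    return batch[src_ip] + realtime[src_ip]  # Counter returns 0 for unseen keys


history = [{"src_ip": "10.0.0.5"}, {"src_ip": "10.0.0.5"}, {"src_ip": "10.0.0.9"}]
recent = [{"src_ip": "10.0.0.5"}]
print(query("10.0.0.5", batch_view(history), speed_view(recent)))  # 3
```

When the next batch run absorbs the recent events, the speed view for that period can be discarded, which is why the speed layer only ever has to be approximately right for a short window.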

OpenSOC is a Big Data security analytics framework designed to consume and monitor network traffic and machine exhaust data of a data center. OpenSOC is extensible and is designed to work at a massive scale.

The OpenSOC project is a collaborative open source development project dedicated to providing an extensible and scalable advanced security analytics tool. It has strong foundations in the Apache Hadoop Framework and values collaboration for high-quality community-based open source development.

http://opensoc.github.io/
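One common step when monitoring network traffic at scale is enriching each flow record with context before it is analyzed. The sketch below tags flows with a direction and any matching threat-intelligence labels; it is a hypothetical illustration, not OpenSOC code. The feed contents, the internal range `10.0.0.0/8`, and the record fields are assumptions (the feed uses RFC 5737 documentation addresses).

```python
from ipaddress import ip_address, ip_network

# Hypothetical threat-intel feed: CIDR block -> label
THREAT_INTEL = {
    ip_network("203.0.113.0/24"): "known-c2",
    ip_network("198.51.100.7/32"): "scanner",
}

# Assumed internal address space for this example
INTERNAL = ip_network("10.0.0.0/8")


def enrich(flow: dict) -> dict:
    """Return a copy of a flow record with direction and threat-intel tags added."""
    enriched = dict(flow)
    src = ip_address(flow["src_ip"])
    dst = ip_address(flow["dst_ip"])
    enriched["outbound"] = src in INTERNAL and dst not in INTERNAL
    # Collect labels for every feed entry that contains either endpoint
    enriched["ti_labels"] = sorted(
        label for net, label in THREAT_INTEL.items() if src in net or dst in net
    )
    enriched["alert"] = bool(enriched["ti_labels"])
    return enriched


print(enrich({"src_ip": "10.1.2.3", "dst_ip": "203.0.113.50", "dst_port": 443}))
```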

Navigating the maze of Cyber Security Intelligence and Analytics

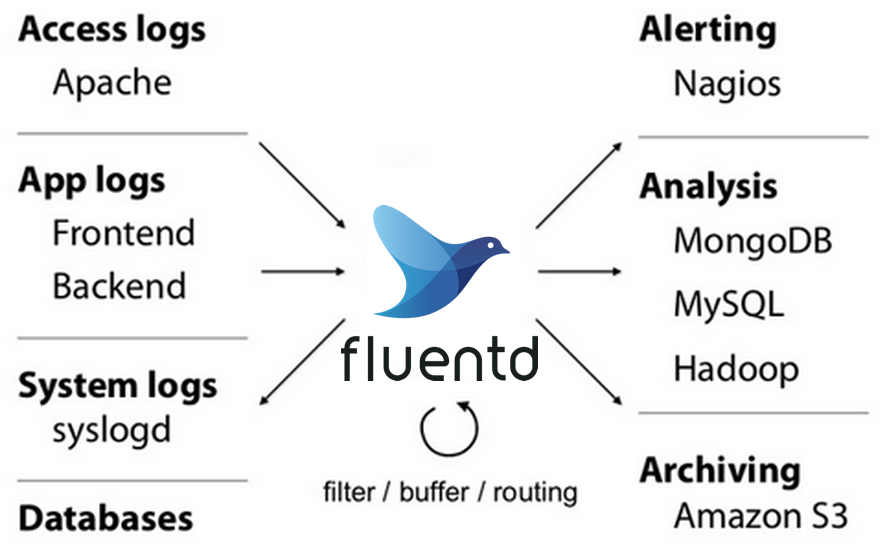

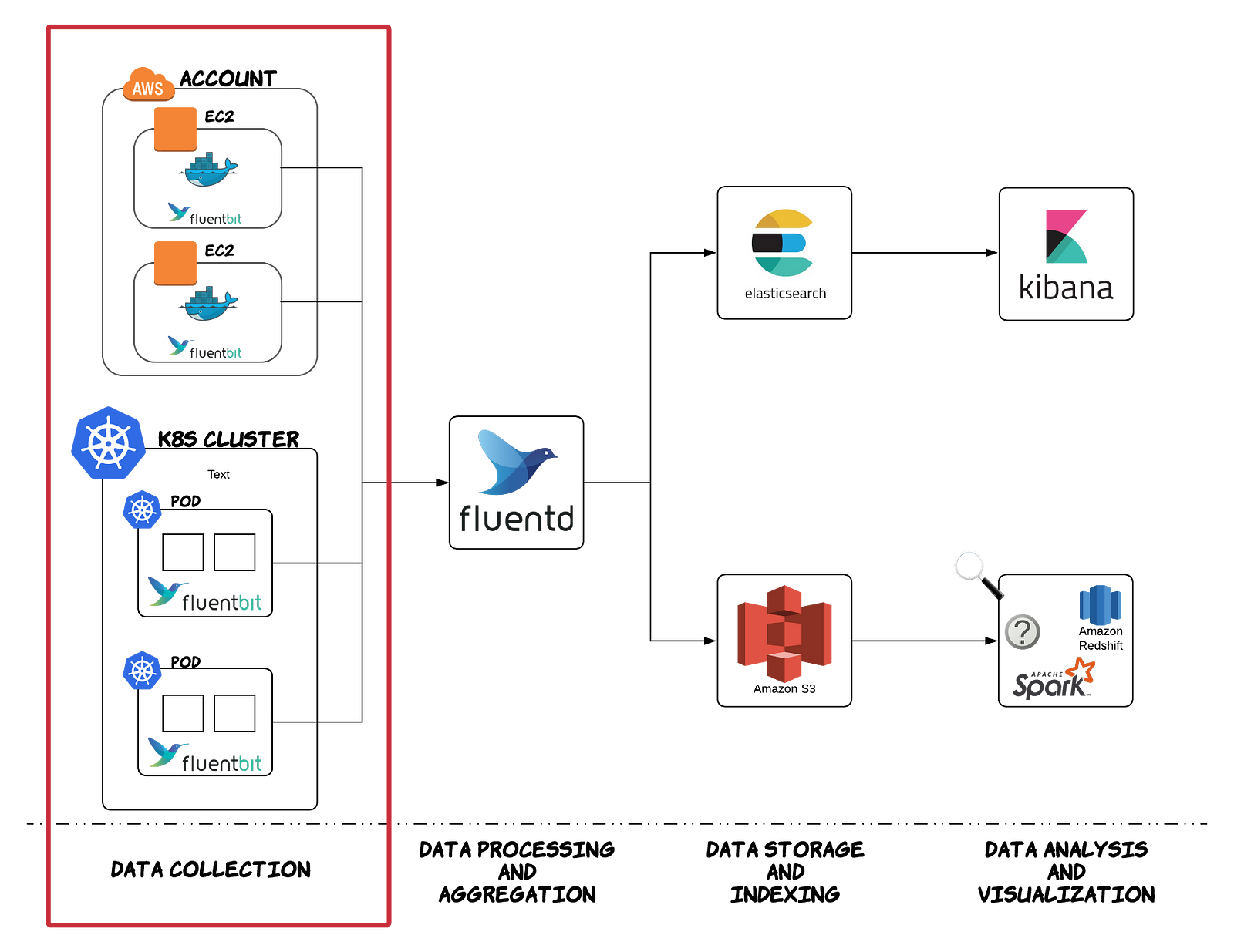

- Fluentd is an open source data collector for unified logging layer.

Fluentd allows you to unify data collection and consumption for a better use and understanding of data.

https://www.fluentd.org/
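Fluentd's unified logging layer attaches a dot-separated tag to every event and routes it to the first `<match>` directive whose pattern fits, where `*` matches exactly one tag part and `**` matches zero or more parts. The sketch below reimplements only those two whole-part wildcards in Python to illustrate the routing idea; it is not Fluentd's code, and the route table and output names are hypothetical.

```python
def tag_matches(pattern: str, tag: str) -> bool:
    """Match a dot-separated tag against a pattern using whole-part * and ** wildcards."""
    return _match(pattern.split("."), tag.split("."))


def _match(pat: list, parts: list) -> bool:
    if not pat:
        return not parts  # pattern exhausted: succeed only if the tag is too
    head, rest = pat[0], pat[1:]
    if head == "**":
        # ** consumes zero or more tag parts
        return any(_match(rest, parts[i:]) for i in range(len(parts) + 1))
    if not parts:
        return False
    if head == "*" or head == parts[0]:
        return _match(rest, parts[1:])  # * consumes exactly one part
    return False


def route(tag: str, routes: list):
    """Return the output of the first (pattern, output) pair whose pattern matches."""
    for pattern, output in routes:
        if tag_matches(pattern, tag):
            return output
    return None


# Hypothetical routes, tried in order like <match> directives
ROUTES = [("app.auth.*", "security_index"), ("app.**", "app_index"), ("**", "archive")]
print(route("app.auth.login", ROUTES))  # security_index
print(route("app.web", ROUTES))         # app_index
print(route("system.kernel", ROUTES))   # archive
```

Because routes are tried in order, the specific security pattern must come before the broader `app.**` catch-all.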

Building an Open Data Platform: Logging with Fluentd and Elasticsearch
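When a log shipper such as Fluentd writes to Elasticsearch, documents are typically indexed through the `_bulk` endpoint, whose request body is newline-delimited JSON: an action line, then the document, repeated, with a trailing newline. The sketch below only builds that body; the index name and record fields are hypothetical, and sending it (a POST to `/_bulk` with `Content-Type: application/x-ndjson`) is left out.

```python
import json


def to_bulk_body(records, index: str) -> str:
    """Serialize records as an Elasticsearch _bulk request body (NDJSON).

    Each document is preceded by an action line; the body must end with a newline.
    """
    lines = []
    for record in records:
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(record))                        # document source
    if not lines:
        return ""  # nothing to send
    return "\n".join(lines) + "\n"


body = to_bulk_body([{"host": "web1", "msg": "login failed"}], "logs-example")
print(body, end="")
```

Batching many records into one request is what makes this endpoint suitable for high-volume log ingestion.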

Cloud Data Logging with Raspberry Pi