Paravirtual RDMA (PVRDMA) is a new PCIe virtual NIC

Multiple virtual devices can share an HCA without SR-IOV

Supports

https://www.openfabrics.org/images/eventpresos/2016presentations/102parardma.pdf

- Para-virtualization has been commonly used in virtualized environments to improve system efficiency and to optimize management workloads. In the era of Performance Cloud Computing and big data use cases, cloud providers and data centers focus on developing paravirtualization solutions that provide fast and efficient I/O. Thanks to its high bandwidth, low latency and kernel bypass, Remote Direct Memory Access (RDMA) interconnects are now widely adopted in HPC and cloud centers as an I/O performance booster. To benefit from the advantages that RDMA offers, network communication supporting InfiniBand and RDMA over Converged Ethernet (RoCE) must be made ready for the underlying virtualized devices.


eBPF - extended Berkeley Packet Filter

The Berkeley Packet Filter (BPF), described in a 1992 article, started as a special-purpose virtual machine (a register-based filter evaluator) for filtering network packets, best known for its use in tcpdump.

Areas using eBPF:

XDP - eXpress Data Path

Traffic control

Sockets

Tracing

https://prototype-kernel.readthedocs.io/en/latest/bpf/
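To make the "register-based filter evaluator" idea concrete, here is a minimal Python model of a classic BPF interpreter. It handles only four instructions (`ldh`, `ldb`, `jeq`, `ret`), which is enough to run a program modeled on the listing `tcpdump -d ip` prints for the filter expression `ip` on an Ethernet capture. The accept value 262144 is assumed to be the default snapshot length of recent tcpdump releases. `run_filter` and `IP_FILTER` are hypothetical names; the kernel's real interpreter is written in C and supports many more opcodes.

```python
import struct

# Mnemonics follow tcpdump -d output; only a tiny subset is modeled.
LDH, LDB, JEQ, RET = "ldh", "ldb", "jeq", "ret"


def run_filter(program, packet: bytes) -> int:
    """Evaluate a classic-BPF-style program against raw packet bytes.

    Each instruction is a tuple (op, k, jt, jf). The return value is the
    operand of the first 'ret' reached: 0 rejects the packet, anything
    else accepts it (and says how many bytes to capture).
    """
    acc = 0  # accumulator register "A"
    pc = 0   # program counter
    while pc < len(program):
        op, k, jt, jf = program[pc]
        if op == LDH:  # A <- 16-bit big-endian value at absolute offset k
            if k + 2 > len(packet):
                return 0  # out-of-bounds load: reject the packet
            (acc,) = struct.unpack_from("!H", packet, k)
        elif op == LDB:  # A <- single byte at absolute offset k
            if k >= len(packet):
                return 0
            acc = packet[k]
        elif op == JEQ:  # jt/jf are offsets relative to the next instruction
            pc += jt if acc == k else jf
        elif op == RET:
            return k
        else:
            raise ValueError(f"unsupported opcode {op!r}")
        pc += 1
    return 0  # falling off the end is treated as reject in this sketch


# Model of the program for the filter expression "ip" on Ethernet.
IP_FILTER = [
    (LDH, 12, 0, 0),       # (000) ldh [12]       load EtherType
    (JEQ, 0x0800, 0, 1),   # (001) jeq #0x800     jt 2 jf 3
    (RET, 262144, 0, 0),   # (002) ret #262144    accept, snap length
    (RET, 0, 0, 0),        # (003) ret #0         reject
]
```

A frame whose bytes 12-13 are `08 00` (IPv4) returns 262144, while an ARP frame (`08 06`) or a truncated frame returns 0.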

- XDP or eXpress Data Path provides a high-performance, programmable network data path in the Linux kernel. XDP provides bare-metal packet processing at the lowest point in the software stack. Much of the huge speed gain comes from processing RX packet-pages directly out of the driver's RX ring queue, before any allocation of metadata structures like SKBs occurs.

https://prototype-kernel.readthedocs.io/en/latest/networking/XDP/introduction.html#what-is-xdp
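The central point of the quote above, that an XDP program sees each frame straight off the driver's RX ring before any SKB exists, can be modeled in a few lines of Python. This is a simulation only: real XDP programs are written in restricted C, compiled to eBPF and attached to a driver. The verdict names and values mirror the kernel's `enum xdp_action`; `xdp_drop_non_ipv4`, `drain_rx_ring` and the dict standing in for an SKB are hypothetical.

```python
from collections import deque
from enum import IntEnum


class XdpAction(IntEnum):
    """Verdicts with the same names and values as the kernel's enum xdp_action."""
    XDP_ABORTED = 0
    XDP_DROP = 1
    XDP_PASS = 2
    XDP_TX = 3
    XDP_REDIRECT = 4


def xdp_drop_non_ipv4(frame: bytes) -> XdpAction:
    """Hypothetical XDP program: keep IPv4, drop everything else."""
    if len(frame) < 14:  # shorter than an Ethernet header: treat as malformed
        return XdpAction.XDP_ABORTED
    ethertype = int.from_bytes(frame[12:14], "big")
    return XdpAction.XDP_PASS if ethertype == 0x0800 else XdpAction.XDP_DROP


def drain_rx_ring(rx_ring: deque, prog):
    """Model of a driver's RX poll with an XDP hook in front of SKB allocation."""
    skbs, tx_queue = [], []
    stats = {action: 0 for action in XdpAction}
    while rx_ring:
        frame = rx_ring.popleft()  # raw packet buffer straight off the ring
        action = prog(frame)       # the program runs before any metadata exists
        stats[action] += 1
        if action == XdpAction.XDP_PASS:
            # Only now is per-packet metadata built (a dict stands in for an SKB).
            skbs.append({"len": len(frame), "data": frame})
        elif action == XdpAction.XDP_TX:
            tx_queue.append(frame)  # transmit back out of the same interface
        # XDP_DROP and XDP_ABORTED recycle the buffer; XDP_REDIRECT is not modeled.
    return skbs, tx_queue, stats
```

Dropped frames never cost an allocation in this model, which is the property the quote credits for much of XDP's speed.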

- XDP or eXpress Data Path provides a high-performance, programmable network data path in the Linux kernel as part of the IO Visor Project. Use cases for XDP include the following:

Pre-stack processing like filtering to support DDoS mitigation

Forwarding and load balancing

Batching techniques such as in Generic Receive Offload

Flow sampling, monitoring

ULP processing (i.e. message delineation)
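Two of the listed use cases, pre-stack filtering for DDoS mitigation and load balancing, reduce to a few header reads per packet. The Python sketch below shows that per-packet logic under stated assumptions; it is not eBPF. In a real XDP program this would be C code with explicit bounds checks for the verifier, the blocklist would typically live in a BPF map, and the verdicts would be XDP actions rather than strings. The function names, the CRC32 flow hash and the backend list are illustrative choices, and only untagged Ethernet + IPv4 frames are parsed.

```python
import ipaddress
import struct
import zlib

ETH_HLEN = 14        # Ethernet header length without VLAN tags
ETH_P_IP = 0x0800    # EtherType for IPv4
IPPROTO_TCP, IPPROTO_UDP = 6, 17


def parse_ipv4_flow(frame: bytes):
    """Return (src, dst, proto, sport, dport) for an untagged IPv4 frame, else None.

    Ports are 0 for protocols other than TCP/UDP.
    """
    if len(frame) < ETH_HLEN + 20:
        return None
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    ver_ihl = frame[ETH_HLEN]
    if ethertype != ETH_P_IP or ver_ihl >> 4 != 4:
        return None
    ihl = (ver_ihl & 0x0F) * 4  # IPv4 header length in bytes
    if ihl < 20 or len(frame) < ETH_HLEN + ihl:
        return None
    proto = frame[ETH_HLEN + 9]
    src = ipaddress.IPv4Address(frame[ETH_HLEN + 12:ETH_HLEN + 16])
    dst = ipaddress.IPv4Address(frame[ETH_HLEN + 16:ETH_HLEN + 20])
    sport = dport = 0
    l4 = ETH_HLEN + ihl
    if proto in (IPPROTO_TCP, IPPROTO_UDP) and len(frame) >= l4 + 4:
        sport, dport = struct.unpack_from("!HH", frame, l4)
    return src, dst, proto, sport, dport


def filter_and_balance(frame: bytes, blocklist, backends):
    """Early drop for blocked sources, then pick a backend by hashing the 5-tuple."""
    flow = parse_ipv4_flow(frame)
    if flow is None:
        return "pass", None  # not handled here: leave it to the normal stack
    src, dst, proto, sport, dport = flow
    if src in blocklist:
        return "drop", None  # DDoS mitigation: discard before any further work
    key = struct.pack("!4s4sBHH", src.packed, dst.packed, proto, sport, dport)
    return "forward", backends[zlib.crc32(key) % len(backends)]


def make_udp_frame(src: str, dst: str, sport: int, dport: int) -> bytes:
    """Build a minimal Ethernet + IPv4 + UDP frame (checksums left at 0)."""
    eth = bytes(12) + struct.pack("!H", ETH_P_IP)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 28, 0, 0, 64, IPPROTO_UDP, 0,
                     ipaddress.IPv4Address(src).packed,
                     ipaddress.IPv4Address(dst).packed)
    udp = struct.pack("!HHHH", sport, dport, 8, 0)
    return eth + ip + udp
```

Hashing the full 5-tuple keeps every packet of one flow on the same backend, which is the usual reason to prefer it over per-packet round-robin.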

XDP and DPDK

XDP is sometimes juxtaposed with DPDK, although both are perfectly fine approaches. XDP offers another option for users who want performance while still leveraging the programmability of the kernel. Some of the functions that XDP delivers include the following:

Removes the need for 3rd party code and licensing

Allows option of busy polling or interrupt driven networking

Removes the need to allocate large pages

Removes the need for dedicated CPUs as users have more options on structuring work between CPUs

Removes the need to inject packets into the kernel from a 3rd party userspace application

Removes the need to define a new security model for accessing networking hardware

https://www.iovisor.org/technology/xdp

It supports many processor architectures and bothFreeBSD and Linux.

https://github.com/spdk/dpdk

It runs mostly in Linuxuserland . A FreeBSD port is available for a subset of DPDK features.

DPDK is not a networking stack and does not provide functions such as Layer-3 forwarding,IPsec , firewalling , etc . Within the tree, however, various application examples are included to help developing such features.

http://www.dpdk.org/

https://doc.dpdk.org/guides/nics/intel_vf.html

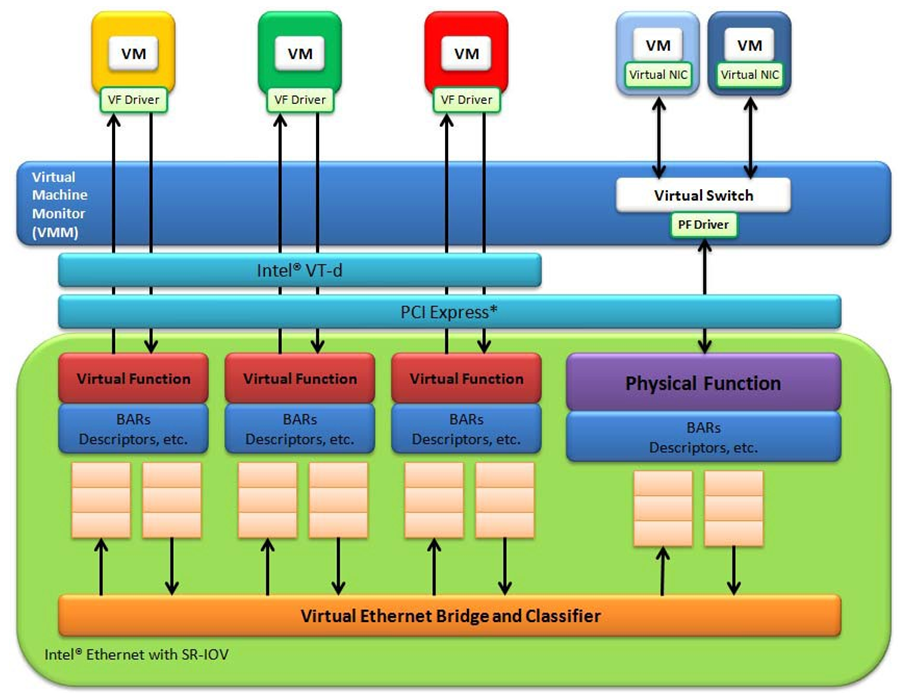

The SR-IOV capable device provides a configurable number of independent Virtual Functions, each with its own PCI Configuration space. The Hypervisor assigns one or more Virtual Functions to a virtual machine by mapping the actual configuration space the VFs to the configuration space presented to the virtual machine by the VMM

https://www.slideshare.net/lnxfei/sr-iovlkong

https://blog.sflow.com/2009/10/sr-iov.html

Intel Scalable I/O Virtualization

VF directly assignable to

Traditional Virtual Machine (VM)

Bare metal container/process

VM container

https://www.lfasiallc.com/wp-content/uploads/2017/11/Intel%C2%AE-Scalable-I_O-Virtualization_Kevin-Tian.pdf

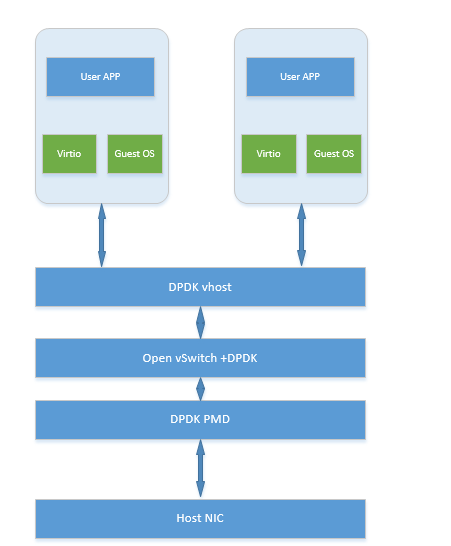

- DPDK is a set of libraries and optimized NIC drivers for fast packet processing in

userspace . DPDK provides a framework and common API for high-speed networking applications. Data Plane Development Kit (DPDK)

- DPDK is a set of libraries and

drivers for fast packet processing.

It supports many processor architectures and both

https://github.com/spdk/dpdk

- DPDK is a set of libraries and

drivers for fast packet processing. Itis designed to run on any processors. The first supported CPU was Intel x86and it is now extended to IBM POWER and ARM.

It runs mostly in Linux

DPDK is not a networking stack and does not provide functions such as Layer-3 forwarding,

http://www.dpdk.org/
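The "optimized NIC drivers" in DPDK are poll-mode drivers: the application repeatedly asks a receive queue for a burst of packets (in C, via `rte_eth_rx_burst`) instead of waiting for interrupts. The Python model below illustrates that pattern with a power-of-two descriptor ring. `RxRing`, `rx_burst` and `poll_loop` are hypothetical stand-ins, not DPDK's API, and the default burst size of 32 is only an illustrative choice.

```python
class RxRing:
    """Fixed-size receive ring with free-running head/tail counters.

    The size must be a power of two so a slot index is counter & mask.
    """

    def __init__(self, size: int):
        if size <= 0 or size & (size - 1):
            raise ValueError("ring size must be a power of two")
        self.slots = [None] * size
        self.mask = size - 1
        self.head = 0  # total packets written by the (simulated) NIC
        self.tail = 0  # total packets read by the application

    def produce(self, pkt) -> bool:
        """NIC side: place a packet in the ring; False if the ring is full."""
        if self.head - self.tail == len(self.slots):
            return False  # a real NIC would typically drop it and count a miss
        self.slots[self.head & self.mask] = pkt
        self.head += 1
        return True

    def rx_burst(self, max_pkts: int) -> list:
        """Application side: take up to max_pkts packets in one call."""
        n = min(max_pkts, self.head - self.tail)
        burst = [self.slots[(self.tail + i) & self.mask] for i in range(n)]
        self.tail += n
        return burst


def poll_loop(ring: RxRing, handle, burst_size: int = 32, max_polls: int = 1000) -> int:
    """Busy-poll the ring in bursts; returns the number of packets handled.

    A real poll-mode loop typically spins forever on a dedicated core; this
    sketch stops at the first empty poll (or after max_polls) so it terminates.
    """
    handled = 0
    for _ in range(max_polls):
        burst = ring.rx_burst(burst_size)
        if not burst:
            break
        for pkt in burst:
            handle(pkt)
        handled += len(burst)
    return handled
```

Masking free-running counters with `size - 1` avoids a modulo per packet and keeps "full" (`head - tail == size`) distinct from "empty" (`head == tail`).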

- The DPDK uses the SR-IOV feature for hardware-based I/O sharing in IOV mode. Therefore, it is possible to partition SR-IOV capability on Ethernet controller NIC resources logically and expose them to a virtual machine as a separate PCI function called a “Virtual Function”.

https://doc.dpdk.org/guides/nics/intel_vf.html

- SR-IOV Introduce

The SR-IOV capable device provides a configurable number of independent Virtual Functions, each with its own PCI Configuration space. The Hypervisor assigns one or more Virtual Functions to a virtual machine by mapping the actual configuration space of the VFs to the configuration space presented to the virtual machine by the VMM.

https://www.slideshare.net/lnxfei/sr-iovlkong
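Each VF's own configuration space sits at a PCI address derived by a fixed rule from two fields of the SR-IOV capability: VF n's routing ID (bus/device/function) is the PF's routing ID plus First VF Offset plus (n - 1) times VF Stride. The Python sketch below computes VF addresses from those fields and then hands them to VMs, standing in for the hypervisor step the quote describes. The offset 0x80 and stride 2 in the usage note are assumed example values (real ones are read from each device's capability), the PCI segment/domain is ignored, and `assign_vfs` is a hypothetical policy, not a real hypervisor API.

```python
def bdf_to_rid(bdf: str) -> int:
    """'03:00.0' -> 16-bit routing ID: bus << 8 | device << 3 | function."""
    bus, devfn = bdf.split(":")
    dev, fn = devfn.split(".")
    return (int(bus, 16) << 8) | (int(dev, 16) << 3) | int(fn, 16)


def rid_to_bdf(rid: int) -> str:
    """16-bit routing ID -> 'bus:device.function' in lowercase hex."""
    return f"{rid >> 8:02x}:{(rid >> 3) & 0x1F:02x}.{rid & 0x7}"


def vf_bdfs(pf_bdf: str, num_vfs: int, first_vf_offset: int, vf_stride: int):
    """Addresses of VF1..VFn: RID(VFn) = RID(PF) + First VF Offset + (n - 1) * VF Stride."""
    pf_rid = bdf_to_rid(pf_bdf)
    rids = [pf_rid + first_vf_offset + (n - 1) * vf_stride for n in range(1, num_vfs + 1)]
    if rids and rids[-1] > 0xFFFF:
        raise ValueError("VF routing IDs exceed the 16-bit bus/device/function space")
    return [rid_to_bdf(r) for r in rids]


def assign_vfs(vfs, vms):
    """Hypothetical one-VF-per-VM policy: returns {vm_name: vf_bdf}."""
    if len(vms) > len(vfs):
        raise ValueError("not enough VFs for all VMs")
    return dict(zip(vms, vfs))
```

With a PF at 03:00.0, `vf_bdfs("03:00.0", 4, 0x80, 2)` yields 03:10.0, 03:10.2, 03:10.4 and 03:10.6; `assign_vfs` then maps the first of them to the VMs in order.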

- The Single Root I/O Virtualization (SR-IOV) standard being implemented by 10G network adapter vendors provides hardware support for virtualization,

https://blog.sflow.com/2009/10/sr-iov.html

- Today SR-IOV is the standard framework for PCI Express devices.

Intel Scalable I/O Virtualization

VF directly assignable to

Traditional Virtual Machine (VM)

Bare metal container/process

VM container

https://www.lfasiallc.com/wp-content/uploads/2017/11/Intel%C2%AE-Scalable-I_O-Virtualization_Kevin-Tian.pdf
