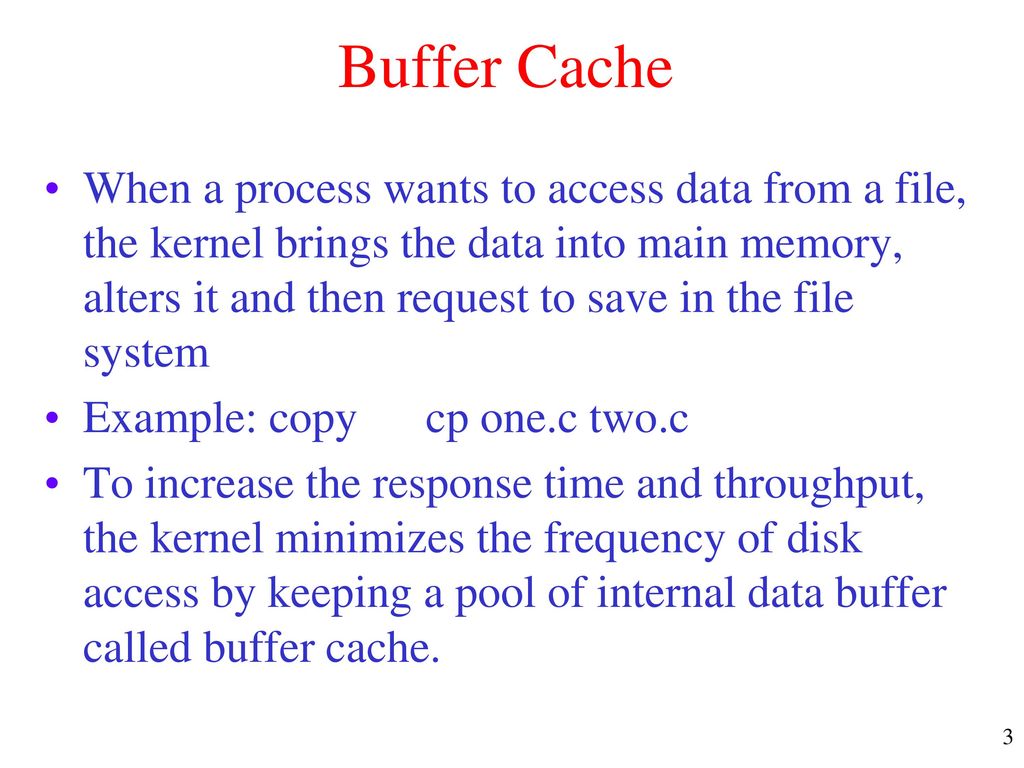

- Buffer

container to hold data for a short period of time

normal-speed storage

mostly used for I/O operations

part of RAM only

made from dynamic RAM

policy is first in, first out (FIFO)

Cache

storage for speeding up certain operations

high-speed storage area

used during read/write operations

can also be part of a disk

made from static RAM

policy is least recently used.
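The two replacement policies above can be contrasted in a short sketch. It is a minimal illustration, assuming plain in-process Python objects stand in for real buffer and cache memory; the class names `FifoBuffer` and `LruCache` are hypothetical.

```python
from collections import OrderedDict, deque


class FifoBuffer:
    """Bounded buffer: items leave in the same order they arrived (FIFO)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: deque = deque()

    def put(self, item) -> None:
        if len(self._items) >= self.capacity:
            raise OverflowError("buffer full; the consumer must drain it first")
        self._items.append(item)

    def get(self):
        # Oldest item first, regardless of how often anything was accessed.
        # Raises IndexError if the buffer is empty.
        return self._items.popleft()


class LruCache:
    """Bounded cache: when full, evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._data: OrderedDict = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None  # cache miss
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry
```

With `LruCache(2)`, putting `a` and `b`, reading `a`, then putting `c` evicts `b`, because `a` was touched more recently. A `FifoBuffer` never reorders: whatever went in first comes out first.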

- Reading from a disk is very slow compared to accessing (real) memory.

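Since memory is a scarce resource, the cache usually cannot be big enough to hold all the data one wants to use.

When the cache fills up, the data that has been unused for the longest time is discarded, and the memory thus freed is used for the new data.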

http://www.tldp.org/LDP/sag/html/buffer-cache.html

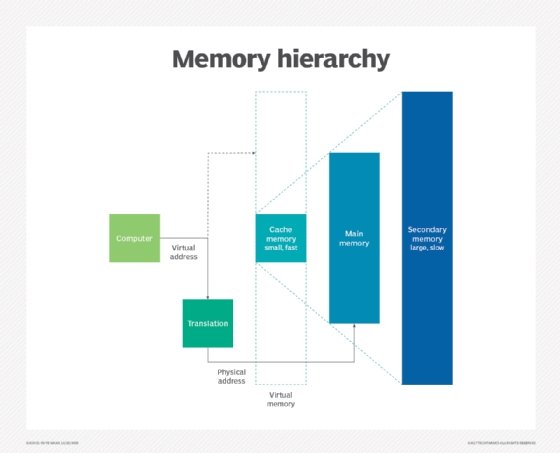

- (Computer) memory: every computer system has two kinds of memory.

Primary memory/storage (also known as internal memory) is the only one directly accessible to the CPU. The CPU continuously reads instructions stored there and executes them as required.

Secondary memory/storage (also known as external memory) is not directly accessible by the CPU. The computer usually uses its I/O channels to access secondary storage and transfers the desired data through a data buffer (an intermediate area) in primary storage. Secondary storage does not lose its data when the device is powered down; it is non-volatile.

https://www.quora.com/What-is-the-difference-between-computer-RAM-and-memory

- Buffer vs. cache

There are fundamental differences in intent between the process of caching and the process of buffering.

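With read caches, a data item must have been fetched from its residing location at least once for subsequent reads of that item to gain performance, by virtue of being fetched from the cache's faster intermediate storage rather than from its residing location.

With write caches, a performance increase for writing a data item may be realized upon its first write, by virtue of the item being stored immediately in the cache's intermediate storage, while the transfer to its residing storage is deferred to a later stage or done as a background process.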

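With typical caching implementations, a data item that is read or written for the first time is effectively being buffered; in the case of a write, this mostly yields a performance increase for the application the write originated from. Additionally, the portion of a caching protocol where individual writes are deferred to a batch of writes is a form of buffering.

The portion of a caching protocol where individual reads are deferred to a batch of reads is also a form of buffering, although it may hurt the performance of at least the initial reads.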

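A buffer is a temporary memory location that is traditionally used because CPU instructions cannot directly address data stored in peripheral devices. Thus, addressable memory is used as an intermediate stage.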

Also, a whole buffer of data is usually transferred sequentially (for example, to a hard disk), so buffering itself sometimes increases transfer performance or reduces the variation or jitter of the transfer's latency, as opposed to caching, where the intent is to reduce the latency.

A cache also increases transfer performance.

https://en.wikipedia.org/wiki/Cache_(computing)
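The buffering side of this comparison, collecting many small writes and handing them on as one larger transfer, can be sketched as follows. It assumes a hypothetical `sink` callable that performs one expensive transfer per call; Python's own `io.BufferedWriter` applies the same idea to files.

```python
class BatchingWriter:
    """Collects small writes in memory and hands them to the sink in large chunks."""

    def __init__(self, sink, buffer_size: int = 4096) -> None:
        self.sink = sink                # callable that performs one (expensive) transfer
        self.buffer_size = buffer_size
        self._buf = bytearray()
        self.transfers = 0              # how many times the sink was actually called

    def write(self, data: bytes) -> None:
        self._buf += data
        if len(self._buf) >= self.buffer_size:
            self.flush()

    def flush(self) -> None:
        # Hand everything buffered so far to the sink as a single transfer.
        if self._buf:
            self.sink(bytes(self._buf))
            self.transfers += 1
            self._buf.clear()
```

One hundred 64-byte writes through a 4096-byte buffer reach the sink as two transfers (4096 bytes, then the remaining 2304 on the final `flush()`), not one hundred. Nothing is read back, so no caching is involved: this is pure buffering.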

- Buffer

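1. Buffer is a container to hold data for a short period of time

2. Buffer is normal-speed storage

3. Buffer is mostly used for I/O operations

4. Buffer is part of RAM only

5. Buffer is made from dynamic RAM

6. Buffer's policy is first in, first out (FIFO)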

Cache

1. A cache is storage for speeding up certain operations

2. Cache is high-speed storage

3. Cache is used during read/write operations

4. Cache can also be part of a disk

5. Cache is made from static RAM

6. Cache's policy is Least Recently Used

https://www.youtube.com/watch?v=BYDIekbwz-o

- Read-Through Cache

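A read-through cache sits in line with the database. On a cache miss, it loads the missing data from the database, populates the cache, and returns the data to the application.

Both cache-aside and read-through strategies load data lazily, that is, only when it is first read.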

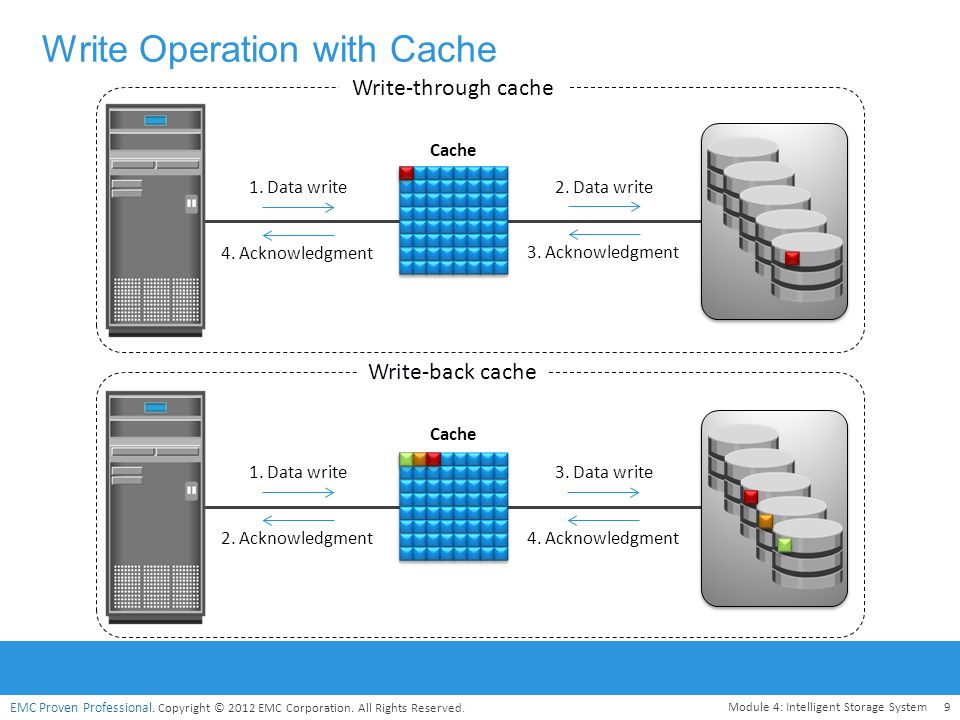
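A minimal read-through sketch, assuming a hypothetical `load_from_db` callable stands in for the database query. The application only ever calls `get()`; the cache itself decides when to go to the database.

```python
from typing import Any, Callable, Dict, Hashable


class ReadThroughCache:
    """The application talks only to the cache; the cache loads from the
    database on a miss, so data is loaded lazily on first read."""

    def __init__(self, load_from_db: Callable[[Hashable], Any]) -> None:
        self._load = load_from_db
        self._store: Dict[Hashable, Any] = {}
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._load(key)  # populate the cache on a miss
        return self._store[key]
```

In cache-aside, the same miss-then-load logic would live in the application code instead of inside the cache; the lazy loading is identical.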

- Write-Through Cache

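In a write-through cache, data is first written to the cache and then to the database. The cache sits in line with the database, and writes always go through the cache to the main database.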

https://codeahoy.com/2017/08/11/caching-strategies-and-how-to-choose-the-right-one/
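A write-through sketch, assuming a plain dict stands in for the database. The write only returns after both the cache and the database hold the new value, which keeps them consistent at the cost of write latency.

```python
from typing import Any, Dict, Hashable


class WriteThroughCache:
    def __init__(self, database: Dict[Hashable, Any]) -> None:
        self.database = database            # stands in for the backing store
        self._cache: Dict[Hashable, Any] = {}

    def write(self, key: Hashable, value: Any) -> None:
        self._cache[key] = value            # 1) update the cache
        self.database[key] = value          # 2) synchronously write to the database

    def read(self, key: Hashable) -> Any:
        if key in self._cache:
            return self._cache[key]
        value = self.database[key]          # miss: fall back to the database
        self._cache[key] = value
        return value
```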

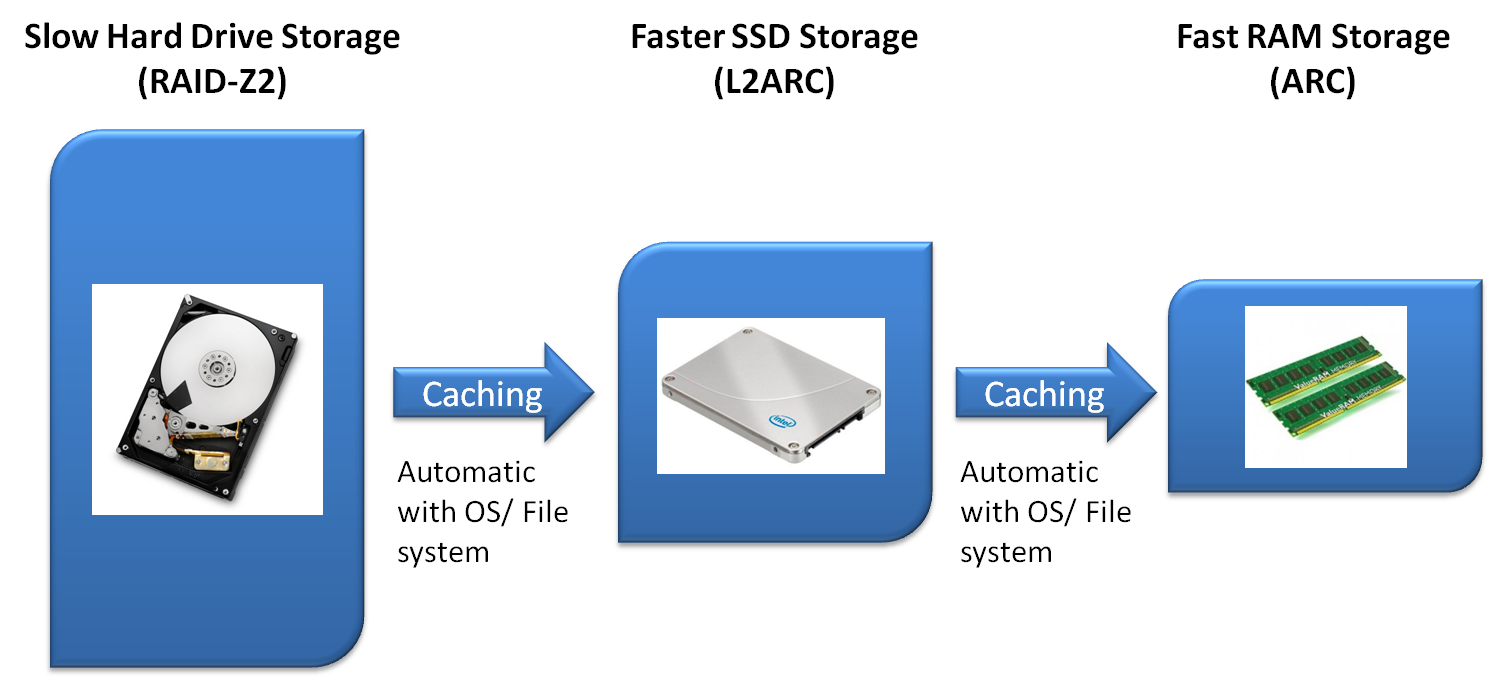

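dm-cache is a component (more specifically, a target) of the Linux kernel's device mapper. It allows one or more fast storage devices, such as SSDs, to act as a cache for one or more slower storage devices, such as HDDs; this effectively creates hybrid volumes.

The design of dm-cache requires three physical storage devices for the creation of a single hybrid volume; dm-cache uses those storage devices to separately store the actual data, the cache data, and the required metadata.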

https://en.wikipedia.org/wiki/Dm-cache
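The three-device layout can be pictured with a toy model. This is only an illustration, assuming lists and dicts stand in for the origin device, the cache device, and the metadata device; `HybridVolume` is a hypothetical name, and dm-cache's real promotion and eviction policies are not modeled (when the cache is full, this sketch simply stops promoting).

```python
from typing import Dict, List, Optional, Set


class HybridVolume:
    """Toy dm-cache-style volume: origin data, cache data, and metadata kept separately."""

    def __init__(self, origin: List[bytes], cache_slots: int) -> None:
        self.origin = origin                                       # slow device: the actual data
        self.cache: List[Optional[bytes]] = [None] * cache_slots   # fast device: cached copies
        self.mapping: Dict[int, int] = {}                          # metadata: origin block -> cache slot
        self.dirty: Set[int] = set()                               # metadata: blocks newer in cache than on origin
        self.origin_reads = 0

    def _slot_for(self, block: int) -> Optional[int]:
        if block in self.mapping:
            return self.mapping[block]
        if len(self.mapping) < len(self.cache):    # a free slot exists (slots are never freed here)
            slot = len(self.mapping)
            self.mapping[block] = slot
            return slot
        return None                                # cache full: no eviction in this toy

    def read(self, block: int) -> bytes:
        if block in self.mapping:                  # hit: served from the fast device
            return self.cache[self.mapping[block]]
        self.origin_reads += 1
        data = self.origin[block]
        slot = self._slot_for(block)
        if slot is not None:
            self.cache[slot] = data                # promote the block to the fast device
        return data

    def write(self, block: int, data: bytes) -> None:
        slot = self._slot_for(block)
        if slot is None:
            self.origin[block] = data              # no room: write straight to the origin
            return
        self.cache[slot] = data                    # write-back style: origin updated later
        self.dirty.add(block)

    def flush(self) -> None:
        for block in self.dirty:
            self.origin[block] = self.cache[self.mapping[block]]
        self.dirty.clear()
```

The point of the separation is visible in `flush()`: without the metadata (mapping plus dirty set), there would be no way to tell which cached blocks still have to be copied back to the slow device.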

- The latest lvm2 tools have support for dm-cache; see lvmcache.

What’s dm-cache?

dm-cache is a device-mapper-level solution for caching blocks of data from mechanical hard drives on solid-state drives (SSDs). The goal is to significantly speed up access to the data stored on the hard drives.

There are three ways you can do this:

1. Create two traditional partitions

2. Use device mapper’s dm-linear feature to split up a single partition

3. Use LVM as a front-end to device mapper

https://blog.kylemanna.com/linux/ssd-caching-using-dmcache-tutorial/
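For the dm-linear option, the split is expressed as device-mapper table lines of the form `<start> <length> linear <device> <offset>`, counted in 512-byte sectors. The sketch below only generates those strings; the partition `/dev/sdb1`, the region names, and the 16 MiB metadata size are hypothetical choices, and nothing is sent to the kernel.

```python
from typing import Dict

SECTOR_SIZE = 512  # device-mapper tables count in 512-byte sectors


def mib_to_sectors(mib: int) -> int:
    return mib * 1024 * 1024 // SECTOR_SIZE


def linear_table(device: str, offset: int, length: int) -> str:
    """One dm-linear table line: map `length` sectors starting at `offset` on `device`."""
    return f"0 {length} linear {device} {offset}"


def split_partition(device: str, total_sectors: int, metadata_sectors: int) -> Dict[str, str]:
    """Split one partition into a small metadata region followed by a cache-block region."""
    if not 0 < metadata_sectors < total_sectors:
        raise ValueError("metadata region must be non-empty and smaller than the partition")
    return {
        "cache-metadata": linear_table(device, 0, metadata_sectors),
        "cache-blocks": linear_table(device, metadata_sectors, total_sectors - metadata_sectors),
    }
```

For a 1 GiB partition, `split_partition("/dev/sdb1", mib_to_sectors(1024), mib_to_sectors(16))` yields `0 32768 linear /dev/sdb1 0` for the metadata region and `0 2064384 linear /dev/sdb1 32768` for the cache blocks. Each line could then be handed to `dmsetup create <name> --table '...'`.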

- It is possible to achieve the same solution in Red Hat Enterprise Linux by configuring an SSD to act as a cache device for a larger HDD. This has the added benefit of allowing you to choose your storage vendor without relying on their cache implementation. As SSD prices drop and capacities increase, the cache devices can be replaced.

A supported solution in Red Hat Enterprise Linux is to use a dm-cache device. Since it is part of device mapper, you don’t need to perform …

https://www.redhat.com/en/blog/improving-read-performance-dm-cache

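- Modern operating systems do not normally write files immediately to RAID systems or hard disks; memory that is not currently in use is used to cache writes and reads. So that these caches do not distort I/O performance measurements, dd's oflag parameter can be used with the following flags:

direct (use direct I/O for data)

dsync (use synchronized I/O for data)

sync (likewise, but also for metadata)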

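For measuring write performance, the data to be written should be read from /dev/zero and ideally written to an empty RAID array, hard disk, or partition.

If this is not possible, a normal file in the file system can be written instead.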

https://www.thomas-krenn.com/en/wiki/Linux_I/O_Performance_Tests_using_dd
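A rough Python analogue of such a write test: write zero-filled blocks, then `os.fsync()` so the timing includes getting the data out of the page cache. This is closer in spirit to `dd conv=fdatasync` than to `oflag=direct`, since individual writes still go through the page cache. The test-file path is hypothetical, and on a RAM-backed file system such as tmpfs the number says nothing about disk speed.

```python
import os
import tempfile
import time


def write_throughput(path: str, total_mib: int = 64, block_kib: int = 1024) -> float:
    """Write zeros to `path`, force them to stable storage, and return MiB/s."""
    if (total_mib * 1024) % block_kib:
        raise ValueError("block size must divide the total size evenly")
    block = bytes(block_kib * 1024)            # zero-filled block, like reading /dev/zero
    blocks = total_mib * 1024 // block_kib
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()                              # push Python's userspace buffer to the kernel
        os.fsync(f.fileno())                   # wait until the kernel has written the data out
    elapsed = time.perf_counter() - start
    return total_mib / elapsed
```

Example: `with tempfile.TemporaryDirectory() as d: print(write_throughput(os.path.join(d, "testfile")))`. Without the `fsync` call, the result would mostly measure how fast data can be copied into RAM.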

- /dev/zero is a special file in Unix-like operating systems that provides as many null characters (ASCII NUL, 0x00) as are read from it.

A hybrid HDD/SSD caching setup has

PC users commonly pair a

https://www.pcworld.com/article/248828/how_to_setup_intel_smart_response_ssd_caching_technology.html

- Linux device mapper writecache

A computer cache is a component (typically leveraging some sort of performant memory) that temporarily stores data for current write and future read I/O requests.

As for read operations, the general idea is to read data from the slower device no more than once and keep it in memory for as long as it is still needed.

Historically, operating systems have been designed to enable local (and volatile) random access memory (RAM) to act as this temporary cache.

Using I/O Caching

Unlike its traditional spinning hard disk drive (HDD) counterpart, SSDs comprise a collection of computer chips (non-volatile NAND memory) with no movable parts.

To keep costs down and still invest in the needed capacities, one logical solution is to buy a large number of HDDs and a small number of SSDs and enable the SSDs to act as a performant cache for the slower HDDs.

Common Methods of Caching

However, you should understand that the biggest pain point for a slower HDD is not sequential read and write workloads; it is random workloads, and more specifically random small I/O workloads.

Write-through caching. This mode writes new data to the target while still maintaining it in cache for future reads.

Write-around caching or a general-purpose read cache. Write-around caching avoids caching new write data and instead focuses on caching read I/O operations for future read requests.
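A write-around sketch, assuming a dict stands in for the slow backing device. New writes bypass the cache entirely; only reads populate it. Dropping any stale cached copy on write is an assumption this sketch needs to stay correct, not something stated in the source.

```python
from typing import Any, Dict, Hashable


class WriteAroundCache:
    def __init__(self, backing: Dict[Hashable, Any]) -> None:
        self.backing = backing
        self._cache: Dict[Hashable, Any] = {}

    def write(self, key: Hashable, value: Any) -> None:
        self.backing[key] = value       # new data goes straight to the slow device
        self._cache.pop(key, None)      # invalidate a stale cached copy, if any

    def read(self, key: Hashable) -> Any:
        if key not in self._cache:
            self._cache[key] = self.backing[key]   # only reads populate the cache
        return self._cache[key]

    def is_cached(self, key: Hashable) -> bool:
        return key in self._cache
```

This keeps write-once data (logs, backups) from pushing frequently read data out of a small cache.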

Many userspace libraries, tools, and kernel drivers exist to enable high-speed caching.

dm-cache

dm-cache is a component of the Linux kernel's device mapper.

bcache

Very similar to dm-cache, bcache is also a Linux kernel driver, although it differs in a few ways. For instance, the user can attach more than one SSD as a cache, and bcache is designed to reduce write amplification by turning random write operations into sequential writes.
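The general technique of turning random writes into sequential ones can be shown with a small log-structured sketch: every write is appended at the tail of a log, and an index remembers where the latest copy of each block lives. This is a generic illustration, not bcache's on-disk format; garbage collection of superseded entries is omitted, and `SequentialWriteLog` is a hypothetical name.

```python
from typing import Dict, List


class SequentialWriteLog:
    """Random block writes are appended to a log; an index maps each block to its latest copy."""

    def __init__(self) -> None:
        self.log: List[bytes] = []          # appended strictly in order: a sequential write stream
        self.index: Dict[int, int] = {}     # logical block number -> position in the log

    def write(self, block: int, data: bytes) -> int:
        position = len(self.log)
        self.log.append(data)               # always written at the tail
        self.index[block] = position        # a newer copy supersedes an older one
        return position

    def read(self, block: int) -> bytes:
        return self.log[self.index[block]]
```

Writes to scattered blocks 907, 12, 5531, and 12 again land at log positions 0, 1, 2, and 3, and a read of block 12 returns the second, newer copy.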

dm-writecache

Fairly new to the Linux caching scene, dm-writecache was officially merged into the 4.18 Linux kernel.

Unlike the other caching solutions mentioned already, the focus of dm-writecache is strictly writeback caching and nothing more: no read caching, no write-through caching. The thought process for not caching reads is that read data should already be in the page cache, which makes complete sense.
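A write-back-only sketch in the spirit described above, assuming dicts stand in for the fast and slow devices. Writes are acknowledged once they reach the fast tier and are copied to the slow tier later; reads are not cached, but a read of a block with a pending write must still see the new data. `WriteBackCache` and `writeback()` are hypothetical names, not dm-writecache's interface.

```python
from typing import Dict


class WriteBackCache:
    """Writes are acknowledged from the fast tier and copied to the slow tier later."""

    def __init__(self, slow_device: Dict[int, bytes]) -> None:
        self.slow = slow_device
        self.fast: Dict[int, bytes] = {}    # pending (dirty) writes held on the fast device

    def write(self, block: int, data: bytes) -> None:
        self.fast[block] = data             # acknowledged without touching the slow device

    def read(self, block: int) -> bytes:
        # Reads are not cached here, but a pending write must still be visible.
        if block in self.fast:
            return self.fast[block]
        return self.slow[block]

    def writeback(self) -> int:
        """Copy all dirty blocks to the slow device; return how many were written."""
        count = len(self.fast)
        self.slow.update(self.fast)
        self.fast.clear()
        return count
```

Until `writeback()` runs, the newest data exists only on the fast tier, which is why such a cache needs a persistent fast device rather than plain RAM.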

Other Caching Tools

Memcached. A cross-platform userspace library with an API for applications, Memcached also relies on RAM to boost the performance of databases and other applications.

ReadyBoost. A Microsoft product, ReadyBoost was introduced in Windows Vista and is included in later versions of Windows. Similar to dm-cache and bcache, ReadyBoost enables flash memory devices (such as USB flash drives and SD cards) to act as a cache for slower HDDs.

http://www.admin-magazine.com/HPC/Articles/Linux-writecache?utm_source=ADMIN+Newsletter&utm_campaign=HPC_Update_130_2019-11-14_LInux_writecache&utm_medium=email

- Building and Installing the rapiddisk kernel modules and utilities

https://github.com/pkoutoupis/rapiddisk/
