Slurm and Moab are two workload manager systems that have

What is a Workload Manager?

The typical LC cluster is a finite resource

In

In order to fairly and efficiently

Commonly called a Workload Manager. May also be referred to as:

Batch system

Batch scheduler

Workload scheduler

Job scheduler

Resource manager (usually considered a component of a Workload Manager)

Tasks commonly performed by a Workload Manager:

Provide a means for users to specify and submit work as "jobs"

Provide a means for users to monitor,

Manage, allocate and provide access to

Manage pending work in job queues

Monitor and troubleshoot jobs and machine resources

Provide accounting and reporting facilities for jobs and machine resources

Efficiently balance work over machine resources; minimize wasted resources
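
The tasks listed above can be illustrated with a small toy model. The sketch below is only an illustration under stated assumptions: the names `Job` and `ToyWorkloadManager` are hypothetical, the scheduling policy is strict first-in, first-out, and it does not reflect how Slurm or Moab are actually implemented. It shows job submission, a pending queue, node allocation, status monitoring, and simple accounting.

```python
from collections import deque


class Job:
    """A unit of work submitted by a user (hypothetical, for illustration only)."""

    def __init__(self, name, nodes):
        self.name = name
        self.nodes = nodes        # number of nodes requested
        self.state = "PENDING"


class ToyWorkloadManager:
    """Toy strict-FIFO scheduler; an assumption-laden sketch, not Slurm or Moab."""

    def __init__(self, total_nodes):
        self.total_nodes = total_nodes
        self.free_nodes = total_nodes
        self.queue = deque()      # pending jobs, oldest first
        self.running = []         # jobs currently holding nodes
        self.accounting = {}      # job name -> nodes allocated

    def submit(self, job):
        # Reject work that could never run on this cluster.
        if job.nodes > self.total_nodes:
            raise ValueError(f"{job.name} requests more nodes than the cluster has")
        self.queue.append(job)

    def schedule(self):
        # Start jobs from the head of the queue while the head job fits.
        while self.queue and self.queue[0].nodes <= self.free_nodes:
            job = self.queue.popleft()
            self.free_nodes -= job.nodes
            job.state = "RUNNING"
            self.running.append(job)
            self.accounting[job.name] = job.nodes

    def finish(self, job):
        # Release the job's nodes and try to start pending work.
        self.running.remove(job)
        self.free_nodes += job.nodes
        job.state = "COMPLETED"
        self.schedule()

    def status(self):
        # Report the state of every running and pending job.
        return {job.name: job.state for job in self.running + list(self.queue)}


if __name__ == "__main__":
    wm = ToyWorkloadManager(total_nodes=4)
    a, b, c = Job("a", 2), Job("b", 3), Job("c", 1)
    for job in (a, b, c):
        wm.submit(job)
    wm.schedule()
    print(wm.status())
    wm.finish(a)
    print(wm.status())
```

With a 4-node cluster, job `a` starts immediately, while `b` and `c` wait: `c` would fit on the free nodes, but strict FIFO keeps it behind `b`. Real workload managers typically reduce this kind of idle time with techniques such as backfill scheduling, which this sketch deliberately omits.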

https://computing.llnl.gov/tutorials/moab/

Deploying a Burstable and Event-driven HPC Cluster on AWS Using SLURM, Part 1

Google Codelab for creating two federated Slurm clusters on Google Cloud Platform

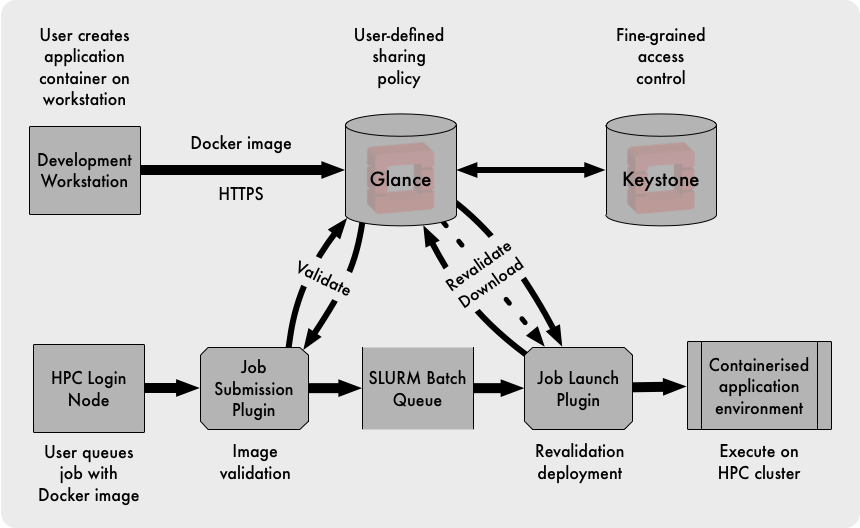

OpenStack and HPC Workload Management

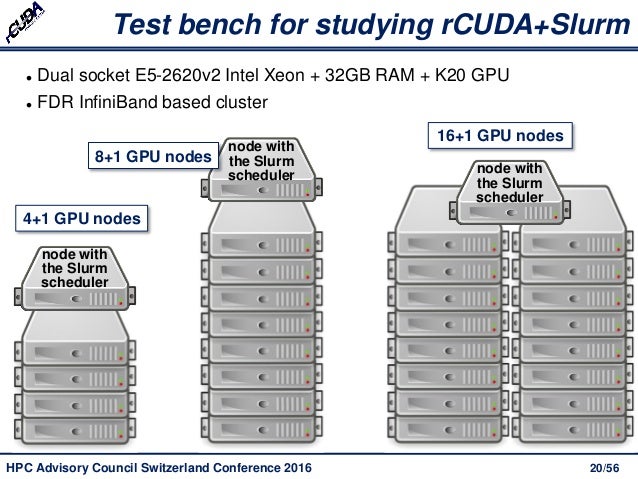

Increasing Cluster Performance by Combining rCUDA with Slurm

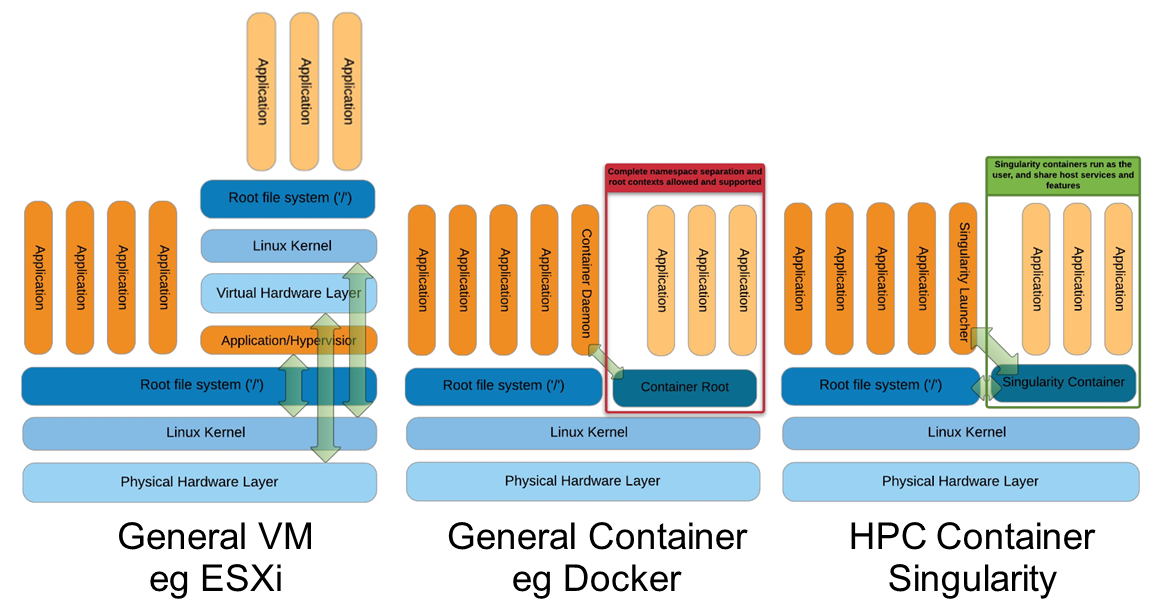

Docker vs Singularity vs Shifter in an HPC environment

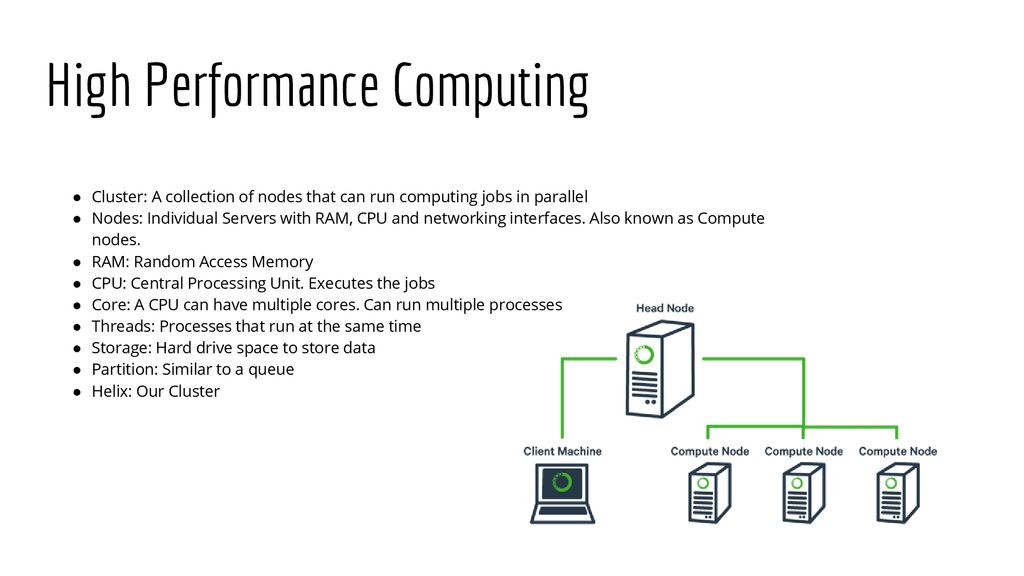

Helix - HPC/SLURM Tutorial

- SchedMD® is the core company behind the Slurm workload manager software, a free open-source workload manager designed specifically to satisfy the demanding needs of high performance computing.

https://www.schedmd.com/

Slurm vs Moab/Torque on Deepthought HPC clusters

Intro and Overview: What is a scheduler?

A high performance computing (HPC) cluster (

This is

The original

In 2009, we migrated to the Moab scheduler, still keeping Torque as our resource manager and Gold for allocation management

http://hpcc.umd.edu/hpcc/help/slurm-vs-moab.html

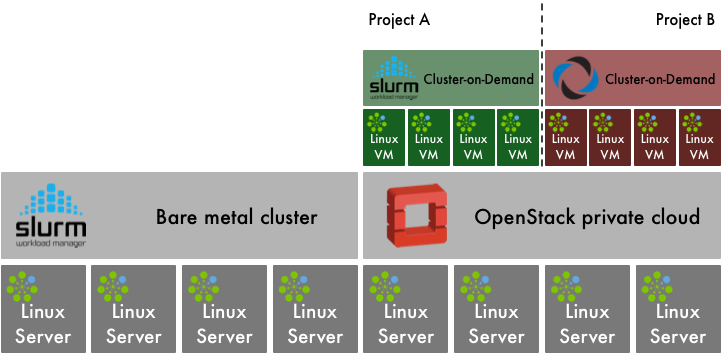

Intelligent HPC Workload Management Across Infrastructure and Organizational Complexity

Running computations on the Torque cluster

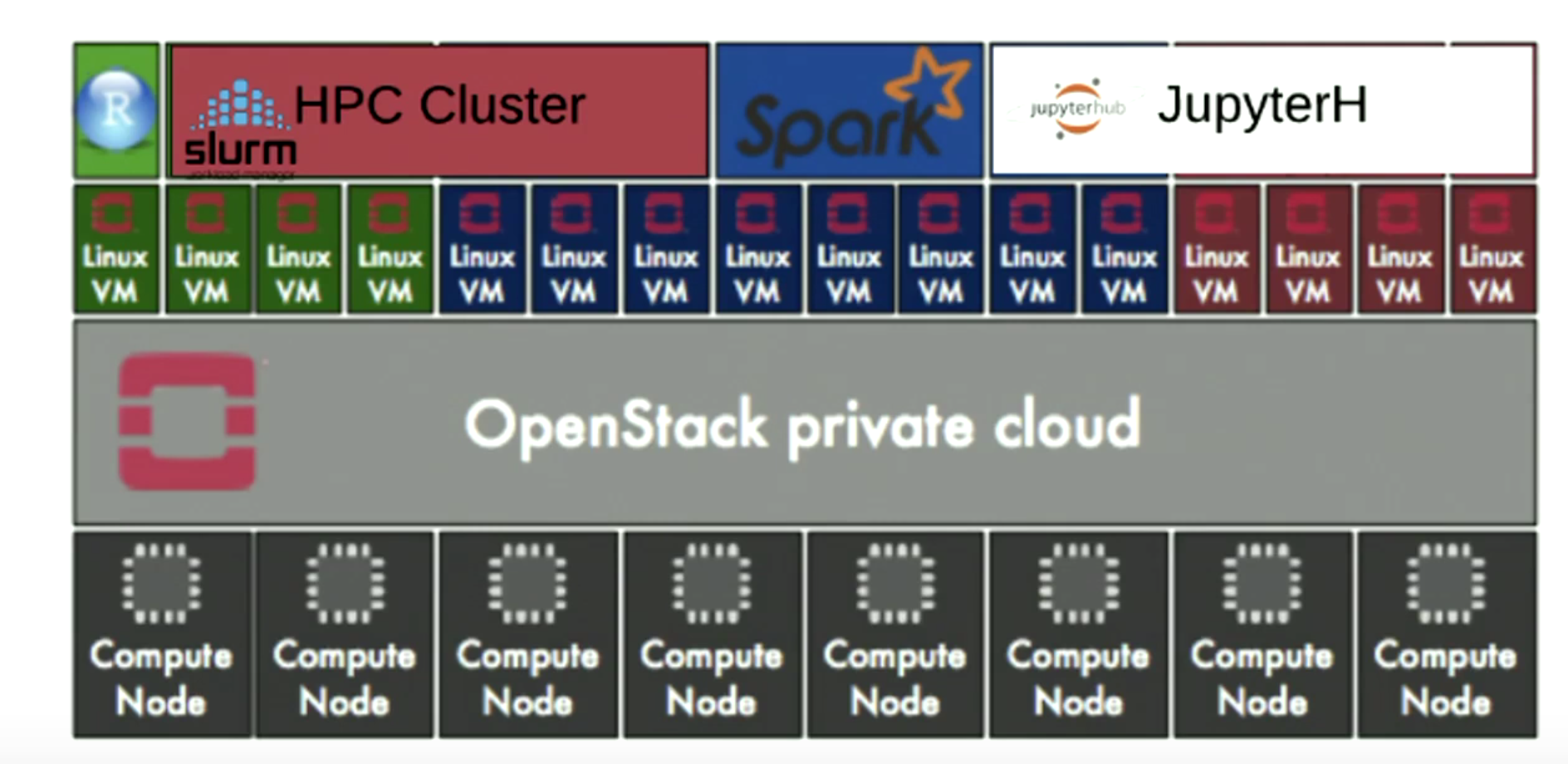

Workload Management in HPC and Cloud

Cluster as a Service: Managing multiple clusters for OpenStack clouds and other diverse frameworks

Overview of the UL HPC Viridis cluster, with its OpenStack-based private Cloud setup.

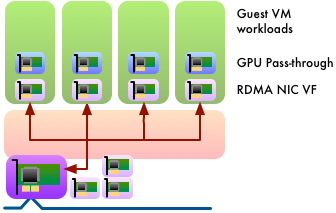

OpenStack and Virtualised HPC

How the Vienna Biocenter powers HPC with OpenStack
