Performance isn’t the only reason to limit API requests, either. API limiting, which is also known as rate limiting, is an essential component of Internet security, as DoS attacks can tank a server with unlimited API requests.

Rate limiting also helps make your API scalable. If your API blows up in popularity, there can be unexpected spikes in traffic, causing severe lag time.

What Is API Rate Limiting?

Rate limiting is a critical component of an API product’s scalability.

API owners typically measure processing limits in Transactions Per Second (TPS). Some systems may also have physical limits on data transfer. Both are part of backend rate limiting.

To prevent an API from being overwhelmed, API owners often enforce a limit on the number of requests, or the quantity of data clients can consume. This is called Application Rate Limiting.

If a user sends too many requests, API rate limiting can throttle client connections instead of disconnecting them immediately. Throttling lets clients keep using your services while still protecting your API.

However, keep in mind that there is always the risk of API requests timing out, and the open connections also raise the risk of DoS attacks.

Best Practices For API Rate Limiting

One approach to API rate limiting is to offer a free tier and a premium tier, with different limits for each. There are many things to consider when deciding what to charge for premium API access.

API providers will still need to consider the following when setting up their API rate limits.

Are requests throttled when they exceed the limit?

Do new calls and requests incur additional fees?

Do new calls and requests receive a particular error code and, if so, which one?

What You Need To Know About Rate Limiting

Many services that use REST APIs feature API limiting as a defense against DoS attacks and overloaded servers.

Some APIs feature soft limits, which allow users to exceed the limits for a short period. Others have a more hardline approach, immediately returning an HTTP 429 error and timing out, forcing the user to send a brand new query.
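As a rough sketch of the difference between soft and hard limits, the function below decides how to answer a request given how many requests a client has made in the current window. The `LimitPolicy` class, its `soft_margin` field, and the `X-RateLimit-Warning` header name are assumptions made for illustration. The soft limit is modeled here as a small count of extra requests rather than as a time period, which is a simplification. Only the 429 status comes from the text above; `Retry-After` is a standard HTTP header.

```python
from dataclasses import dataclass


@dataclass
class LimitPolicy:
    limit: int            # requests allowed per window
    soft_margin: int = 0  # extra requests tolerated; 0 means a hard limit


def respond(policy, requests_in_window, retry_after_s=1):
    """Return (status, headers) for the request that brought the
    client's count in the current window to `requests_in_window`."""
    if requests_in_window <= policy.limit:
        return 200, {}
    if requests_in_window <= policy.limit + policy.soft_margin:
        # Soft limit: still served, but the client is warned.
        # The header name is hypothetical.
        return 200, {"X-RateLimit-Warning": "limit exceeded"}
    # Hard limit: reject and tell the client when to try again.
    return 429, {"Retry-After": str(retry_after_s)}
```

With `soft_margin=0` the first request over the limit is rejected; with a positive margin a few extra requests are still served before the 429 appears.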

Setting a timeout is the easiest way to limit API requests.

Three Methods Of Implementing API Rate-Limiting

1. Request Queues

Android Volley

Amazon Simple Queue Service (SQS)

Setting Rules For Request Queues

2. Throttling

Throttling is another common way to practically implement rate-limiting. It lets API developers control how their API is used by setting up a temporary state, allowing the API to assess each request. When the throttle is triggered, a user may either be disconnected or simply have their bandwidth reduced.
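A minimal sketch of that decision, assuming the server already knows how many requests the client has made in the current window. The function name, the linear back-off, and the default values are all assumptions, and an added delay stands in for "reduced bandwidth" here.

```python
def throttle_delay(requests_in_window, limit, base_delay_s=0.5, max_delay_s=5.0):
    """Return the number of seconds to delay the current request.

    0.0 means the request is served immediately.
    None means the client is far enough over the limit to be disconnected.
    """
    over = requests_in_window - limit
    if over <= 0:
        return 0.0
    # Slow the client down more the further it goes over the limit.
    delay = base_delay_s * over
    if delay > max_delay_s:
        return None  # throttling is no longer enough: disconnect
    return delay
```

A client two requests over a limit of ten would wait one second with these defaults, while a client twenty over would be disconnected.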

3. Rate-limiting Algorithms

Leaky Bucket

Fixed Window

Sliding Log

Sliding Window
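The four algorithms named above can be sketched in a few lines each. This is an illustration under stated assumptions, not a production implementation: the class names and parameters are hypothetical, each limiter tracks a single client, the caller passes the current time in seconds as `now`, and the leaky bucket is shown in its "meter" form, which rejects overflow instead of queueing it.

```python
from collections import deque


class FixedWindow:
    """Allow at most `limit` requests in each aligned window of `window_s` seconds."""
    def __init__(self, limit, window_s):
        self.limit, self.window_s = limit, window_s
        self.window_id, self.count = None, 0

    def allow(self, now):
        window_id = int(now // self.window_s)
        if window_id != self.window_id:  # a new window starts: reset the counter
            self.window_id, self.count = window_id, 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


class SlidingLog:
    """Allow at most `limit` requests in any trailing `window_s` seconds."""
    def __init__(self, limit, window_s):
        self.limit, self.window_s = limit, window_s
        self.log = deque()  # timestamps of accepted requests

    def allow(self, now):
        while self.log and self.log[0] <= now - self.window_s:
            self.log.popleft()  # drop timestamps that fell out of the window
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False


class SlidingWindow:
    """Approximate a sliding window by weighting the previous window's count."""
    def __init__(self, limit, window_s):
        self.limit, self.window_s = limit, window_s
        self.window_id, self.curr, self.prev = None, 0, 0

    def allow(self, now):
        window_id = int(now // self.window_s)
        if window_id != self.window_id:
            # Only the immediately preceding window carries over.
            adjacent = self.window_id is not None and window_id == self.window_id + 1
            self.prev = self.curr if adjacent else 0
            self.window_id, self.curr = window_id, 0
        elapsed = (now % self.window_s) / self.window_s
        estimated = self.prev * (1 - elapsed) + self.curr
        if estimated < self.limit:
            self.curr += 1
            return True
        return False


class LeakyBucket:
    """Bucket holding up to `capacity` requests, draining `leak_rate` per second."""
    def __init__(self, capacity, leak_rate):
        self.capacity, self.leak_rate = capacity, leak_rate
        self.level, self.last = 0.0, None

    def allow(self, now):
        if self.last is not None:
            leaked = (now - self.last) * self.leak_rate
            self.level = max(0.0, self.level - leaked)
        self.last = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False
```

One design difference worth noticing: with a limit of two per second, the fixed window accepts two requests at the end of one window and two more at the start of the next, while the sliding log and sliding window reject that burst at the boundary.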

https://nordicapis.com/everything-you-need-to-know-about-api-rate-limiting/

- How Rate Limiting thwarts Layer 7 DDoS attacks

The changing nature of the DDoS attack

Brute force attacks against Layers 3 and 4, the network and transport layers of the internet, were devastating.

But Layer 3 and 4 attacks are not as effective as they used to be because content delivery networks (CDNs) have massive capacity capable of absorbing sudden spikes in network traffic.

As defenses evolve, so do the threats from sophisticated attackers. They are now employing more targeted attacks against the application layer, or Layer 7. A Layer 7 attack may look like a legitimate HTTP request, and it doesn’t take thousands of infected machines to launch; it only requires a small number of resources, an automated script and knowledge of a web application’s bottlenecks. Though this type of attack takes a bit more expertise than a brute force Layer 3 or 4 attack, when executed well, its effectiveness and high ROI mean that it is likely to become more common.

Application layer DDoS attacks take advantage of this vulnerability. To find the right spot to target, an attacker will look for parts of your web application that often require queries to your application or database backend.

On an e-commerce site, this might be a page that makes API calls to load a list of products, pricing, and product availability.

On a password-protected site, that might be a login request that checks credentials from the request body against known usernames and passwords.

But instead of making the request once or twice – a normal browsing behavior – a botnet involved in an application layer attack can make the request hundreds of thousands of times per second to overwhelm your backend services.

Without a mechanism to track the rate of HTTP requests from a client, each of these can appear to be a legitimate request, and a traditional DDoS mitigation system or firewall that inspects an individual HTTP request won’t detect and mitigate it.

How rate limiting works

It’s vital to control the rate of backend requests or login attempts at the edge to limit the damage from application DDoS attacks. The first step is to determine which parts of your website or application are most vulnerable to a DDoS attack. Once you have found those pages or API endpoints that involve backend queries, you can then determine the maximum allowed request rate for them.

For example, if you know that a typical user physically cannot submit login credentials to your login endpoint more than five times per second, then any client sending requests more often than that is likely malicious.

You could then set the rate limit on your login endpoint at five requests per second and lock out any client (identified by IP address, or by IP address and user-agent pair) that violates that rule.
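The login rule can be sketched as follows. This is an in-memory illustration, assuming the caller passes the current time in seconds as `now`. The class name and the 300-second lockout are assumptions; the text gives the five-per-second limit and the IP and user-agent key, but no lockout duration.

```python
from collections import defaultdict, deque


class LoginRateLimiter:
    def __init__(self, max_per_second=5, lockout_s=300.0):
        self.max_per_second = max_per_second
        self.lockout_s = lockout_s            # assumed lockout duration
        self.attempts = defaultdict(deque)    # client -> recent attempt times
        self.locked_until = {}                # client -> time the lockout ends

    def allow(self, ip, user_agent, now):
        """Return True if this login attempt may proceed."""
        client = (ip, user_agent)
        if self.locked_until.get(client, 0.0) > now:
            return False                      # still locked out
        recent = self.attempts[client]
        while recent and recent[0] <= now - 1.0:
            recent.popleft()                  # keep only the last second
        recent.append(now)
        if len(recent) > self.max_per_second:
            # The rule is violated: lock this client out.
            self.locked_until[client] = now + self.lockout_s
            return False
        return True
```

Keying on the IP and user-agent pair rather than the IP alone reduces the chance of locking out unrelated users who share one address, at the cost of being easier for an attacker to evade by rotating user agents.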

However, you might place a higher limit on how often a user can refresh your homepage or product details page since these pages make fewer backend queries or can be served from CDN cache.

You can also set different penalties for clients who violate rate limiting rules on different parts of the application. In one place, a violation might result in their subsequent requests getting blocked for five minutes; in another, you might redirect them to a CAPTCHA page.
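One way to express per-endpoint limits and penalties is a small rule table. Everything below is a hypothetical sketch: the paths, the `/captcha` redirect target, and the limits for the homepage and product pages are invented for illustration. Only the five-per-second login limit, the five-minute block, and the CAPTCHA redirect come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Penalty(Enum):
    BLOCK = "block"
    CAPTCHA = "captcha"


@dataclass(frozen=True)
class Rule:
    max_per_second: int
    penalty: Penalty
    block_s: int = 0  # only used when the penalty is BLOCK


# Hypothetical rule table: stricter limits where backend queries are expensive.
RULES = {
    "/login": Rule(5, Penalty.BLOCK, block_s=300),
    "/": Rule(50, Penalty.CAPTCHA),
    "/products": Rule(100, Penalty.CAPTCHA),
}


def decide(path, observed_rate):
    """Return (action, detail) for a client's observed request rate on `path`."""
    rule = RULES.get(path)
    if rule is None or observed_rate <= rule.max_per_second:
        return ("allow", None)
    if rule.penalty is Penalty.BLOCK:
        return ("block", rule.block_s)   # block for this many seconds
    return ("captcha", "/captcha")       # redirect to a challenge page
```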

https://www.verizondigitalmedia.com/blog/how-rate-limiting-thwarts-layer-7-ddos-attacks/

- Status Code

The Status-Code element is a 3-digit integer where the first digit of the Status-Code defines the class of response and the last two digits do not have any categorization role. There are 5 values for the first digit:

1xx: Informational. The request was received and the process is continuing.

2xx: Success. The action was successfully received, understood, and accepted.

3xx: Redirection. Further action must be taken in order to complete the request.

4xx: Client Error. The request contains bad syntax or cannot be fulfilled.

5xx: Server Error. The server failed to fulfill an apparently valid request.
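Because only the first digit carries the class, classifying a status code is a single integer division. A small sketch, with a hypothetical function name:

```python
STATUS_CLASSES = {
    1: "Informational",
    2: "Success",
    3: "Redirection",
    4: "Client Error",
    5: "Server Error",
}


def status_class(code):
    """Return the class name for a 3-digit HTTP status code."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]  # the first digit selects the class
```

For instance, the 429 returned by a rate limiter falls in the Client Error class.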

https://www.tutorialspoint.com/http/http_quick_guide.htm

- 3 Common Methods of API Authentication Explained

Three major methods of adding security to an API are HTTP Basic Auth, API Keys, and OAuth.

HTTP Basic Authentication

In this approach, an HTTP user agent simply provides a username and password to prove its identity. This approach does not require cookies, session IDs, login pages, or other such specialty solutions, and because it uses the HTTP header itself, there’s no need for handshakes or other complex response systems.

The problem is that, unless SSL is strictly enforced throughout the entire data cycle, the credentials are transmitted in the open over insecure lines.

Even if SSL is enforced, this results in a slowing of the response time. And even ignoring that, in its base form, HTTP Basic Authentication is not encrypted in any way. The credentials are only encoded in Base64, which is often erroneously proclaimed to be encryption.
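To see why Base64 is not encryption, the sketch below builds a Basic `Authorization` header value and then reverses it without any key. The function names are hypothetical; the `Basic` scheme and the `username:password` layout follow the HTTP Basic Authentication standard.

```python
import base64


def basic_auth_header(username, password):
    """Build the value of an Authorization header for HTTP Basic Auth."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_auth(header):
    """Recover (username, password) from the header value; no secret is needed."""
    scheme, _, token = header.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic Authorization header")
    decoded = base64.b64decode(token).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


header = basic_auth_header("Aladdin", "open sesame")
# header == "Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ=="
```

Anyone who can read the header on the wire can run the second function, which is why the transport itself has to be encrypted.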

In an internal network, especially in IoT situations where speed is of no essence, having an HTTP Basic Authentication system is acceptable as a balance between cost of implementation and actual function.

API Keys

In this approach, a unique generated value is assigned to each first-time user, signifying that the user is known. When the user attempts to re-enter the system, their unique key (sometimes generated from their hardware combination and IP data, and other times randomly generated by the server which knows them) is used to prove that they’re the same user as before.
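A minimal sketch of the randomly generated variant, assuming an in-memory store. The `ApiKeyStore` class and its method names are hypothetical, and a real service would normally store a hash of each key rather than the key itself.

```python
import hmac
import secrets


class ApiKeyStore:
    def __init__(self):
        self._keys = {}  # user_id -> issued key (kept in memory for this sketch)

    def issue(self, user_id):
        """Generate a random key for a first-time user and remember it."""
        key = secrets.token_urlsafe(32)
        self._keys[user_id] = key
        return key

    def is_known(self, user_id, presented_key):
        """Check that the presented key matches the one issued to this user."""
        expected = self._keys.get(user_id)
        if expected is None:
            return False
        # Constant-time comparison avoids leaking how many characters matched.
        return hmac.compare_digest(expected.encode(), presented_key.encode())
```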

The problem, however, is that API keys are often used for what they’re not – an API key is not a method of authorization, it’s a method of authentication.

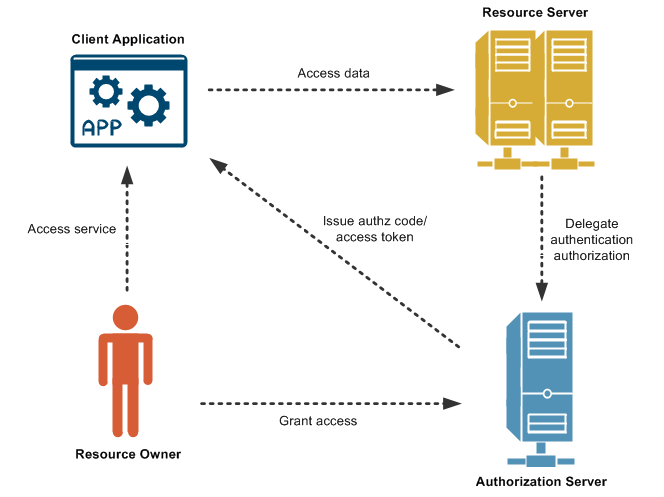

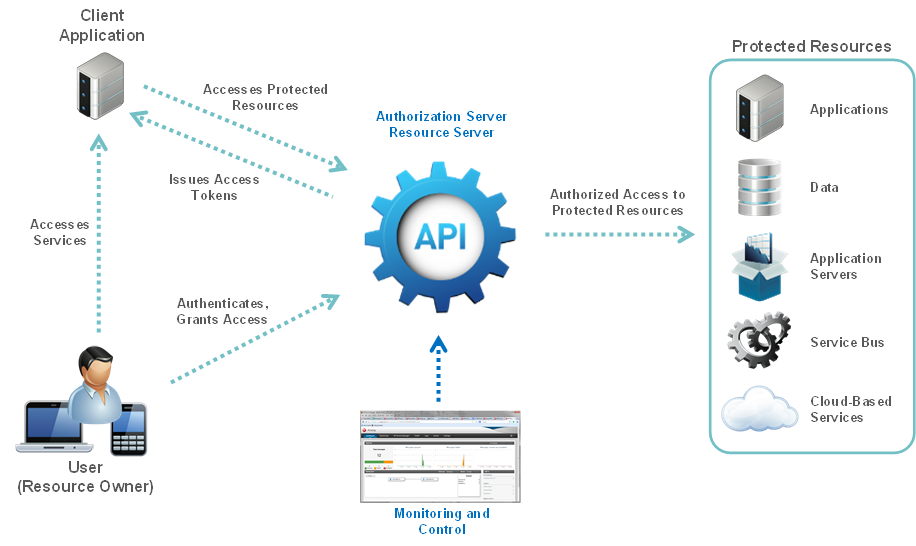

OAuth

OAuth is not technically an authentication method, but a method of both authentication and authorization.

In this approach, the user logs into a system. That system will then request authentication, usually in the form of a token. The user will then forward this request to an authentication server, which will either reject or allow this authentication. From here, the token is provided to the user, and then to the requester. Such a token can then be checked at any time independently of the user by the requester for validation, and can be used over time with strictly limited scope and age of validity.
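The "limited scope and age of validity" part can be illustrated with a small check that the requester might run on a token it holds. This is not the OAuth protocol itself, only a sketch of the final validation step; the `AccessToken` fields, the scope strings, and the function name are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessToken:
    value: str
    scopes: frozenset   # what the token is allowed to do
    expires_at: float   # time in seconds after which the token is invalid


def token_permits(token, required_scope, now):
    """Return True if the token is still valid and covers the requested scope."""
    return now < token.expires_at and required_scope in token.scopes
```

A token issued with only a read scope would fail this check for a write operation, and any token fails it once `expires_at` has passed.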

https://nordicapis.com/3-common-methods-api-authentication-explained/

- OAuth (Open Authorization) is an open standard for access delegation, commonly used as a way for Internet users to grant websites or applications access to their information on other websites but without giving them the passwords.

https://en.wikipedia.org/wiki/OAuth

- REST API - Response Codes and Statuses

the common HTTP response codes associated with REST APIs

https://documentation.commvault.com/commvault/v11/article?p=45599.htm
