- DCB is a suite of Institute of Electrical and Electronics Engineers (IEEE) standards that enable Converged Fabrics in the data center, where storage, data networking, cluster Inter-Process Communication (IPC), and management traffic all share the same Ethernet network infrastructure. DCB provides hardware-based bandwidth allocation to a specific

- In addition, you can use Windows PowerShell commands to enable Data Center Bridging (DCB), create a Hyper-V Virtual Switch with an RDMA virtual NIC (vNIC), and create a Hyper-V Virtual Switch with SET and RDMA vNICs.

https://docs.microsoft.com/en-us/windows-server/networking/sdn/software-defined-networking

- It is surprisingly hard to find a common definition of the All Flash Array (or AFA), but one thing that everyone appears to agree on is the shared nature of AFAs: they are network-attached, shared storage (i.e. SAN or NAS).

- This IDC study examines the role of flash storage in the data center, defining the many ways in which it is being deployed. This study defines the different markets for flash-optimized storage solutions, both internal and external, and discusses the workloads that products in each of the three all-flash array (AFA) markets are targeting. The definitions provided in this taxonomy represent the scope of IDC's flash-based storage systems research.

https://www.idc.com/getdoc.jsp?containerId=US42606418

- NAS provides both storage and a file system. A SAN (Storage Area Network) provides only block-based storage and leaves file system concerns on the "client" side. SAN protocols include SCSI, Fibre Channel, iSCSI, ATA over Ethernet (AoE), and HyperSCSI.

Hardware-based bandwidth allocation is essential if traffic bypasses the operating system and

Priority-based flow control is essential if the upper layer protocol, such as Fibre Channel, assumes

https://docs.microsoft.com/en-us/windows-server/networking/technologies/dcb/dcb-top

An all-flash array is a shared storage array in which

Hybrid AFAs

The hybrid AFA is the poor man’s flash array.

SSD-based AFAs

all-flash arrays that have

Ground-Up AFAs

The final category is the ground-up designed AFA

A ground-up array implements many of its features in hardware and also takes a holistic approach to managing the NAND flash media because it

https://flashdba.com/2015/06/25/all-flash-arrays-what-is-an-afa/

What to look for in an AFA? Given that the purpose of an AFA is speed, look at IOPS.

because AFAs have grown out of their original Fibre Channel-SAN environment and now come in

https://www.networkcomputing.com/storage/choosing-all-flash-array/1250381987

http://en.wikipedia.org/wiki/Storage_area_network

http://www.webopedia.com/TERM/S/SAN.html

- SANs typically utilize Fibre Channel connectivity, while NAS solutions typically use TCP/IP networks, such as Ethernet.

But the real difference is in how the data

NAS requires a dedicated piece of hardware, usually referred to as the head, which connects to the LAN. This device

SAN typically uses Fibre Channel and connects via storage devices

https://www.zadarastorage.com/blog/tech-corner/san-versus-nas-whats-the-difference/

- NAS vs SAN

cheap and poor performance

Fibre Channel needs a second switch

better performance

requires a dedicated card

- With the features we built into Openfiler, you can take advantage of file-based Network Attached Storage and block-based Storage Area Networking functionality in a single cohesive framework.

openmediavault is the next generation network attached storage (NAS) solution based on Debian Linux. It contains services like SSH, (S)FTP, SMB/CIFS, DAAP media server, RSync, BitTorrent client and many more. Thanks to the modular design of the framework, it can be enhanced via plugins.

https://www.openmediavault.org/

FreeNAS vs TrueNAS

http://www.freenas.org/blog/freenas-vs-truenas/

- NAS4Free supports sharing across Windows, Apple, and UNIX-like systems. It includes ZFS v5000, Software RAID (0, 1, 5), disk encryption, S.M.A.R.T. / email reports, etc., with the following protocols: CIFS/SMB (Samba), Active Directory Domain Controller (Samba), FTP, NFS, TFTP, AFP, RSYNC, Unison, iSCSI (initiator and target), HAST, CARP, Bridge, UPnP, and BitTorrent, all of which are highly configurable through its web interface. NAS4Free can be installed on a Compact Flash/USB/SSD key or a hard disk, or booted from a LiveCD/LiveUSB with a small USB key for config storage.

https://www.nas4free.org

- The iSCSI Extensions for RDMA (iSER) is a computer network protocol that extends the Internet Small Computer System Interface (iSCSI) protocol to use Remote Direct Memory Access (RDMA). RDMA is provided by either the Transmission Control Protocol (TCP) with RDMA services (iWARP), which uses the existing Ethernet setup and therefore needs no large hardware investment; RoCE (RDMA over Converged Ethernet), which does not need the TCP layer and therefore provides lower latency; or InfiniBand. It permits data to be transferred directly into and out of SCSI computer memory buffers (which connect computers to storage devices) without intermediate data copies and without much CPU intervention.

iSCSI


- BACKSTORES

FILEIO

Allows

PSCSI

Allows a local SCSI device of any type to

RAMDISK

Allows

https://www.systutorials.com/docs/linux/man/8-targetcli/

- When running a website or similar workload, in general, the best measurement of the disk subsystem is known as IOPS: Input/Output Operations per Second.

https://www.binarylane.com.au/support/solutions/articles/1000055889-how-to-benchmark-disk-i-o
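The IOPS figure above is simply completed operations divided by elapsed time, and multiplying it by the I/O size gives throughput. A minimal sketch of that arithmetic follows; the function names and the sample numbers (120,000 operations in 60 seconds at 4 KiB each) are made up for illustration and are not taken from the linked article.

```python
def iops(operations: int, seconds: float) -> float:
    """Input/output operations per second over a measurement window."""
    if seconds <= 0:
        raise ValueError("measurement window must be positive")
    return operations / seconds


def throughput_mib_per_s(iops_value: float, block_size_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed I/O size."""
    return iops_value * block_size_bytes / (1024 * 1024)


# Hypothetical benchmark result: 120,000 operations completed in 60 seconds.
measured = iops(120_000, 60.0)
# The same workload expressed as throughput, assuming 4 KiB per operation.
throughput = throughput_mib_per_s(measured, 4096)
```

The same IOPS number can therefore mean very different throughput depending on block size, which is why benchmark results usually state both.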

- In iSCSI terminology, the system that shares the storage is known as the target. The storage can be a physical disk, or an area representing multiple disks or a portion of a physical disk. For example, if the disk(s) are formatted with ZFS, a zvol can be created to use as the iSCSI storage.

https://www.freebsd.org/doc/handbook/network-iscsi.html

LinuxIO (LIO) has been the Linux SCSI target since kernel version 2.6.38. It supports a rapidly growing number of fabric modules, and all existing Linux block devices as backstores.

iSCSI stands for Internet Small Computer Systems Interface. It is an IP-based storage protocol that works on top of the Internet Protocol by carrying SCSI commands over an IP network.

What is

This protocol encapsulates SCSI data into TCP packets.

This solution is cheaper than the Fibre Channel SAN (Fibre channel HBAs and switches are expensive).

From the host view the user sees the storage array LUNs like a local

The most important difference is that

Some critics said

Initiator:

The initiator is the name of the

Target:

The target is the name of the

The

The type string can be

iqn.

eui.

Most of the implementations use the

initiator name: iqn.1993-08

1993-08

org

01.35ef13adb6d

Our target name is similar (iqn.1992-08.com
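The `iqn.` names in the fragments above follow a fixed layout. As a minimal sketch, assuming the usual form `iqn.<yyyy-mm>.<reversed domain>[:<identifier>]`, a name can be split into its parts like this. The `parse_iqn` helper and the example name are hypothetical and are not part of Open-iSCSI.

```python
import re
from typing import NamedTuple, Optional


class Iqn(NamedTuple):
    date: str                  # year and month tied to the naming authority
    authority: str             # reversed domain name, e.g. "com.example"
    identifier: Optional[str]  # optional string after the colon


# Assumed layout: iqn.<yyyy-mm>.<reversed domain>[:<identifier>]
_IQN_RE = re.compile(r"^iqn\.(\d{4}-\d{2})\.([^:]+)(?::(.+))?$")


def parse_iqn(name: str) -> Iqn:
    """Split an IQN-style name into its parts, or raise ValueError."""
    match = _IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not an IQN-style name: {name!r}")
    return Iqn(*match.groups())


# Hypothetical name used only for illustration.
example = parse_iqn("iqn.2001-04.com.example:storage.disk1")
```

Names of the other type string, `eui.`, do not match this pattern and are rejected by the sketch.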

The Open-

It contains kernel modules and an

/etc/init

The configuration files are under the /etc/

nodes directory: The directory contains the nodes and their targets.

https://www.howtoforge.com/iscsi_on_linux

- Open-

iSCSI is partitioned into user and kernel parts.

User space contains the entire control plane: configuration manager,

The Open-

http://www.open-iscsi.com

iSCSI (Internet Small Computer Systems Interface). Like Fibre Channel, iSCSI provides all of the necessary components for the construction of a Storage Area Network.

Linux Open-

Microsoft

VMware

Such an

https://www.thomas-krenn.com/en/wiki/ISCSI_Basics

- Open-source SCSI targets

LIO (Linux-IO) is the standard open-source SCSI target in Linux by

In Linux, there are also three out-of-tree or legacy SCSI targets:

STGT (SCSI Target Framework) has been the standard

http://www.linux-iscsi.org/wiki/Features#Comparison

targetcli is the general management platform for the LinuxIO.

http://www.linux-iscsi.org/wiki/Targetcli

InfiniBand provides the target for various IB Host Channel Adapters (HCAs).

The

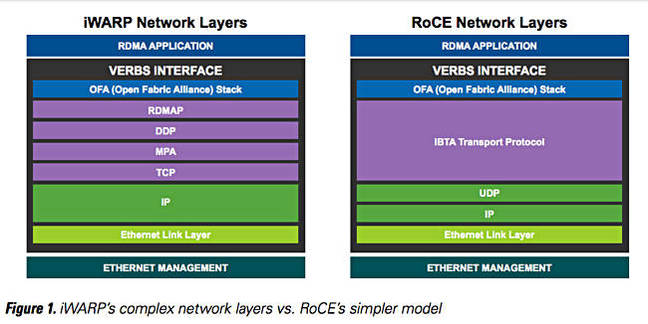

RDMA over Converged Ethernet (

Host Channel Adapter (HCA): provides the mechanism to connect

Converged Enhanced Ethernet (CEE): A set of standards that allow enhanced communication over an Ethernet network.

Data Center Bridging (DCB): A set of standards that allow enhanced communication over an Ethernet network.

http://www.linux-iscsi.org/wiki/InfiniBand

- The SCSI RDMA Protocol (SRP) is a network protocol that allows one computer system to access SCSI devices attached to another computer system via RDMA

http://www.linux-iscsi.org/wiki/SRP

- Sharing via

iSCSI

https://pthree.org/2012/12/31/zfs-administration-part-xv-iscsi-nfs-and-samba

iSCSI is a way to share storage over a network. Unlike NFS, which works at the file system level, iSCSI works at the block device level.

In

https://www.freebsd.org/doc/handbook/network-iscsi.html

iSCSI on Gluster can be set up using the Linux Target driver. This is a user space daemon that accepts iSCSI (as well as iSER and FCoE). It interprets iSCSI CDBs and converts them into some other I/O operation, according to user configuration. In our case, we can convert the CDBs into file operations that run against a gluster file. The file represents the LUN, and the offset in the file represents the LBA.

LIO is a replacement for the Linux Target Driver that

In this setup a single path leads to

https://docs.gluster.org/en/v3/Administrator%20Guide/GlusterFS%20iSCSI/
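The file-as-LUN mapping in the Gluster excerpt above comes down to one multiplication: the byte offset in the backing file is the LBA times the block size. The sketch below illustrates that idea only. The 512-byte block size, the helper names, and the in-memory file standing in for the gluster file are all assumptions, not the target daemon's actual code.

```python
import io

BLOCK_SIZE = 512  # assumed logical block size in bytes


def lba_to_offset(lba: int, block_size: int = BLOCK_SIZE) -> int:
    """Byte offset in the backing file for a logical block address."""
    if lba < 0:
        raise ValueError("LBA must be non-negative")
    return lba * block_size


def read_blocks(backing, lba: int, count: int, block_size: int = BLOCK_SIZE) -> bytes:
    """Serve a read of `count` blocks from a file-like LUN."""
    backing.seek(lba_to_offset(lba, block_size))
    return backing.read(count * block_size)


def write_blocks(backing, lba: int, data: bytes, block_size: int = BLOCK_SIZE) -> None:
    """Serve a write of whole blocks to a file-like LUN."""
    if len(data) % block_size != 0:
        raise ValueError("data must be a whole number of blocks")
    backing.seek(lba_to_offset(lba, block_size))
    backing.write(data)


# In-memory stand-in for the file backing the LUN (8 zeroed blocks).
lun = io.BytesIO(bytes(8 * BLOCK_SIZE))
write_blocks(lun, 3, b"\xab" * BLOCK_SIZE)
```

Reading block 3 back returns the written pattern, while neighbouring blocks stay zeroed, because each LBA owns a fixed, non-overlapping byte range of the file.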

- Edge computing

Edge computing is

https://www.docker.com/solutions/docker-edge

Why vSAN?

new storage protocol, vSAN

Fibre Channel, block-level iSCSI, and NFS protocols already exist

because of operational requirements

VM - VMDK on ESXi is backed by a datastore, either by LUN (block) or NFS

LUN configured by RAID5

what if a new VM-VMDK requires a LUN configured by RAID6? create a new datastore (DS) backed by a LUN configured by RAID6

service requirements are delivered not per VM but per LUN

storage administrator has to preconfigure everything

SPBM, Storage Policy Based Management

- Introduction to VMware vSAN

total cost of ownership (TCO)

converts DAS

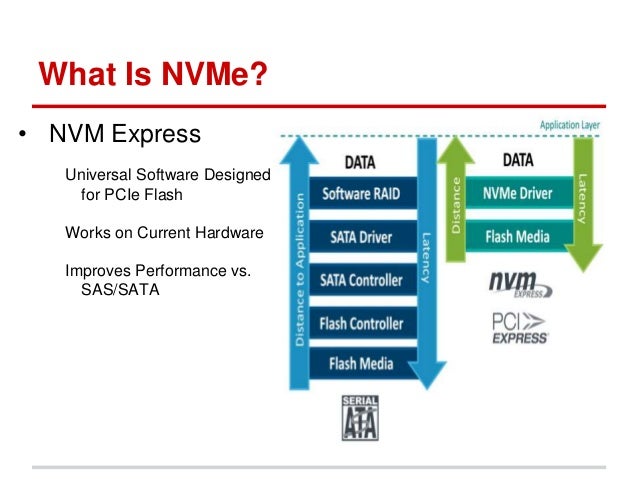

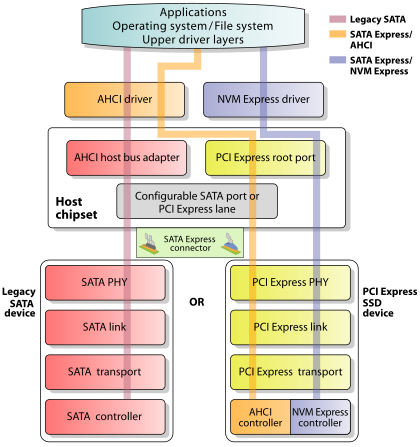

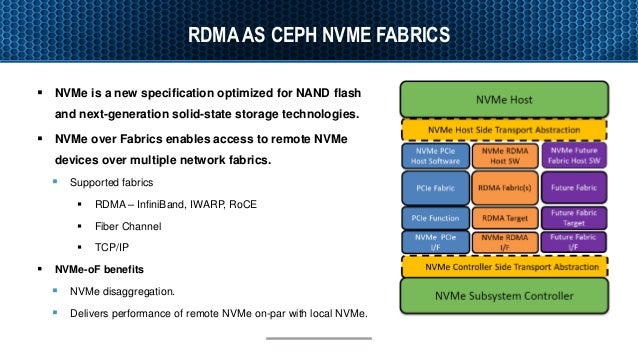

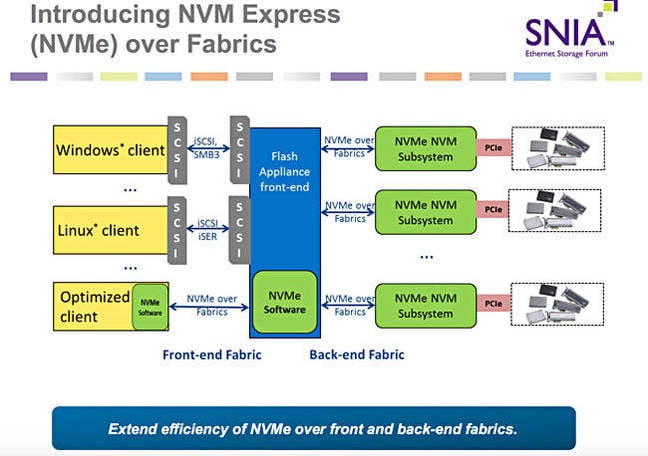

- SAS (based on the SCSI command set) and SATA (based on the ATA command set) are historic protocols developed for mechanical media. They do not have the characteristics to take advantage of the benefits of flash media.

http://www.computerweekly.com/feature/Storage-briefing-NVMe-vs-SATA-and-SAS

- SATA, or Serial ATA, is a type of connection interface used by the SSD to communicate data with your system.

You can think of PCIe, or Peripheral Component Interconnect Express, as a more direct connection to a motherboard: a motherboard extension, if you will. It’s typically used with things like graphics cards and network cards, which need low latency, but has proven useful for data storage as well.

M.2 (“M dot two”) and U.2 (“U dot two”) are form factor standards that specify the shape, dimensions, and layouts of a physical device. Both the M.2 and U.2 standards support both SATA and PCIe connections.

https://www.makeuseof.com/tag/pcie-vs-sata-type-ssd-best/

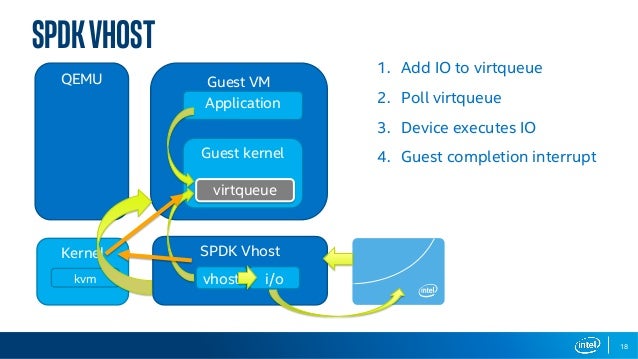

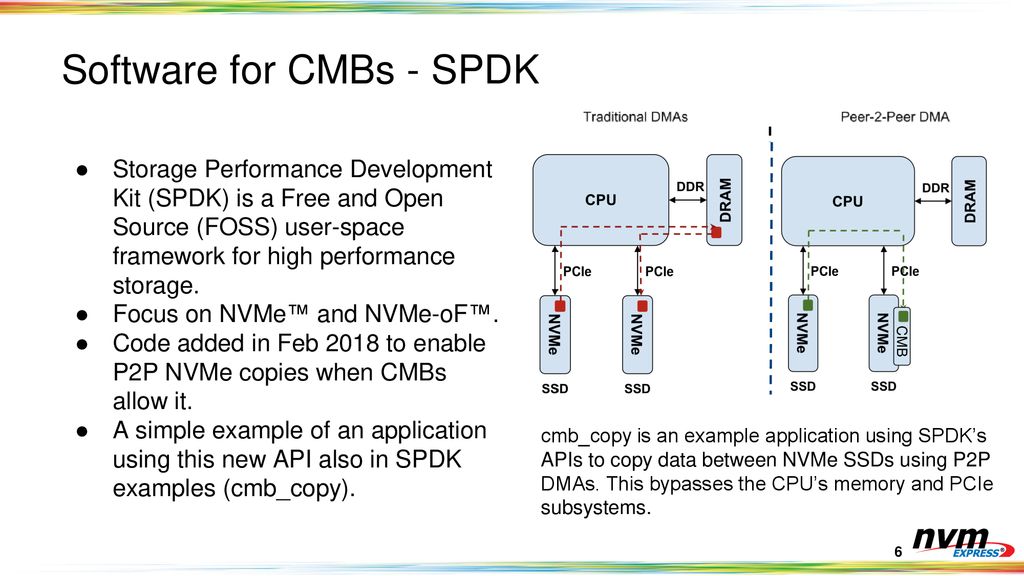

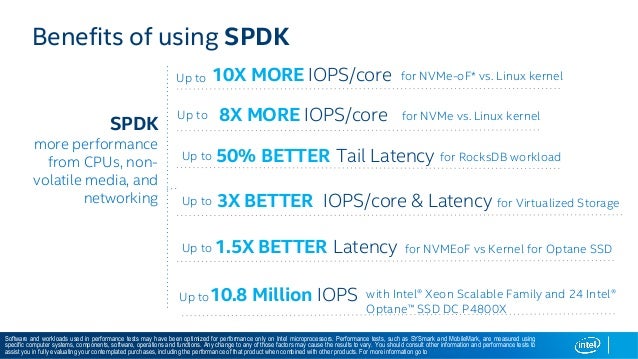

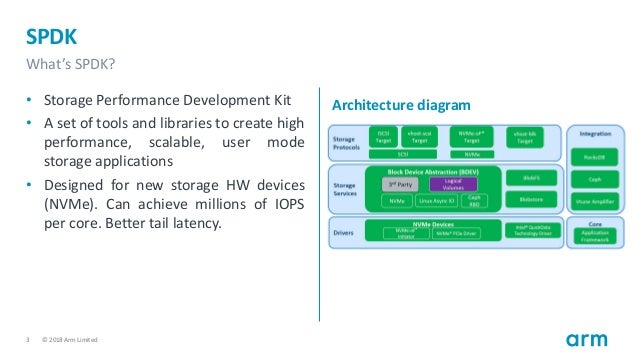

- The Storage Performance Development Kit (SPDK) provides a set of tools and libraries for writing high performance, scalable, user-mode storage applications. It achieves high performance by moving all of the necessary drivers into userspace and operating in a polled mode instead of relying on interrupts, which avoids kernel context switches and eliminates interrupt handling overhead.

https://github.com/spdk/spdk

- The Storage Performance Development Kit (SPDK) provides a set of tools and libraries for writing high performance, scalable, user-mode storage applications. It achieves high performance through the use of a number of key techniques:

Moving all of the necessary drivers into userspace, which avoids syscalls and enables zero-copy access from the application.

Polling hardware for completions instead of relying on interrupts, which lowers both total latency and latency variance.

Avoiding all locks in the I/O path, instead relying on message passing.

https://spdk.io/
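The polled-mode idea above can be illustrated with a toy model: the application thread repeatedly checks a completion queue itself instead of sleeping until an interrupt arrives. This is only a conceptual sketch in Python. `FakeDevice`, `tick`, and `process_completions` are invented names for the illustration and are not SPDK's API.

```python
from collections import deque


class FakeDevice:
    """Stand-in for a device with submission and completion queues."""

    def __init__(self):
        self.submitted = deque()
        self.completed = deque()

    def submit(self, request_id):
        self.submitted.append(request_id)

    def tick(self):
        """Pretend the hardware finishes one outstanding request."""
        if self.submitted:
            self.completed.append(self.submitted.popleft())


def process_completions(device, callback, max_completions=0):
    """Drain finished requests without blocking; return how many were handled.

    A max_completions of 0 means "no limit" in this sketch.
    """
    handled = 0
    while device.completed and (max_completions == 0 or handled < max_completions):
        callback(device.completed.popleft())
        handled += 1
    return handled


device = FakeDevice()
done = []
for request_id in range(3):
    device.submit(request_id)

# Busy-poll from the application thread: no interrupt and no blocking wait.
while len(done) < 3:
    device.tick()
    process_completions(device, done.append)
```

The trade-off in a real system is that the polling thread keeps a CPU core busy even when idle, in exchange for never paying for a context switch or interrupt handler on the I/O path.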

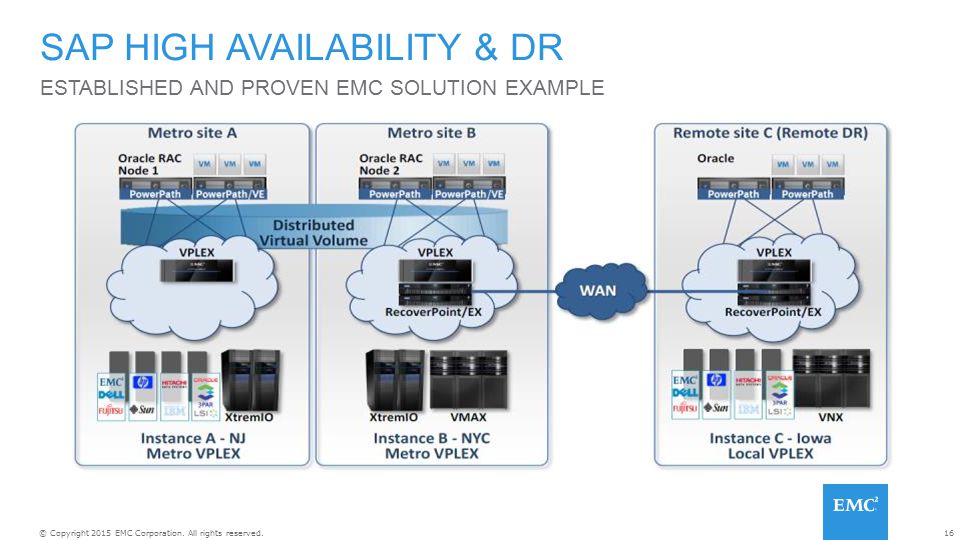

- VPLEX implements a distributed "virtualization" layer within and across geographically disparate Fibre Channel storage area networks and data centers

Switched Fabric or switching fabric is a network topology in which network nodes interconnect via one or more network switches (particularly crossbar switches).

Because a switched fabric network spreads network traffic across multiple physical links, it yields higher total throughput than broadcast networks, such as the early 10BASE5 version of Ethernet, or most wireless networks such as Wi-Fi.

The generation of high-speed serial data interconnects that appeared in 2001–2004 which provided point-to-point connectivity between processor and peripheral devices are sometimes referred to as fabrics; however, they lack features such as a message passing protocol

In the Fibre Channel Switched Fabric (FC-SW-6) topology, devices are connected to each other through one or more Fibre Channel switches.

This topology has the best scalability of the three FC topologies (the other two are Arbitrated Loop and point-to-point).

It is the only one requiring switches.

Visibility among devices (called nodes) in a fabric is typically controlled with Fibre Channel zoning.

Multiple switches in a fabric usually form a mesh network, with devices being on the "edges" ("leaves") of the mesh.

Most Fibre Channel network designs employ two separate fabrics for redundancy.

The two fabrics share the edge nodes (devices), but are otherwise unconnected.

One of the advantages of such a setup is failover capability: if one link breaks or a fabric goes out of order, datagrams can be sent via the second fabric.

The fabric topology allows the connection of up to the theoretical maximum of 16 million devices, limited only by the available address space (2^24).

Intelligent Storage System

A new breed of storage solutions, known as intelligent storage systems, has evolved.

These arrays have an operating environment that controls the management, allocation, and utilization of storage resources.

Components of an Intelligent Storage System

An intelligent storage system consists of four key components: front end, cache, back end, and physical disks. An I/O request received from the host at the front-end port is processed through cache and the back end, to enable storage and retrieval of data from the physical disk. A read request can be serviced directly from cache if we find the requested data in cache.

http://www.sanadmin.net/2015/10/fc-storage.html
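The read path described above (serve from cache on a hit, otherwise go through the back end to the disks) can be sketched as a small read cache. This is an illustration under assumptions: the `ReadCache` class is hypothetical, a plain dictionary stands in for the physical disks, and least-recently-used eviction is one possible policy that the source does not specify.

```python
from collections import OrderedDict


class ReadCache:
    """Tiny LRU read cache in front of a slower backing store."""

    def __init__(self, backend, capacity):
        self.backend = backend      # mapping of block number -> bytes (stands in for the disks)
        self.capacity = capacity    # maximum number of cached blocks
        self.blocks = OrderedDict() # cached blocks, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.blocks:
            # Cache hit: serve directly from cache and mark as recently used.
            self.hits += 1
            self.blocks.move_to_end(block)
            return self.blocks[block]
        # Cache miss: fetch from the back end, then keep a copy in cache.
        self.misses += 1
        data = self.backend[block]
        self.blocks[block] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return data


disks = {n: bytes([n]) * 4 for n in range(4)}
cache = ReadCache(disks, capacity=2)
first = cache.read(1)   # miss: fetched from the backing store
second = cache.read(1)  # hit: served from cache
```

Repeated reads of the same block only touch the backing store once, which is the benefit the excerpt attributes to the cache component.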
